Watch the Video Tutorial

💡 Pro Tip: After watching the video, continue reading below for detailed step-by-step instructions, code examples, and additional tips that will help you implement this successfully.

Table of Contents


- Introduction: The Power of Unified LLM Access in n8n

- Required Resources and Cost-Benefit Analysis

- Step-by-Step Guide: Connecting OpenRouter to n8n

- Critical Safety & Best Practice Tips

- Key Takeaways

- Conclusion

- Frequently Asked Questions (FAQ)

- Q: Can I use my existing API keys from OpenAI or Anthropic directly with OpenRouter?

- Q: What if a specific LLM I want to use isn’t available on OpenRouter?

- Q: How do I monitor my spending and token usage on OpenRouter?

- Q: Is n8n free to use, or do I need a paid subscription?

- Q: Can OpenRouter help me compare the performance of different LLMs for a specific task?

Introduction: The Power of Unified LLM Access in n8n

Integrating Large Language Models (LLMs) into our automation workflows isn’t just a cool party trick anymore; it’s becoming absolutely essential. Think of it like giving your automation superpowers! But here’s the kicker: managing a gazillion API keys for different LLM providers can quickly turn into a tangled mess. It’s like trying to juggle a dozen different remote controls just to watch one TV show. Annoying, right?

This is exactly where OpenRouter.ai swoops in like a superhero. It’s like a universal remote for all your LLMs: a single API key gives you access to a vast array of models. Pretty neat, huh? In this guide, I’m going to walk you through connecting OpenRouter to your n8n instance so you can use nearly 100 different AI models in your automations. Imagine the possibilities!

What is OpenRouter.ai?

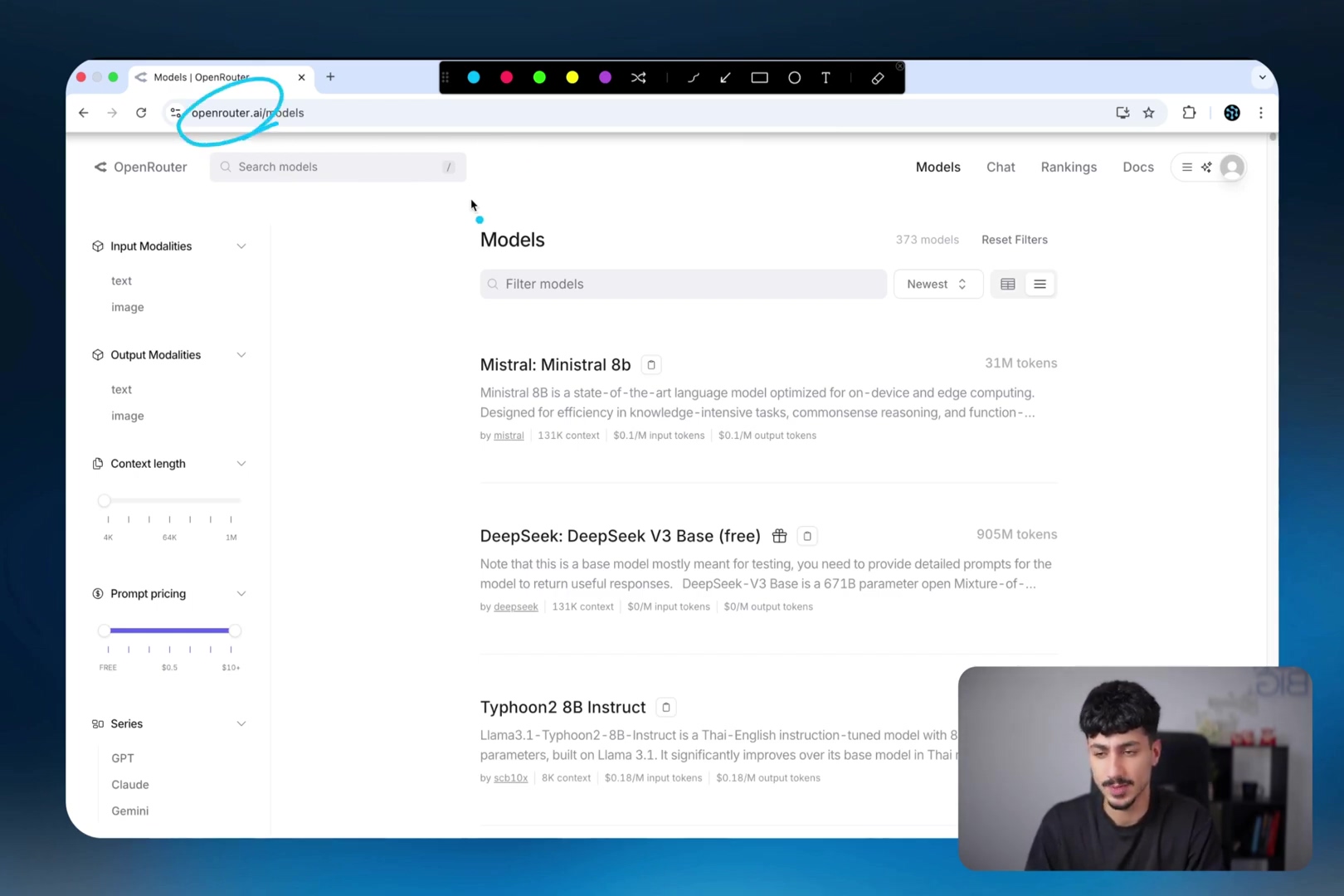

So, what exactly is OpenRouter.ai? Picture this: it’s a bustling central hub, a universal gateway to tons of different LLMs. Instead of having to go to each individual LLM provider – like Mistral, DeepSeek, or Typhoon – and get a separate API key for each one, OpenRouter brings them all under one roof. You get one API key from OpenRouter, and boom! You’ve got access to a whole universe of models.

This isn’t just about making your life easier (though it totally does!). It also simplifies credential management, centralizes your usage tracking (so you know exactly what you’re using), and helps you keep an eye on costs. For developers and businesses, it’s an invaluable tool. Seriously, it’s a game-changer.

Benefits of Using OpenRouter with n8n

Beyond just simplifying API key management (which, let’s be honest, is a huge win on its own), OpenRouter brings some serious advantages to the table when paired with n8n:

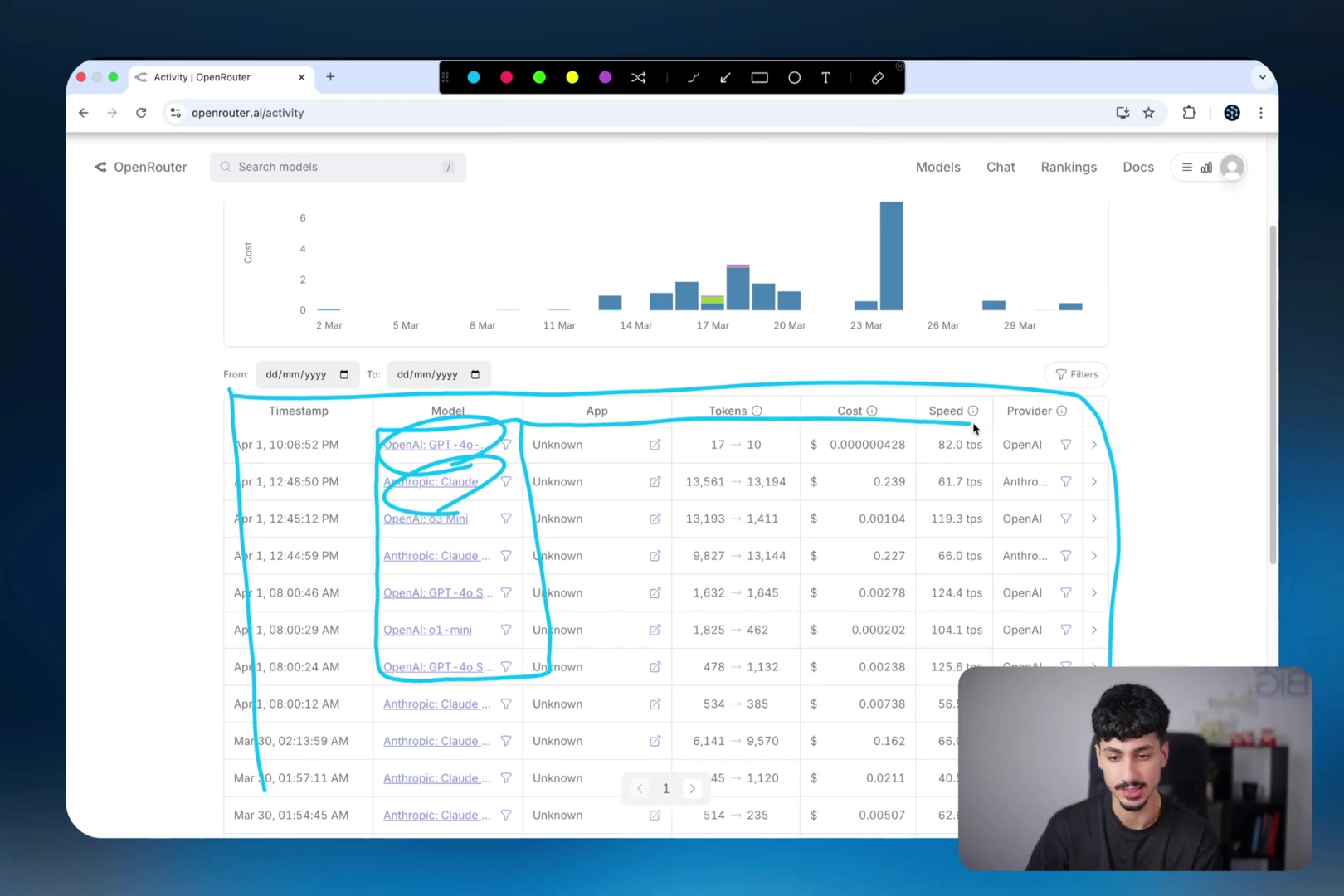

- Centralized Cost Tracking: Ever tried to figure out how much you’re spending across different LLM providers? It’s a nightmare! With OpenRouter, you can monitor all your LLM expenses from a single dashboard. This is super handy, especially when your complex workflows might be bouncing between models from OpenAI, Anthropic, and Google Gemini all at once.

- Unified Access: Remember that universal remote analogy? This is it! Access a vast library of LLMs without needing to set up individual integrations for each one. It’s like having a buffet of AI models at your fingertips.

- Streamlined Testing: This is one of my favorite parts. During workflow development, you can easily switch between different models. Want to see if a cheaper model can do the job just as well, or if a more powerful one gives you better results? Just a few clicks, and you’re testing! This helps you find the most cost-effective or performant option without tearing your hair out.

Required Resources and Cost-Benefit Analysis

Alright, before we dive into the nitty-gritty, let’s talk about what you’ll need and, more importantly, what it’s going to cost you. Understanding the financial side is crucial for optimizing your LLM usage, so there are no surprises down the road.

Resource Checklist

Think of this as your shopping list before you start building your awesome automation. You won’t need much, but these are non-negotiable:

| Item | Description |
|---|---|
| n8n Instance | A running n8n server (self-hosted or cloud). This is where your workflows live! |
| OpenRouter Account | A registered account on OpenRouter.ai. This is your gateway to all those LLMs. |
| Payment Method | For adding credits to your OpenRouter account. Gotta pay to play, right? |
| Internet Access | A stable connection for all those API calls. No internet, no AI magic! |

OpenRouter Fee Structure

Now, about the money. OpenRouter does charge a small fee for its aggregation service. It’s important to factor this into your cost calculations, so you’re not caught off guard. Think of it as a convenience fee for making your life so much easier!

- 5% fee on top of the cost of credits purchased via credit card (plus a $0.35 transaction fee). So, if you buy $100 worth of credits, you’ll pay $105.35.

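- 4% fee for crypto payments (plus a 1% Coinbase processing fee). If you’re into crypto, this can be slightly cheaper: assuming both percentages apply to the credit amount, $100 of credits costs about $105, which saves you the fixed $0.35 card transaction fee.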

- An additional 5% fee is deducted from the cost of requests if you use your own provider API keys through OpenRouter. This is for advanced users who want to centralize billing but use their existing keys.
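To sanity-check the arithmetic, here’s a small Python sketch that applies the fees exactly as listed above. The constant and function names are my own, and it assumes the 1% Coinbase fee is charged on the credit amount. OpenRouter’s pricing can change, so treat these numbers as illustrative rather than authoritative.

```python
from decimal import Decimal, ROUND_HALF_UP

# Fee figures as quoted in this article (illustrative; check OpenRouter's
# current pricing before relying on them).
CARD_PERCENT = Decimal("0.05")      # 5% on credit card purchases
CARD_FIXED = Decimal("0.35")        # plus $0.35 per card transaction
CRYPTO_PERCENT = Decimal("0.04")    # 4% on crypto purchases
COINBASE_PERCENT = Decimal("0.01")  # 1% Coinbase fee, assumed to apply to the credit amount
BYOK_PERCENT = Decimal("0.05")      # 5% deducted from request cost with your own provider keys

CENT = Decimal("0.01")


def card_total(credits: Decimal) -> Decimal:
    """Amount charged to buy `credits` dollars of credits by card."""
    return (credits * (1 + CARD_PERCENT) + CARD_FIXED).quantize(CENT, ROUND_HALF_UP)


def crypto_total(credits: Decimal) -> Decimal:
    """Amount charged to buy `credits` dollars of credits with crypto."""
    return (credits * (1 + CRYPTO_PERCENT + COINBASE_PERCENT)).quantize(CENT, ROUND_HALF_UP)


def byok_fee(request_cost: Decimal) -> Decimal:
    """OpenRouter's cut when a request runs on your own provider key."""
    return (request_cost * BYOK_PERCENT).quantize(CENT, ROUND_HALF_UP)


if __name__ == "__main__":
    credits = Decimal("100")
    print(card_total(credits))      # 105.35
    print(crypto_total(credits))    # 105.00
    print(byok_fee(Decimal("20")))  # 1.00
```

Using Decimal rather than float keeps the cents exact, which matters once you start comparing small percentage differences across many top-ups.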

Cost-Benefit Analysis: DIY vs. Commercial LLM Access

Let’s break it down. Is it better to go the DIY route and connect directly to each LLM provider, or use OpenRouter? Here’s a quick comparison to help you decide:

| Feature | DIY LLM Integration (Direct API) | OpenRouter Integration (Unified API) |
|---|---|---|
| API Keys | Multiple, per provider. A real headache to manage! | Single, unified. One key to rule them all! |
| Cost Tracking | Dispersed, requires manual aggregation. Get ready for some spreadsheet fun. | Centralized, unified dashboard. All your costs in one place. |
| Model Access | Limited to directly integrated providers. Only the ones you’ve set up. | Access to almost 100 models from various providers. A smorgasbord of AI! |
| Flexibility | High, direct control. You’re the boss! | High, easy switching between models. Experimentation is a breeze. |
| Overhead | Lower direct cost, higher management effort. You save pennies but spend hours. | Small fee (4-5%), significantly lower management effort. Pay a little, save a lot of time and sanity. |
| Ideal Use | Production workflows with stable model choice. Once you’ve picked your champion model. | Prototyping, testing, and diverse model utilization. Perfect for exploring and building. |

So, while OpenRouter does add a small percentage fee, the convenience and centralized management it offers, especially during testing and development phases, often outweigh this additional cost. For me, the time saved and the flexibility gained are totally worth it. Now, if you’re running a super high-volume, deployed workflow and you’ve settled on one specific model, you might consider switching back to direct LLM provider connections to avoid that small fee. But for getting started, exploring, and building, OpenRouter is my top recommendation. It’s just too good to pass up!

Step-by-Step Guide: Connecting OpenRouter to n8n

Alright, enough talk! Let’s get our hands dirty. Connecting OpenRouter to your n8n workflow is surprisingly straightforward. Follow these steps, and you’ll be an LLM integration wizard in no time.

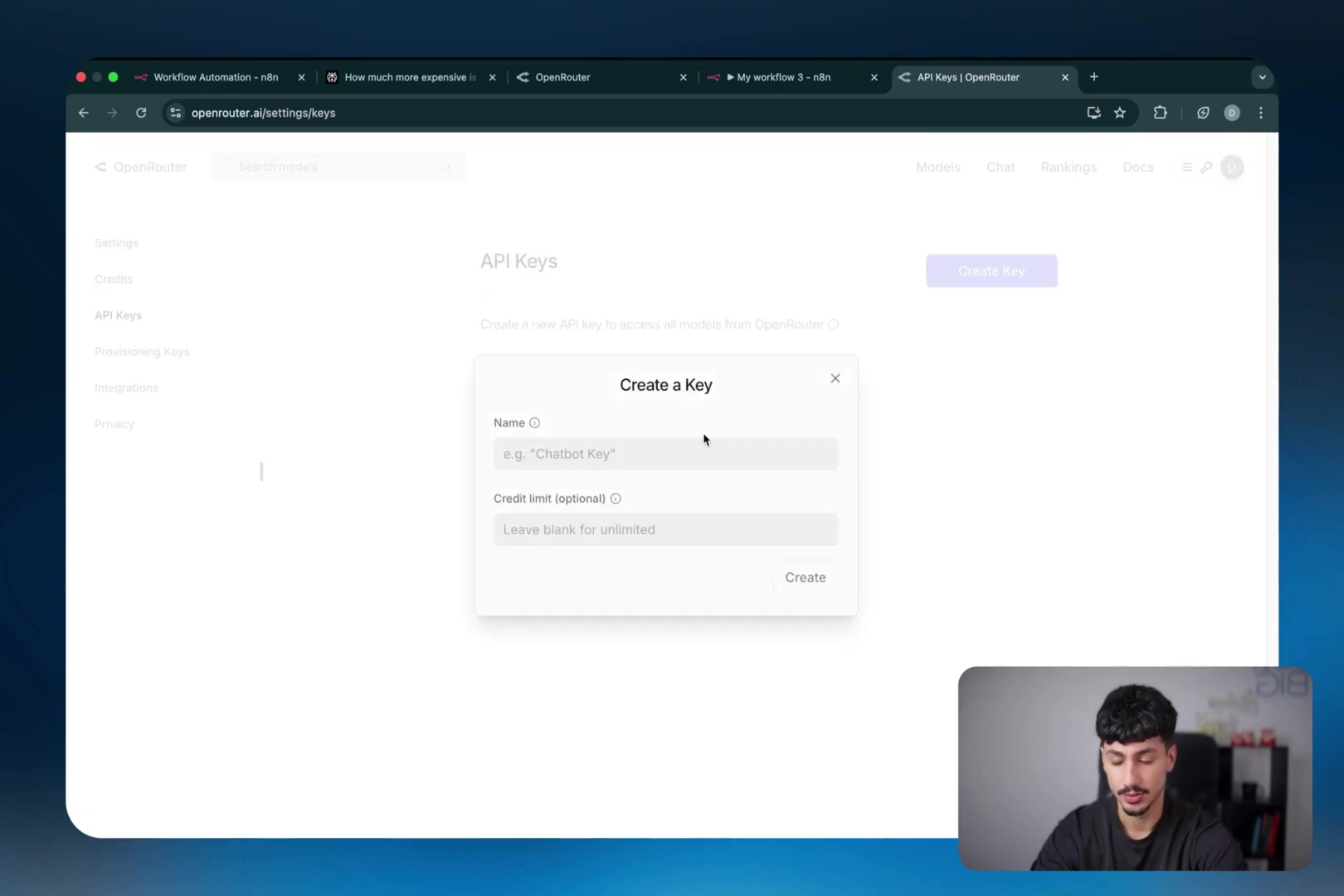

1. Create an OpenRouter Account and Generate an API Key

First things first, you need an OpenRouter account. Head over to OpenRouter.ai, sign in (or create a new account if you don’t have one). Once you’re logged in, you’ll need to add some credits to your account. Think of it like putting money on a gift card – you’ll use these credits to pay for your LLM usage. After you’ve funded your account, we can generate that all-important API key:

- Look for the burger menu (that’s the three horizontal lines, usually in the top right corner) and click on it.

- Select ‘Keys’ from the dropdown menu. This is where all your API keys live.

- Click the ‘Create Key’ button. You’ll be prompted to give your new API key a name. Something descriptive like “n8n Integration” works perfectly. You can also set an optional credit limit here, which is a super handy feature for keeping your spending in check.

- Once created, copy the generated API key to your clipboard immediately! This is crucial. You won’t be able to see it again once you navigate away from this page, so make sure you save it somewhere safe (like a password manager or a temporary secure note).

2. Connect OpenRouter to n8n for AI Agents

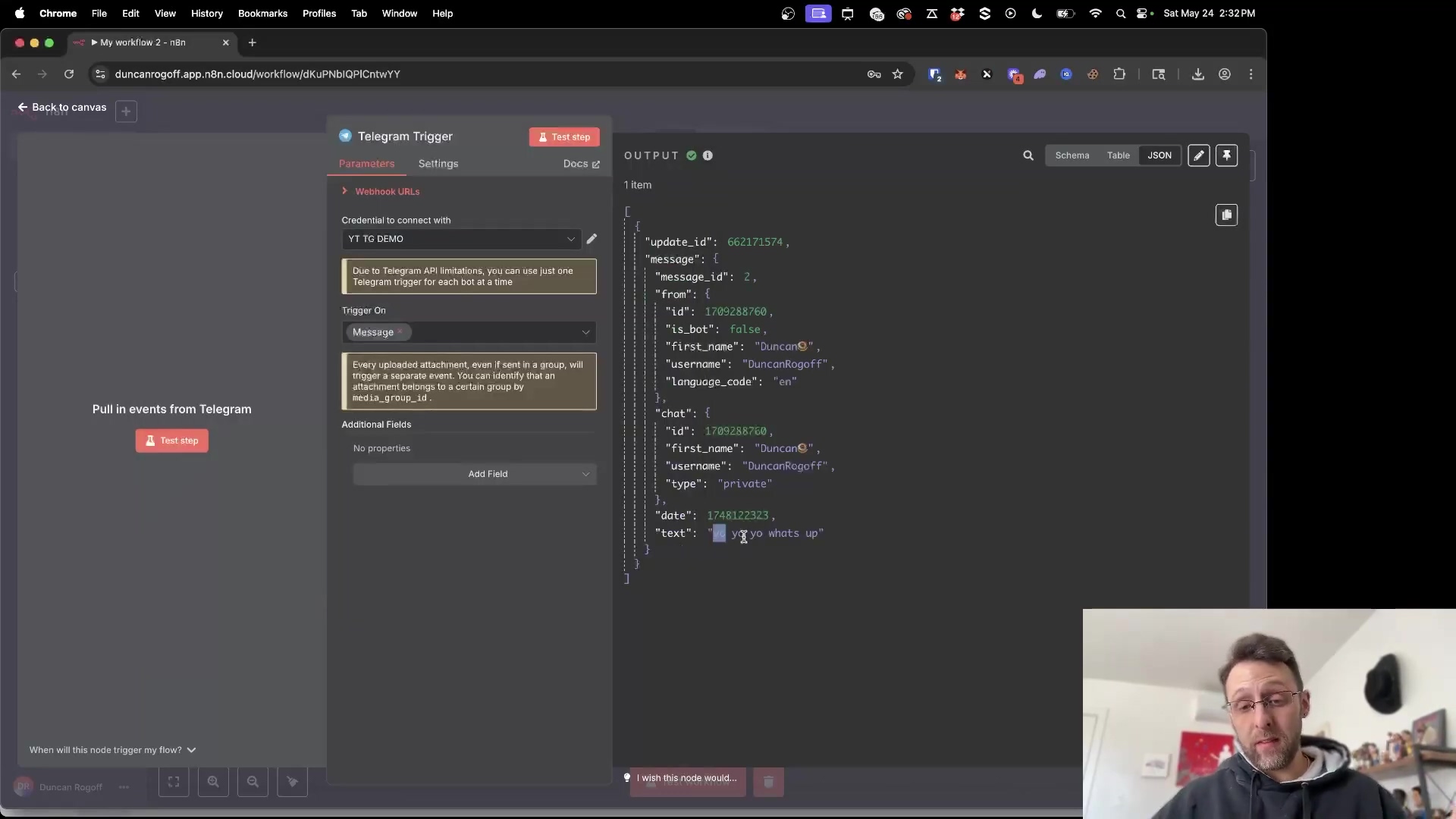

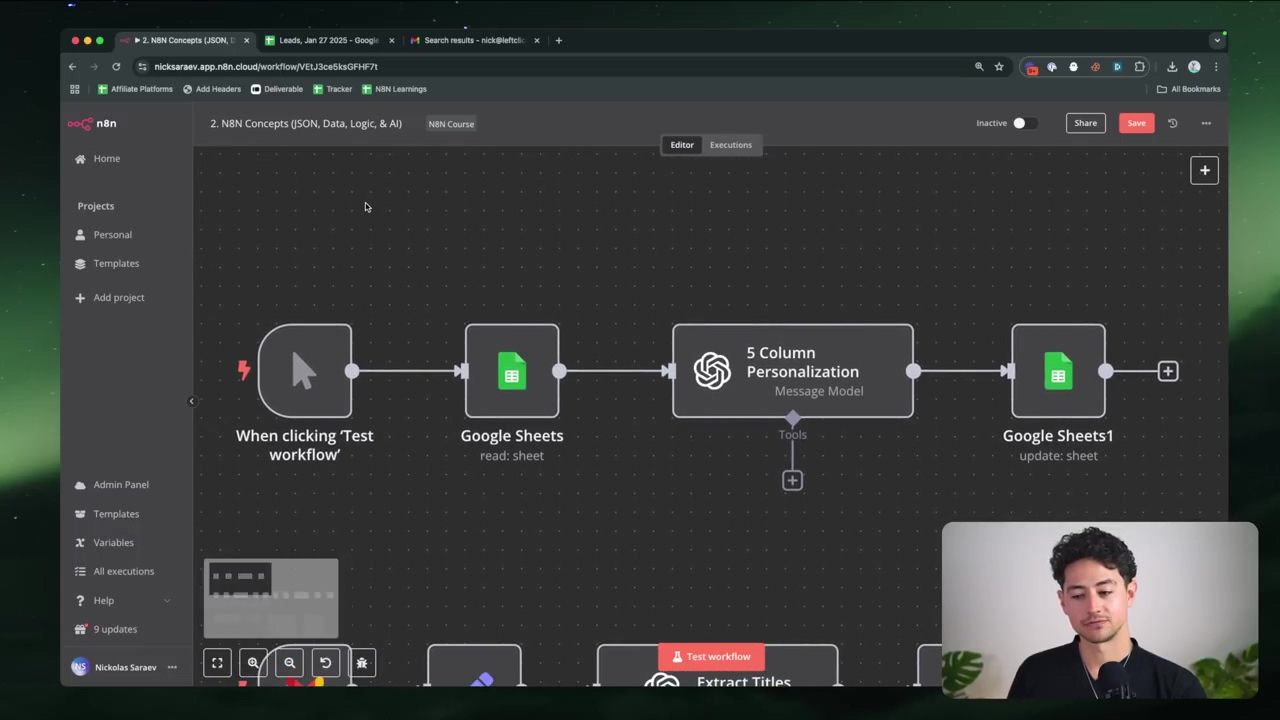

If you’re building AI agents within n8n (think chatbots, smart assistants, etc.), this integration is incredibly seamless. It’s designed to just work.

- In your n8n workflow canvas, add a chat model: open the nodes panel and search for ‘Chat Model’, or click the ‘+’ under the ‘Chat Model’ connection of your AI Agent node.

- From the list of chat models, search for ‘OpenRouter’ and select the ‘OpenRouter Chat Model’.

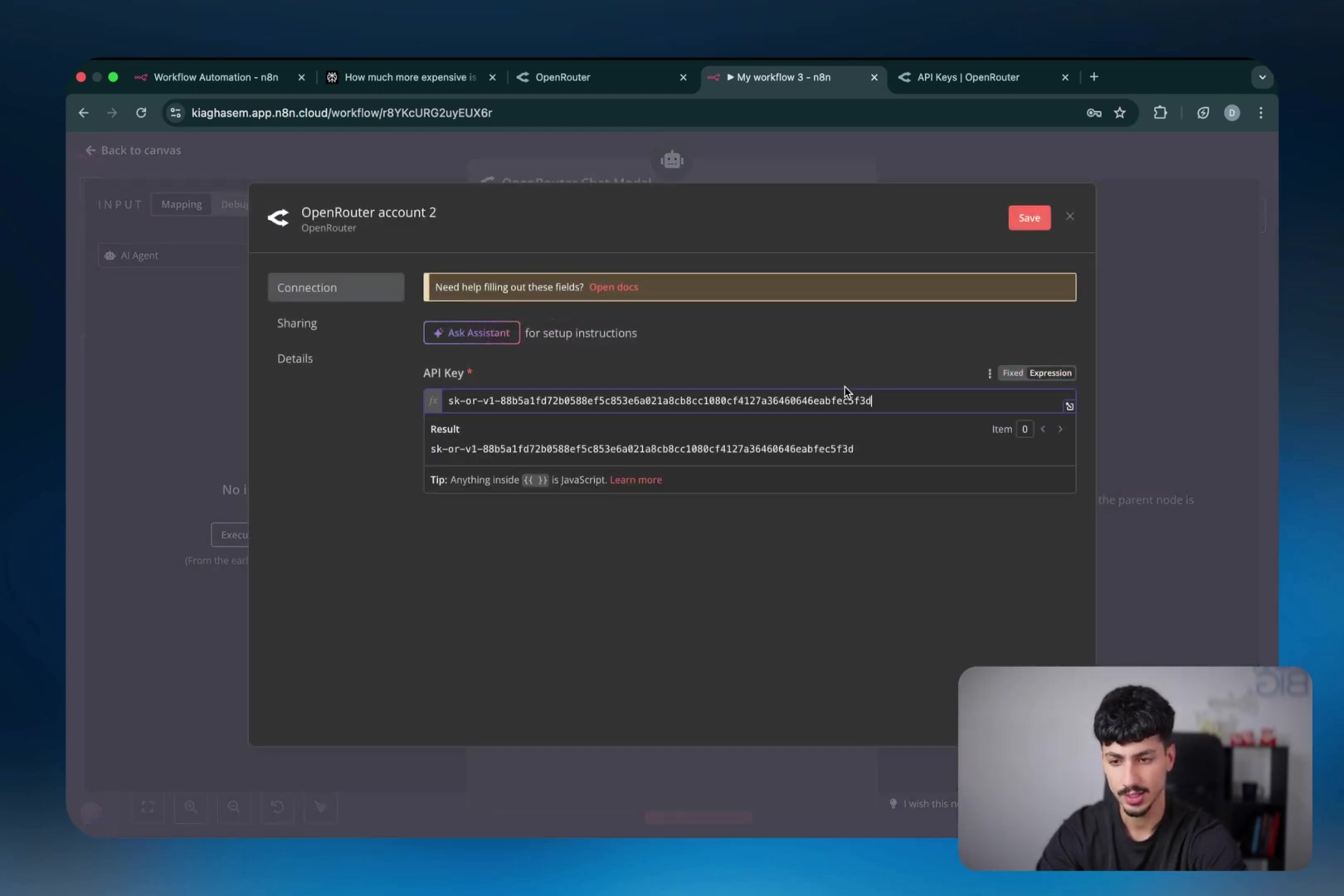

- Now, for the credentials! Click ‘Create New Credential’. This will open a small pop-up or sidebar where you can input your API key.

- Paste the OpenRouter API key you copied earlier into the designated ‘API Key’ field.

- Click ‘Save’.

Expected Feedback: You should see a green checkmark or a success message indicating that the credential was saved. The ‘OpenRouter Chat Model’ node should now show that it’s connected to your new credential.

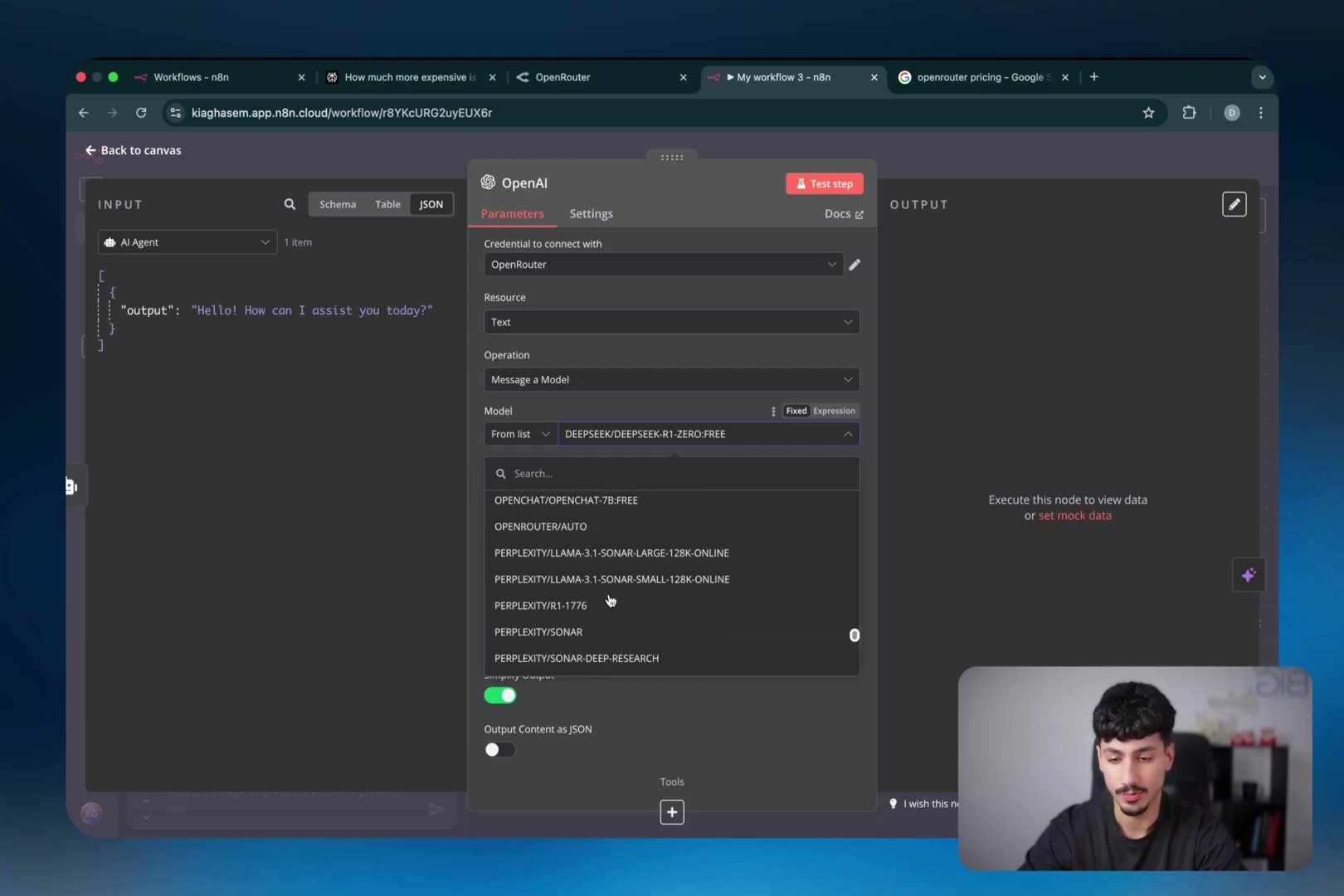

Once connected, you’ll be able to choose from a vast list of LLMs directly within the ‘OpenRouter Chat Model’ node settings. It’s like having a whole library of brains at your disposal to power your AI agent!

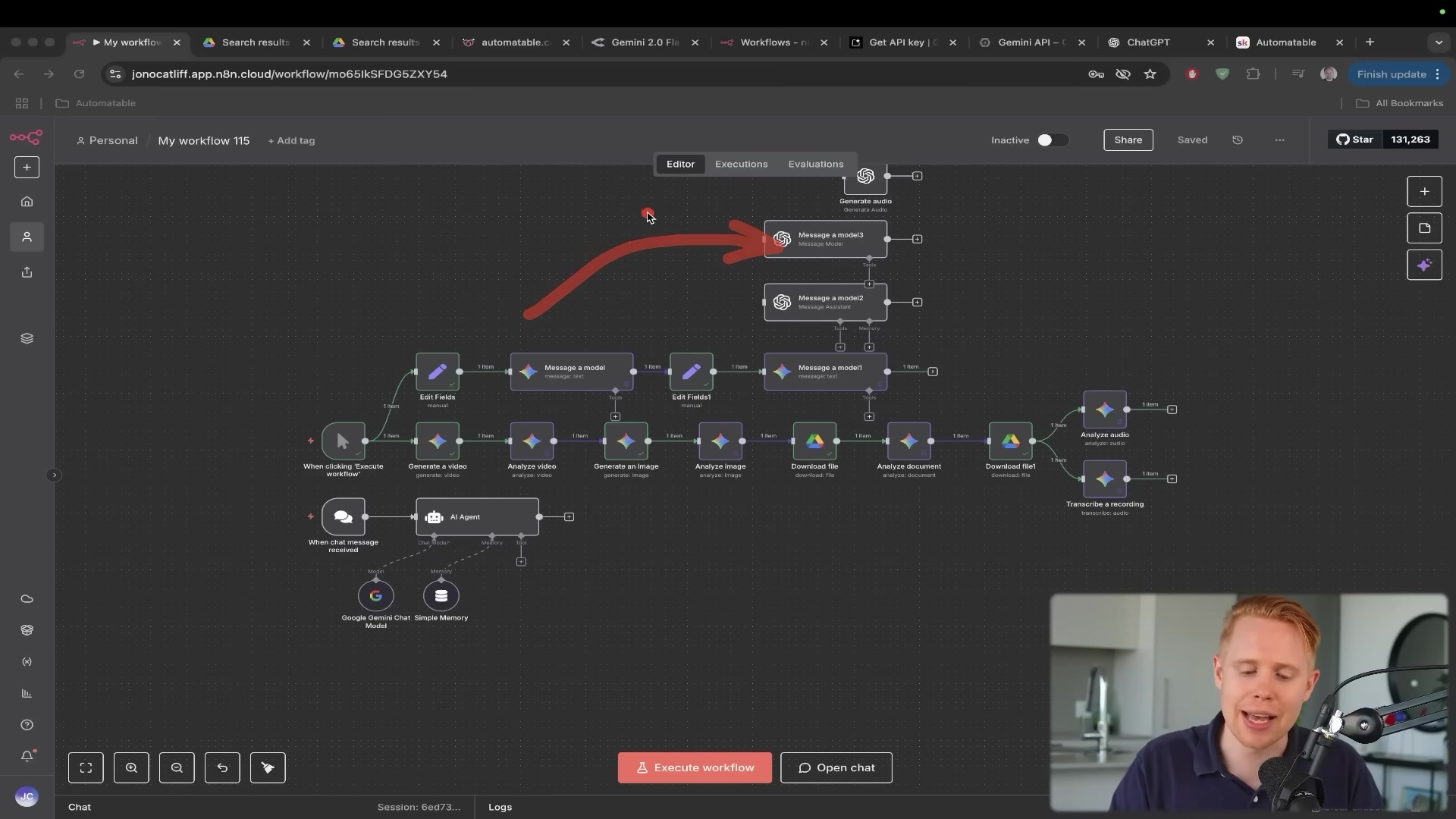

3. Connect OpenRouter for One-Off LLM Messages (OpenAI Node)

What if you just want to send a quick message to an LLM, or use it for a specific task that doesn’t necessarily involve a full-blown AI agent? No problem! You can totally use the standard OpenAI node in n8n and configure it to route through OpenRouter. This is a super flexible trick!

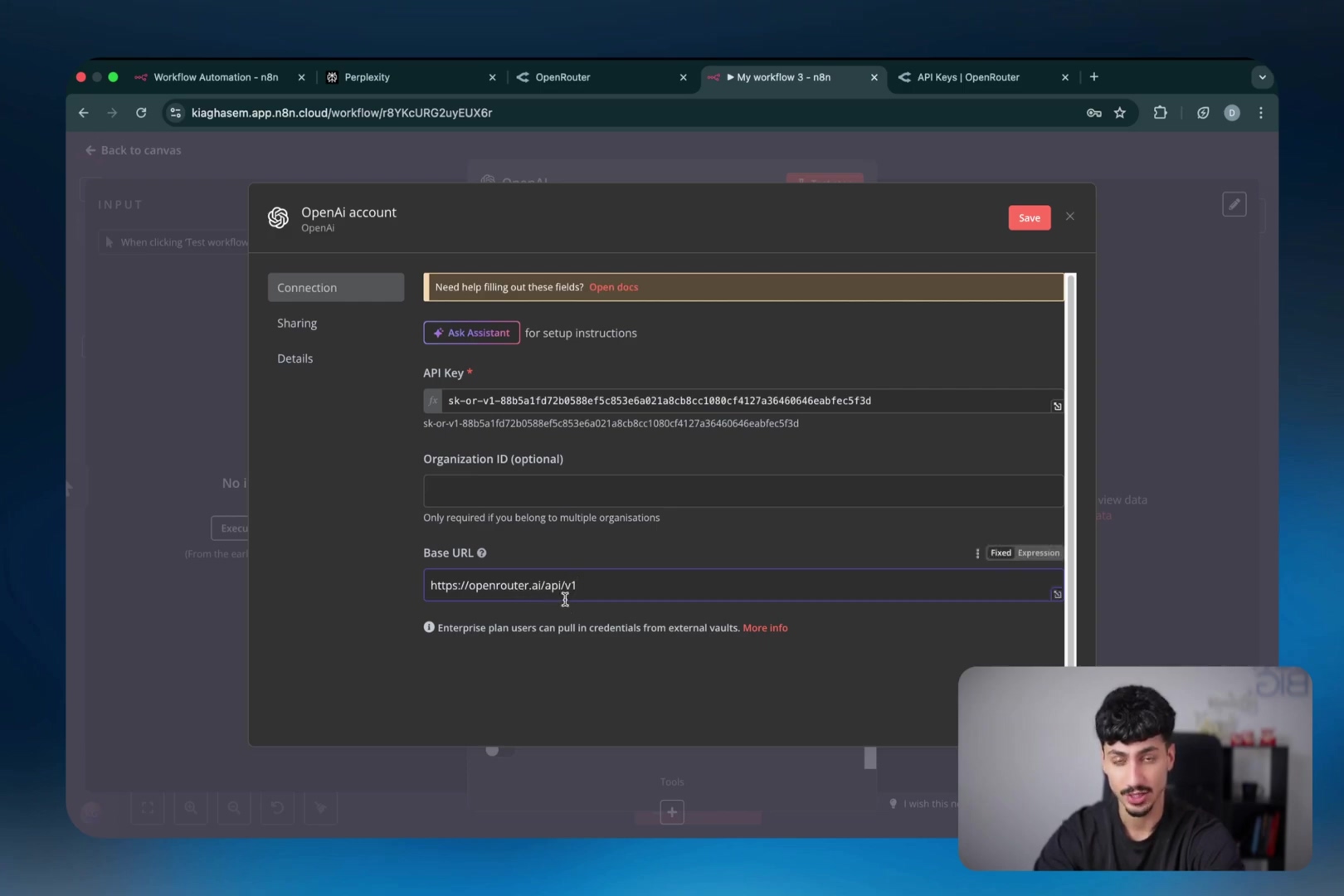

- Add an ‘OpenAI’ node to your n8n workflow. Don’t worry, we’re just borrowing its structure.

- In the node settings, select ‘Message a Model’ as the operation. This is the default, but just double-check.

- Click ‘Create New Credential’. Again, this will open the credential input area.

- You’ll see fields for ‘API Key’, ‘Base URL’, and ‘Organization ID’. Here’s where the magic happens:

- Paste your OpenRouter API key into the ‘API Key’ field. Yes, the same OpenRouter key you used before.

- Crucially, change the ‘Base URL’ to https://openrouter.ai/api/v1. This tells the OpenAI node to send its requests to OpenRouter’s gateway instead of OpenAI’s directly. This is the secret sauce!

- Leave the ‘Organization ID’ field blank. You don’t need it for OpenRouter.

- Click ‘Save’.

Expected Feedback: Similar to before, you should see a success confirmation. Now, when you use this OpenAI node, it will actually be talking to OpenRouter, giving you access to all those models!

In the node’s model dropdown, you can now pick and choose just like you would with a native OpenAI connection, but with the added flexibility of OpenRouter’s vast catalog.
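You don’t need to write any code for this (the OpenAI node builds the request for you), but it helps to see roughly what “OpenAI-compatible” means. Here’s a minimal Python sketch, using only the standard library, of a chat request aimed at the same Base URL. It assumes OpenRouter’s OpenAI-style `/chat/completions` endpoint; the model ID and the `OPENROUTER_API_KEY` variable name are just examples.

```python
import json
import os
import urllib.request

# Same value you paste into the 'Base URL' field of n8n's OpenAI credential.
OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request for OpenRouter."""
    body = {
        "model": model,  # OpenRouter model IDs look like "provider/model-name"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{OPENROUTER_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the same OpenRouter key used in n8n
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENROUTER_API_KEY")  # hypothetical env var name
    if key:
        req = build_chat_request(key, "openai/gpt-4o-mini", "Say hello")  # example model ID
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
    else:
        print("Set OPENROUTER_API_KEY to send a real request.")
```

Only the base URL and the key differ from a direct OpenAI call, which is why switching models comes down to changing the model string.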

Critical Safety & Best Practice Tips

Alright, you’ve got the power! But with great power comes great responsibility, right? Here are some crucial tips to keep your LLM integrations safe, cost-effective, and running smoothly:

💡 API Key Security: This is a big one, folks! Your API keys are like the keys to your digital kingdom. Never, ever expose them directly in public code repositories (like GitHub) or in client-side applications (like a website’s frontend JavaScript). Always, always use environment variables or, even better, n8n’s built-in credential management system. That’s what it’s there for! Treat those keys like gold.
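If you also call OpenRouter from your own scripts outside n8n, the same rule applies. Here’s a minimal Python sketch of reading the key from an environment variable instead of hard-coding it. The `OPENROUTER_API_KEY` name and the `MissingAPIKeyError` class are hypothetical choices for illustration, not anything OpenRouter requires.

```python
import os


class MissingAPIKeyError(RuntimeError):
    """Hypothetical error type raised when no OpenRouter key is configured."""


def load_openrouter_key(var_name: str = "OPENROUTER_API_KEY") -> str:
    """Read the OpenRouter key from the environment rather than from source code."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail loudly instead of silently sending unauthenticated requests.
        raise MissingAPIKeyError(f"Set the {var_name} environment variable first.")
    return key
```

Because the key lives only in the environment, the script itself can be committed to a repository without leaking anything.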

💡 Cost Monitoring: On top of that small OpenRouter fee, token usage itself adds up quickly, especially when you’re experimenting. Regularly check your OpenRouter activity dashboard to monitor your token usage and costs. This is especially important during testing, so you can spot and fix unexpected expenses before they become a problem. Trust me, you don’t want a surprise bill!

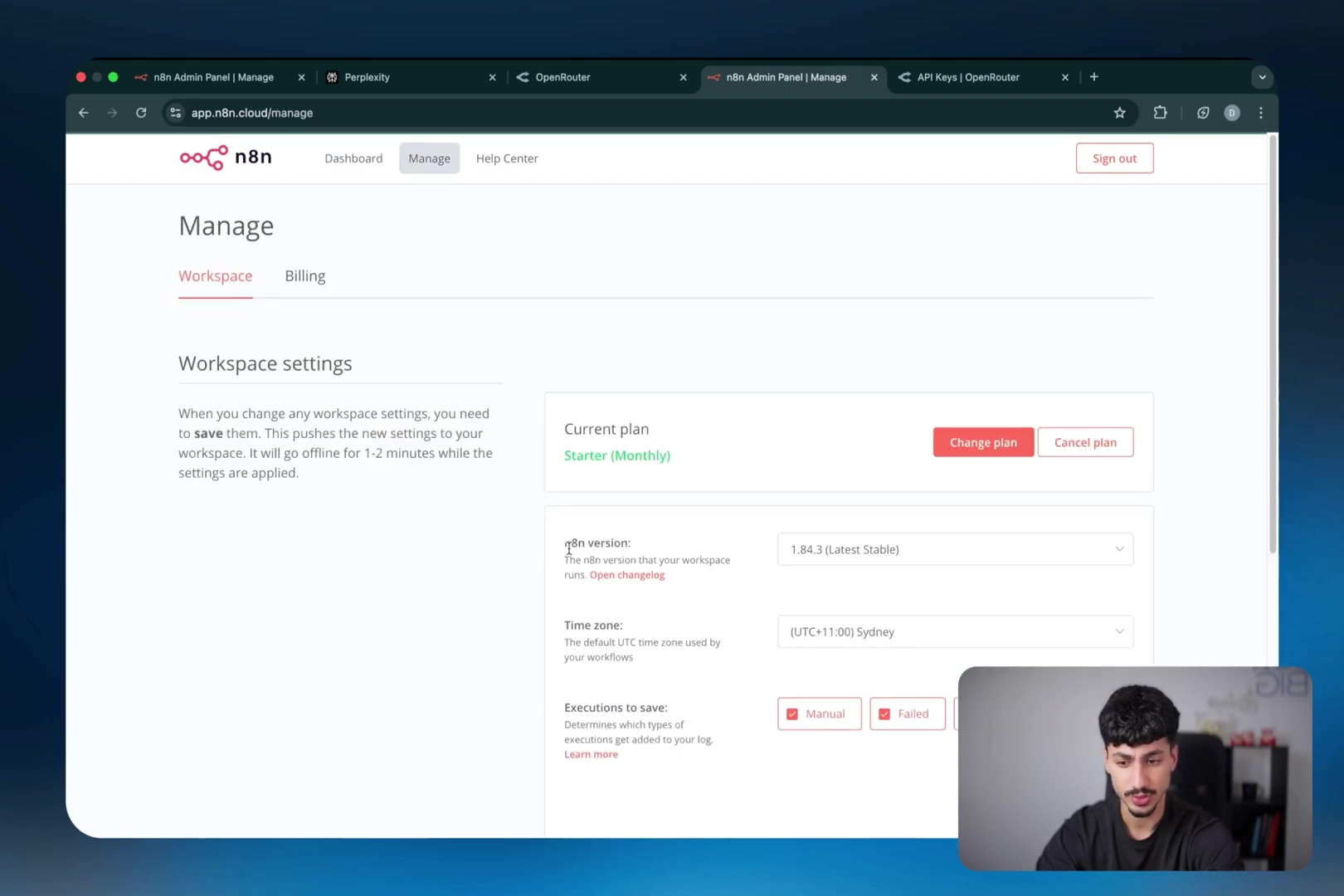
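If you want a rough running estimate between dashboard checks, you can multiply token counts by per-token prices yourself. This Python sketch assumes each response carries an OpenAI-style `usage` object (`prompt_tokens`, `completion_tokens`) and uses made-up prices; OpenRouter’s activity dashboard remains the source of truth for what you’re actually billed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    """USD per million tokens. The numbers used below are placeholders, not real rates."""
    prompt_per_million: float
    completion_per_million: float


def request_cost(usage: dict, price: Price) -> float:
    """Estimate one call's cost from an OpenAI-style `usage` dict."""
    prompt_tokens = usage.get("prompt_tokens", 0)
    completion_tokens = usage.get("completion_tokens", 0)
    return (
        prompt_tokens * price.prompt_per_million
        + completion_tokens * price.completion_per_million
    ) / 1_000_000


def total_cost(usages: list[dict], price: Price, budget: float) -> tuple[float, bool]:
    """Sum estimated costs for many calls; the flag is True once the budget is exceeded."""
    total = sum(request_cost(u, price) for u in usages)
    return total, total > budget


if __name__ == "__main__":
    placeholder = Price(prompt_per_million=1.0, completion_per_million=2.0)
    calls = [{"prompt_tokens": 1_000, "completion_tokens": 500}] * 3
    spent, over = total_cost(calls, placeholder, budget=0.005)
    print(f"${spent:.4f} spent, over budget: {over}")
```

A simple budget flag like this is handy during testing, when a loop or a retry storm can burn through tokens faster than you’d notice in the dashboard.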

💡 Version Management: Technology moves fast, and n8n is no exception! Keep your n8n instance on the latest stable version. This isn’t just about getting cool new features; it also keeps you compatible with the latest OpenRouter integration and gives you the best performance. On n8n Cloud, you can check and update your version via the Admin Panel under ‘Workspace settings’; on a self-hosted instance, you update by pulling a newer Docker image or npm package. It’s usually a quick process, and it keeps everything humming along nicely.

Key Takeaways

Let’s quickly recap the awesomeness we just covered:

- Unified Access: OpenRouter is your one-stop shop, providing a single API key to access a wide range of Large Language Models (LLMs) from various providers. This simplifies integration with platforms like n8n big time.

- Cost Transparency: Centralized tracking of LLM usage and costs across all integrated models simplifies financial oversight for complex workflows. No more guessing where your money went!

- Flexible Integration: Whether you’re building sophisticated AI agents or just need to send a quick message to an LLM, OpenRouter can be integrated into n8n for both, offering incredible versatility in your workflow design.

- Minor Fee, Major Value: Yes, OpenRouter charges a small percentage fee (4-5%), but its benefits in terms of convenience, flexibility, and centralized management often far outweigh this cost, especially during development and testing phases. It’s an investment in your sanity!

- Version Compatibility: Keeping your n8n instance updated ensures you always have access to the latest OpenRouter features and optimal performance. Don’t fall behind!

Conclusion

Integrating OpenRouter with n8n gives developers and businesses a simple way to harness a wide range of Large Language Models. By consolidating API access and centralizing cost management, this approach streamlines your workflow automation and gives you more flexibility and insight into your AI spending. The initial setup is straightforward, and the long-term benefits in efficiency and control are substantial. It’s like upgrading your automation from a bicycle to a rocket ship!

For those embarking on new AI-powered automation projects or seeking to optimize existing ones, OpenRouter provides a robust and user-friendly solution. Consider leveraging this powerful tool to unlock new possibilities in your n8n workflows. I’ve personally seen the difference it makes, and I’m confident you will too.

What are your experiences with integrating multiple LLMs into your automation platforms? Share your insights and tips in the comments below! I’d love to hear from you!

Frequently Asked Questions (FAQ)

Q: Can I use my existing API keys from OpenAI or Anthropic directly with OpenRouter?

A: Yes, you absolutely can! OpenRouter allows you to use your own provider API keys. This means you can centralize your billing and management through OpenRouter while still leveraging your existing accounts. Just be aware that there’s an additional 5% fee deducted from the cost of requests when you use this option.

Q: What if a specific LLM I want to use isn’t available on OpenRouter?

A: While OpenRouter offers access to nearly 100 different models, it’s possible a very niche or brand-new model might not be there yet. In such cases, you would need to integrate that specific LLM directly into n8n using its native API. However, OpenRouter is constantly adding new models, so it’s worth checking their supported models list regularly.

Q: How do I monitor my spending and token usage on OpenRouter?

A: OpenRouter provides a dedicated activity dashboard on their website. After logging in, navigate to your account settings or activity section. Here, you can see detailed breakdowns of your token usage, costs, and credit balance. It’s a great way to keep an eye on your expenses and ensure you’re not overspending, especially during development and testing.

Q: Is n8n free to use, or do I need a paid subscription?

A: n8n offers both a free Community Edition that you can self-host and a paid, cloud-hosted service. The self-hosted edition is source-available under n8n’s fair-code Sustainable Use License (rather than a traditional open-source license), costs nothing beyond your own hosting, and gives you full control, while the cloud service provides convenience and managed infrastructure. You can choose whichever option best fits your needs and technical comfort level.

Q: Can OpenRouter help me compare the performance of different LLMs for a specific task?

A: Absolutely! This is one of OpenRouter’s biggest strengths. Because you can easily switch between models within your n8n workflow (especially using the OpenAI node trick), you can run the same prompt through different LLMs and compare their outputs, speed, and cost. This makes it incredibly efficient to find the best model for your specific automation task without complex reconfigurations.
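Here’s a minimal Python sketch of that side-by-side comparison. The `send` argument is a hypothetical stand-in for whatever actually calls OpenRouter (a direct HTTP request, or an n8n workflow you trigger), and the sketch assumes OpenAI-style responses with `choices` and `usage` fields. Passing `send` in as a function keeps the comparison logic testable without spending credits.

```python
import time
from typing import Callable

# Hypothetical signature: takes (model, prompt), returns an OpenAI-style response dict.
SendFn = Callable[[str, str], dict]


def compare_models(prompt: str, models: list[str], send: SendFn) -> list[dict]:
    """Run one prompt through several models and collect output, latency, and token counts."""
    results = []
    for model in models:
        start = time.perf_counter()
        response = send(model, prompt)
        elapsed = time.perf_counter() - start
        usage = response.get("usage", {})
        results.append({
            "model": model,
            "output": response["choices"][0]["message"]["content"],
            "seconds": round(elapsed, 3),
            "total_tokens": usage.get("total_tokens", 0),
        })
    return results
```

Pair the token counts with a per-model price and you have output quality, speed, and cost for each model in one table.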