
Table of Contents


- Understanding Grok 4: The Next Generation of AI

- Grok 4 Performance Benchmarks

- Practical Applications and Industry Impact

- Integrating Grok 4 with n8n for AI Automation

- Key Takeaways for Implementing Grok 4

- Conclusion

- Frequently Asked Questions (FAQ)

- Q: What is the main advantage of Grok 4 over other AI models?

- Q: Why would I use OpenRouter instead of directly integrating Grok 4 with n8n?

- Q: How can I optimize costs when using Grok 4, since it’s not the cheapest model?

- Q: What does ‘parameters’ mean in the context of AI models like Grok 4?

- Q: Grok 4’s speed can vary. How should I design my n8n automations to account for this?

Understanding Grok 4: The Next Generation of AI

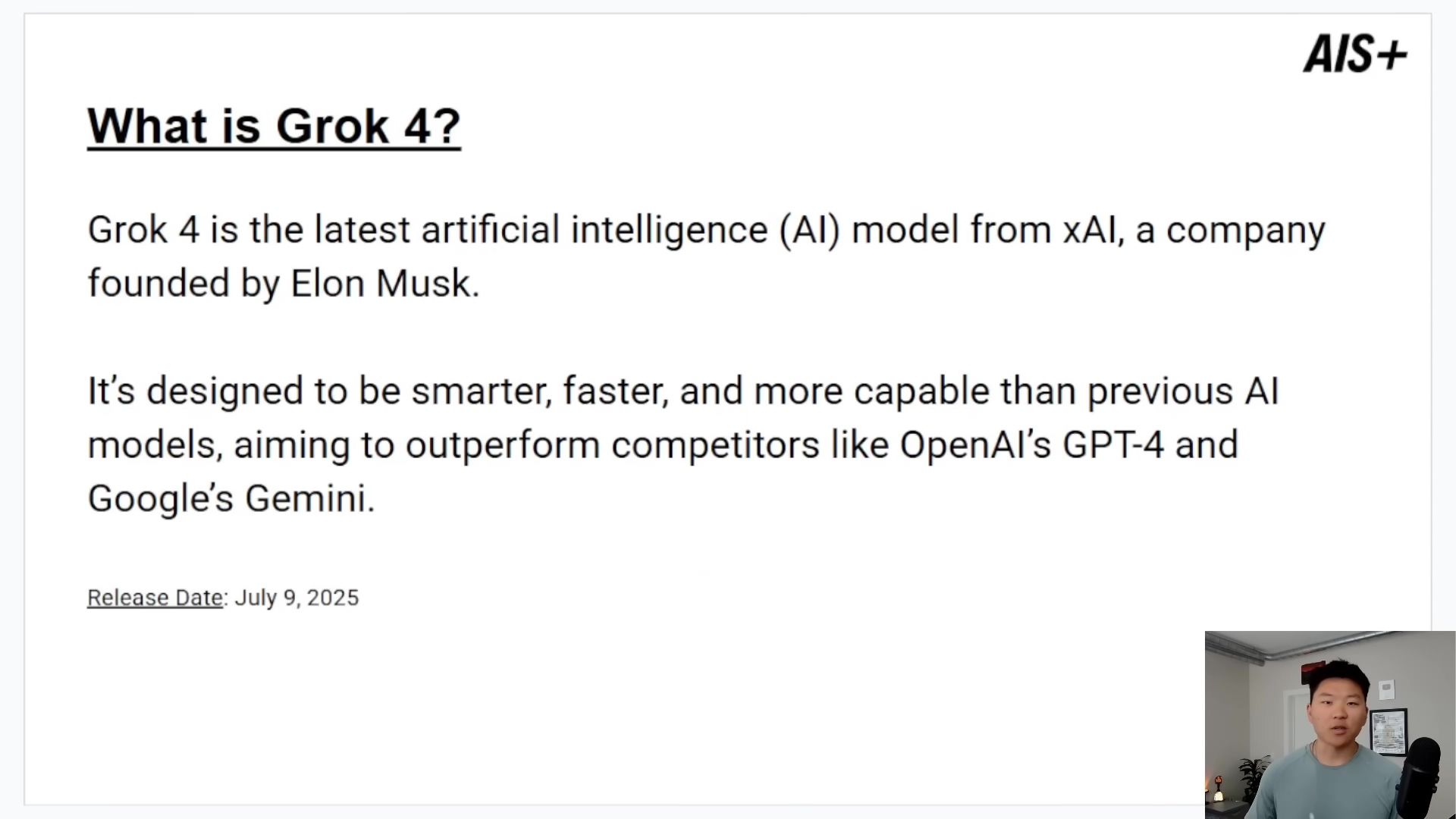

So, what’s the big deal with Grok 4? Imagine a super-smart alien brain that just landed on Earth, ready to help us automate everything. That’s kind of what Grok 4 is! It was officially launched on July 9, 2025, by xAI, Elon Musk’s AI company. Their goal? To make AI models like OpenAI’s GPT-4 and Google’s Gemini look like flip phones next to a smartphone. Pretty ambitious, right? Let’s see why people are calling it a game-changer.

Unprecedented Scale

First off, Grok 4 is HUGE. We’re talking about a reported 1.7 trillion parameters. (xAI hasn’t published an official count, and neither have most of its competitors, so treat the figures below as estimates.) Now, what are parameters? Think of them as the ‘knowledge points’ or ‘connections’ in the AI’s brain. The more parameters, generally, the more it can learn and understand. To give you some perspective, let’s look at how it stacks up against some other big players:

| Model | Parameters (approx.) |
|---|---|
| Grok 4 | 1.7 trillion |
| GPT-4 | 1.8 trillion |
| Gemini Ultra | 1 trillion |
| Claude 4 | 500 billion |
| Local AI Model | 1.5 billion |

This massive scale is a big reason why it’s so smart and good at solving problems. It’s like having a library the size of a planet!
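To make those parameter counts a little more tangible, here’s a back-of-the-envelope sketch of how much memory it would take just to store the weights. It assumes 2 bytes per parameter (16-bit precision), which is my assumption and not something any vendor has confirmed, and it uses the approximate counts from the table above.

```python
def weight_memory_gb(parameters: float, bytes_per_parameter: int = 2) -> float:
    """Approximate memory needed to hold the weights alone, in GB (10**9 bytes)."""
    return parameters * bytes_per_parameter / 1e9


# Approximate, unofficial parameter counts taken from the table above.
reported_sizes = {
    "Grok 4": 1.7e12,
    "GPT-4": 1.8e12,
    "Gemini Ultra": 1e12,
    "Claude 4": 500e9,
    "Local AI Model": 1.5e9,
}

for name, params in reported_sizes.items():
    # Assumes 16-bit weights; real deployments may use other precisions.
    print(f"{name}: ~{weight_memory_gb(params):,.0f} GB of weights")
```

Under that assumption, the small local model fits on a laptop, while the trillion-parameter models need a rack of specialized hardware. That gap is why you reach them through an API.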

Superior Intelligence

Now, while we should always take claims with a grain of salt (or a whole shaker, sometimes!), Grok 4 is said to have capabilities that are on par with, or even better than, someone with a PhD in various subjects. It’s truly amazing at grasping really complex ideas and spitting out sophisticated, well-thought-out answers. It’s not just memorizing stuff; it’s understanding it.

Enhanced Speed and Efficiency

And here’s the kicker: Grok 4 isn’t just smart; it’s built for speed too. It’s designed to handle multiple tasks at the same time, which is super handy for us automators. Whether you’re a solo entrepreneur or working for a huge company, that matters. Think about real-time AI agents or those really complex automation workflows you’ve been dreaming about: Grok 4 puts them within reach. Just keep in mind that real-world response times vary with server load, which we’ll get to in the cost and speed section below.

Grok 4 Performance Benchmarks

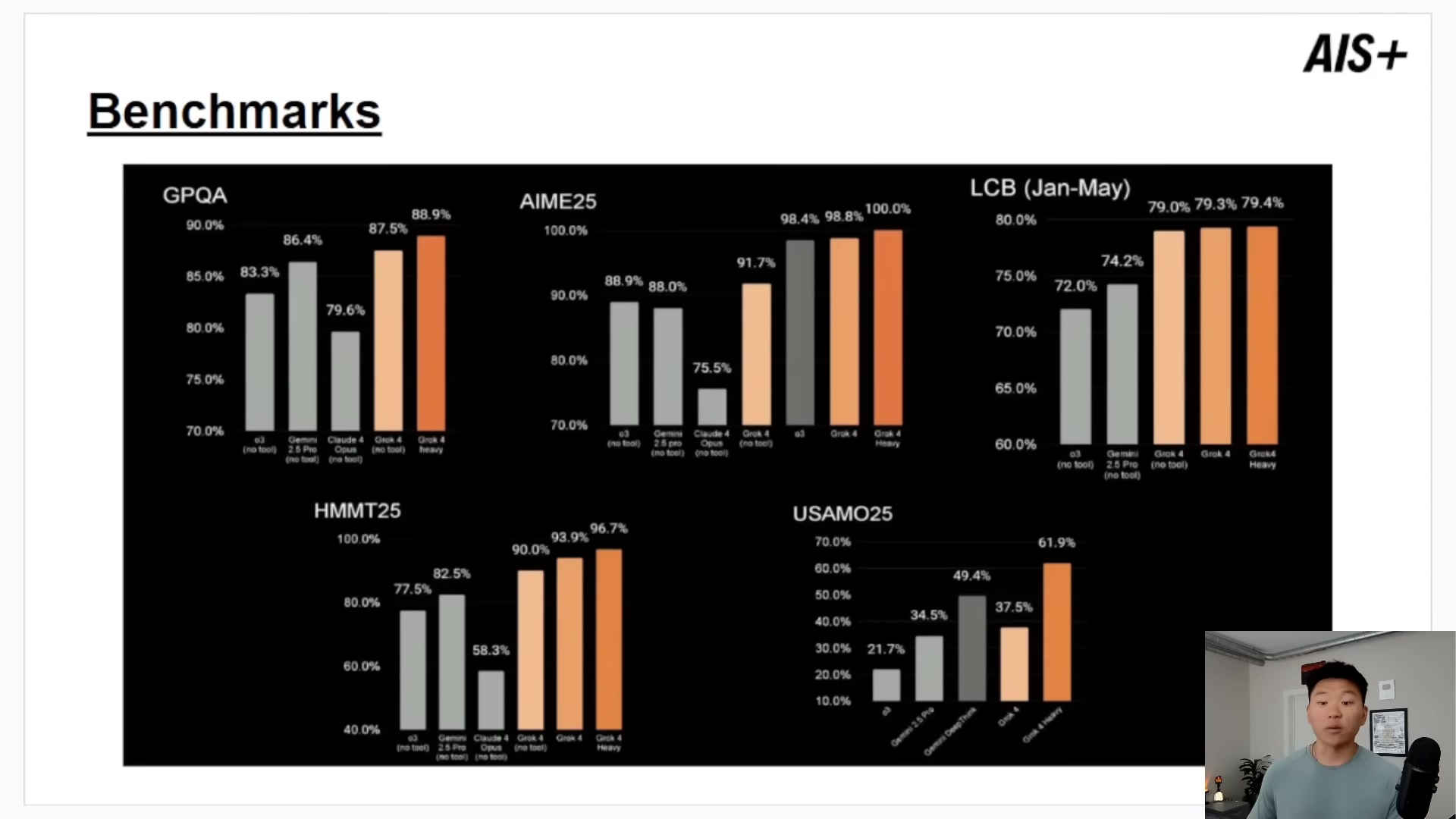

Okay, so we’ve heard the hype. But how does Grok 4 really perform? To get a clear picture, we need to look at some objective tests. These benchmarks compare Grok 4 against other top-tier, closed-source models. And let me tell you, the results are pretty impressive.

Humanity’s Last Exam (HLE)

This one sounds dramatic, right? Humanity’s Last Exam (HLE) is a super tough test designed to challenge even the smartest humans (and AIs!) with questions from math, science, and the humanities. Grok 4 scored 25% without any tools, but get this: in ‘multi-agent mode’ (several Grok agents working on the problem in parallel, with access to tools), its score jumped to 44.4%! That beat the previous top AI models from OpenAI and Google. It’s like it brought its own calculator, encyclopedia, and study group to the test!

Specialized Academic Benchmarks

Beyond the HLE, Grok 4 also shines in specific academic areas:

- GPQA (Graduate-Level Google-Proof Q&A): This benchmark asks graduate-level questions in biology, physics, and chemistry. Grok 4 nailed it with an 87-88% score. To put that in perspective, Google Gemini got 86%, and Anthropic’s Claude 4 Opus scored 79%. This really shows its deep understanding of advanced scientific concepts. Pretty cool, huh?

- AIME (American Invitational Mathematics Examination): This is a seriously challenging math competition for high school students. AI models usually struggle with complex math, but Grok 4 scored an incredible 95%! This is a huge deal and points to Grok 4’s advanced problem-solving skills in an area where AIs often stumble.

- SWE-bench (Software Engineering Benchmark): For all you coders out there, this one’s for you. SWE-bench evaluates models on real-world software tasks drawn from actual GitHub issues. Grok 4 scored 72-75%, just slightly ahead of other top AI models. That makes it a strong choice for programming and development work. Think of it as your super-smart coding buddy!

Practical Applications and Industry Impact

So, what does all this mean for us? Grok 4’s amazing abilities mean it can be used in tons of different industries, totally changing how businesses work and innovate. Its ability to team up with other tools and understand complex data makes it incredibly versatile. It’s like a Swiss Army knife for AI!

Key Application Areas

- Business: Imagine automating those boring risk assessments, predicting future trends, or generating reports in a flash. Grok 4 can do it.

- Healthcare: It can help doctors with diagnoses or even speed up the discovery of new medicines. That’s life-changing stuff!

- Education: Need a personalized tutor or custom learning materials? Grok 4 can be your super teacher.

- Programming: It can help you write code, review your existing code, and even write documentation. My personal favorite, honestly!

- Customer Service: Powering smart chatbots that can handle tricky questions and even understand how a customer is feeling. No more endless hold music!

Integrating Grok 4 with n8n for AI Automation

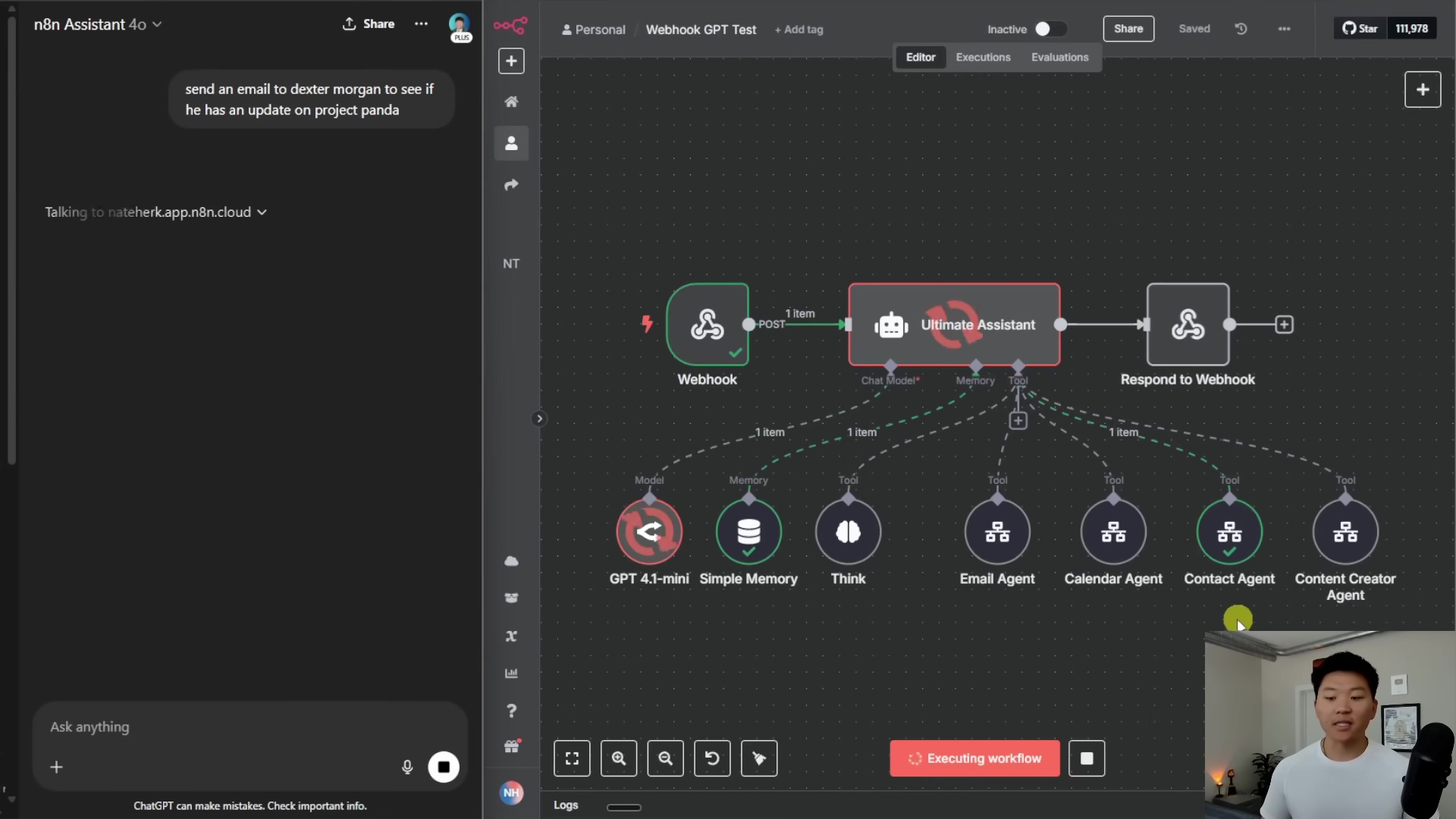

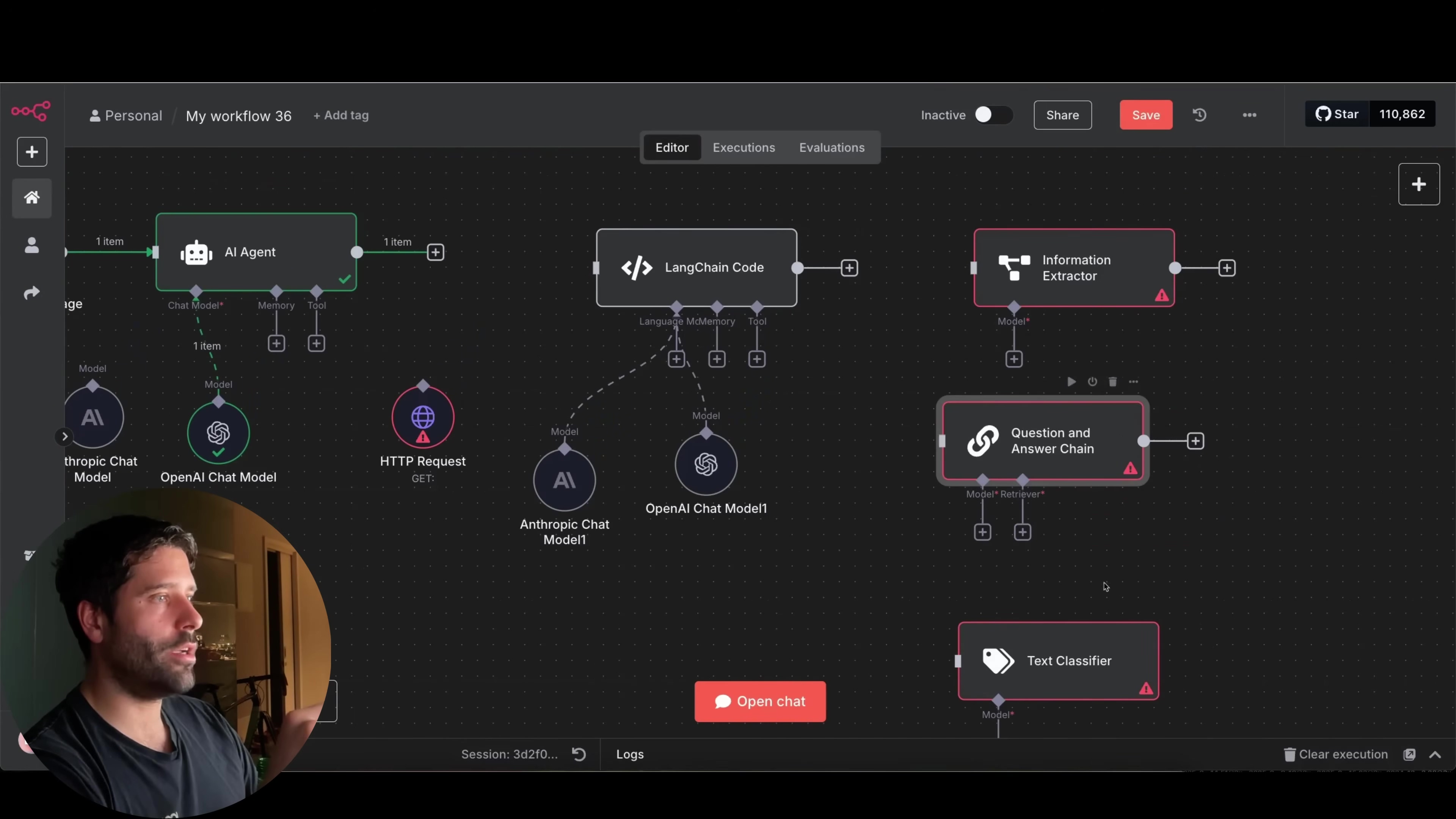

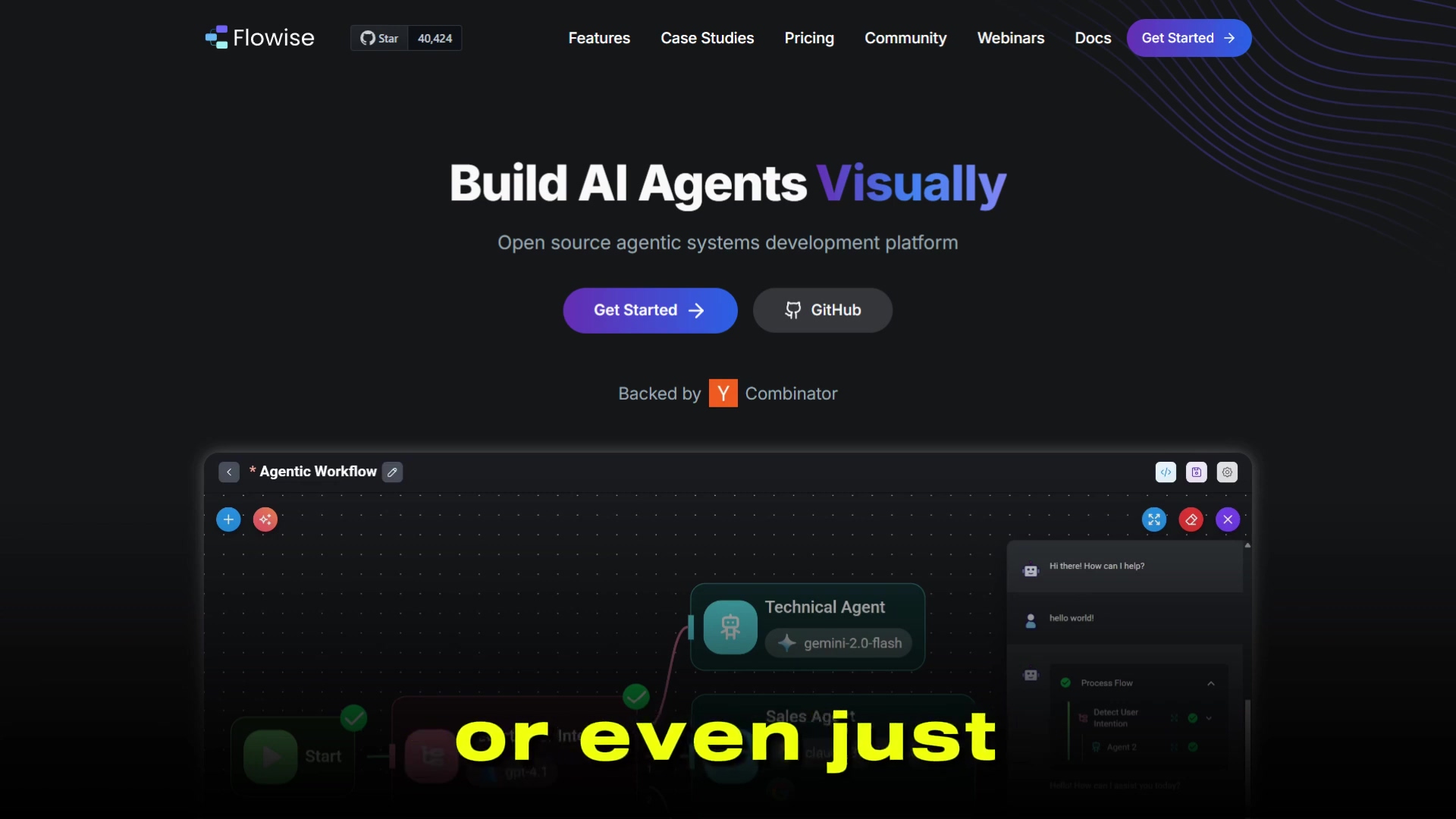

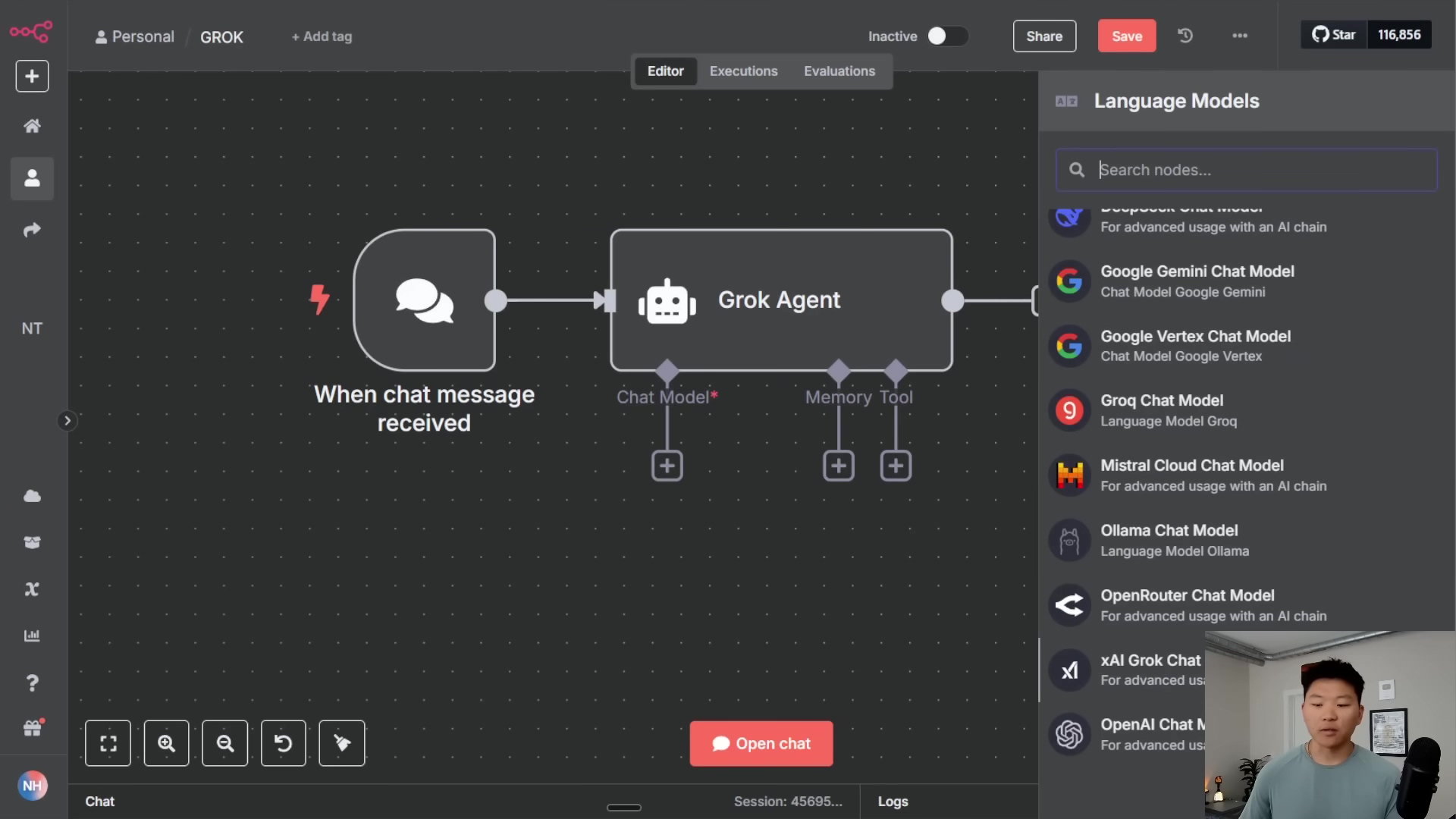

Alright, this is where the rubber meets the road! n8n is my go-to for workflow automation – it’s like the central nervous system for your digital tasks. And guess what? It’s the perfect platform to plug in Grok 4’s brainpower. This integration can seriously level up your existing automation workflows. Let’s get to it!

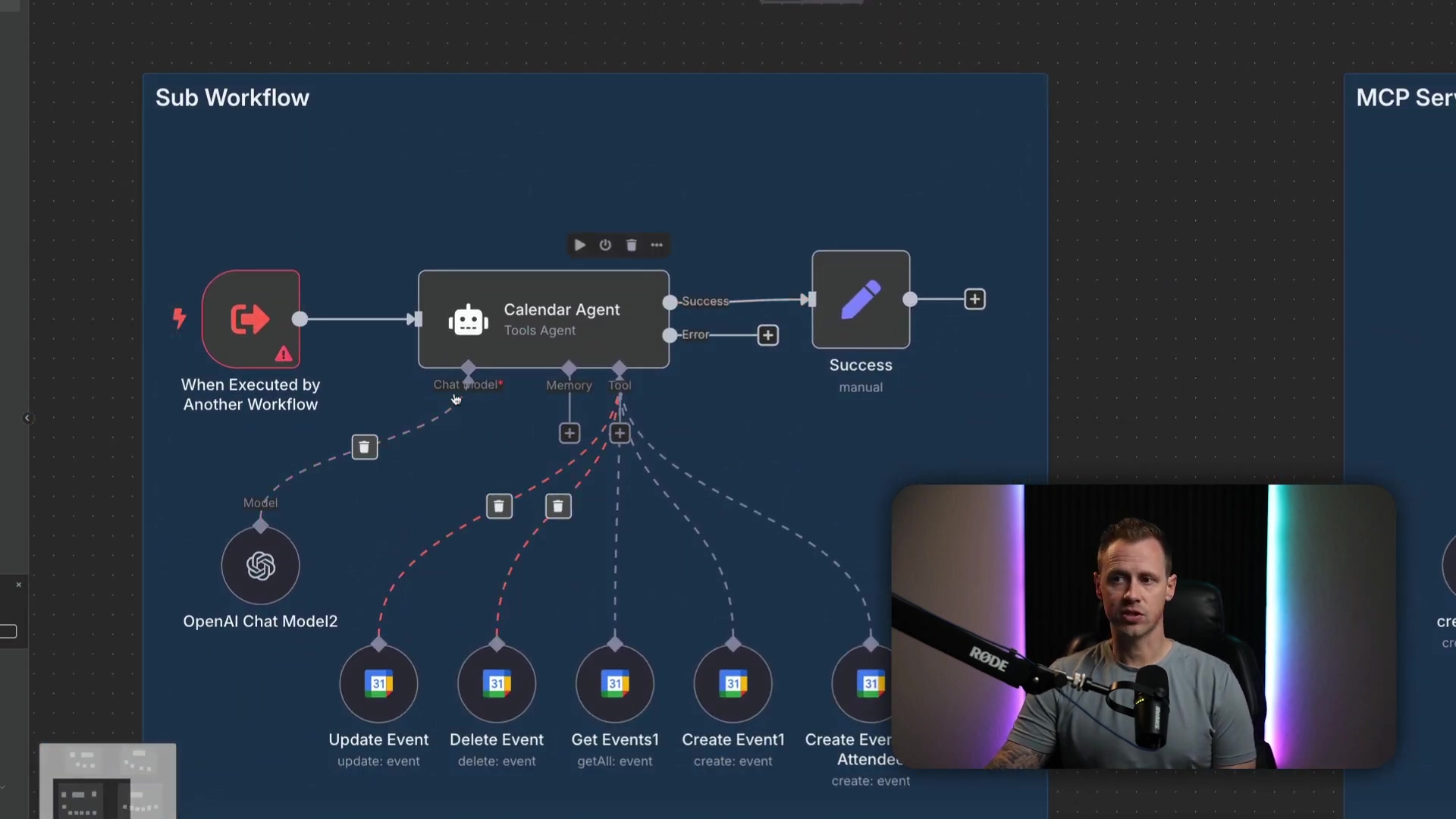

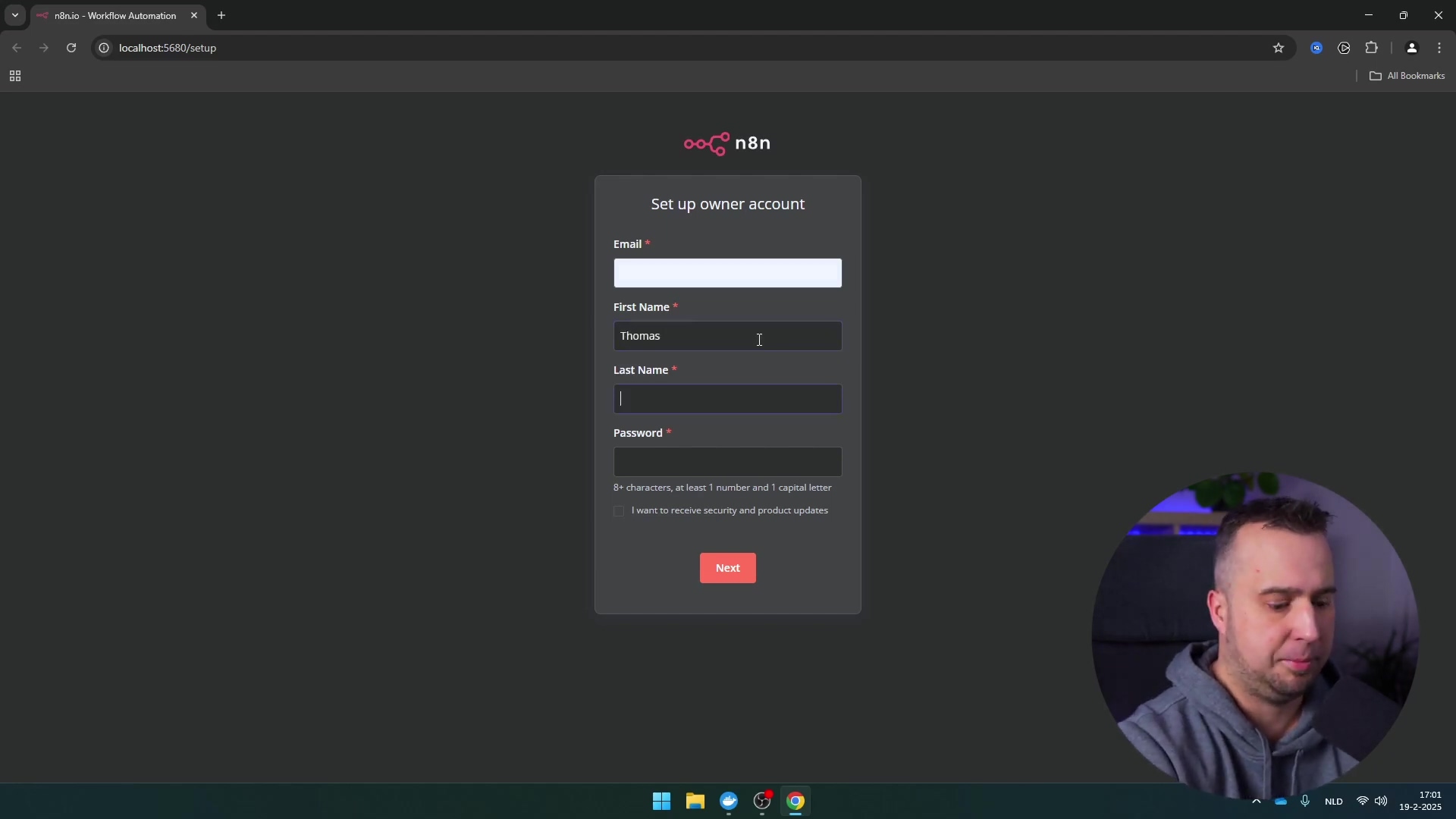

Direct Integration via xAI Grok Chat Model

The easiest way to get Grok 4 talking to n8n is by adding the ‘xAI Grok Chat Model’ node directly to your AI agent workflow. It’s pretty straightforward, but you’ll need one key thing: an API key. Think of an API key as a secret handshake that lets n8n and Grok 4 recognize each other.

Step 1: Get Your Grok API Key

First, you’ll need to head over to the xAI console, which is the admin console for the Grok API. This is where you manage your account, billing, and settings. Look for a section related to ‘API Keys’ or ‘Developer Settings’. If you’re not sure where to find it, check xAI’s official documentation for ‘how to generate an API key’.

Step 2: Add the API Key to n8n

Once you have that shiny new API key, jump into your n8n instance. You’ll need to add it as a new credential. Here’s how:

- In n8n, click on ‘Credentials’ in the left-hand sidebar. (If you don’t see it, you might need to expand the menu.)

- Click ‘New Credential’.

- Search for ‘xAI Grok Chat’ or ‘Grok’.

- Select it and paste your API key into the designated field. Give it a name you’ll remember, like ‘My Grok 4 API Key’.

- Click ‘Save’.

Expected Feedback: You should see a confirmation that your credential has been saved successfully. If there’s an error, double-check your API key – sometimes a stray space or character can mess things up.
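If you ever handle the key in your own scripts (for example, before pasting it into a secrets manager), a tiny sanity check can catch the stray-space problem early. This is a minimal sketch; `clean_api_key` is a hypothetical helper of mine, not part of n8n or the xAI tooling, and it deliberately makes no assumptions about what a valid key looks like beyond “no whitespace”.

```python
def clean_api_key(raw: str) -> str:
    """Trim surrounding whitespace and reject keys with whitespace inside."""
    key = raw.strip()
    if not key:
        raise ValueError("API key is empty")
    if any(ch.isspace() for ch in key):
        raise ValueError("API key contains whitespace; re-copy it from the console")
    return key


# Example: a key copied with a trailing newline is cleaned up silently.
print(clean_api_key("  example-key-123\n"))
```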

Step 3: Select Grok 4 in Your Workflow

Now, when you’re building your n8n workflow and you add an ‘AI Agent’ node or a ‘Chat Model’ node, you’ll be able to select Grok 4 as your chat model. Make sure you pick the ‘0709 version’ – that’s the one that launched on July 9th, our super-smart friend!
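Curious what n8n is roughly doing for you behind that node? Here’s a hedged sketch of a raw chat request. It assumes xAI exposes an OpenAI-style chat completions endpoint at `https://api.x.ai/v1/chat/completions` and that the ‘0709 version’ corresponds to the model ID `grok-4-0709`; double-check both against xAI’s current docs. The helper names are mine, and nothing is sent until you call `send` yourself.

```python
import json
import urllib.request

# Assumptions: verify the endpoint and model ID in xAI's documentation.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"
MODEL_ID = "grok-4-0709"


def build_chat_request(api_key: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout_seconds: float = 120) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout_seconds) as response:
        body = json.load(response)
    # Assumes an OpenAI-style response shape.
    return body["choices"][0]["message"]["content"]


# Usage (needs a real key and network access):
# print(send(build_chat_request("YOUR_API_KEY", "Say hello in five words.")))
```

In n8n you never write this yourself; the credential from Step 2 supplies the key and the node builds the request.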

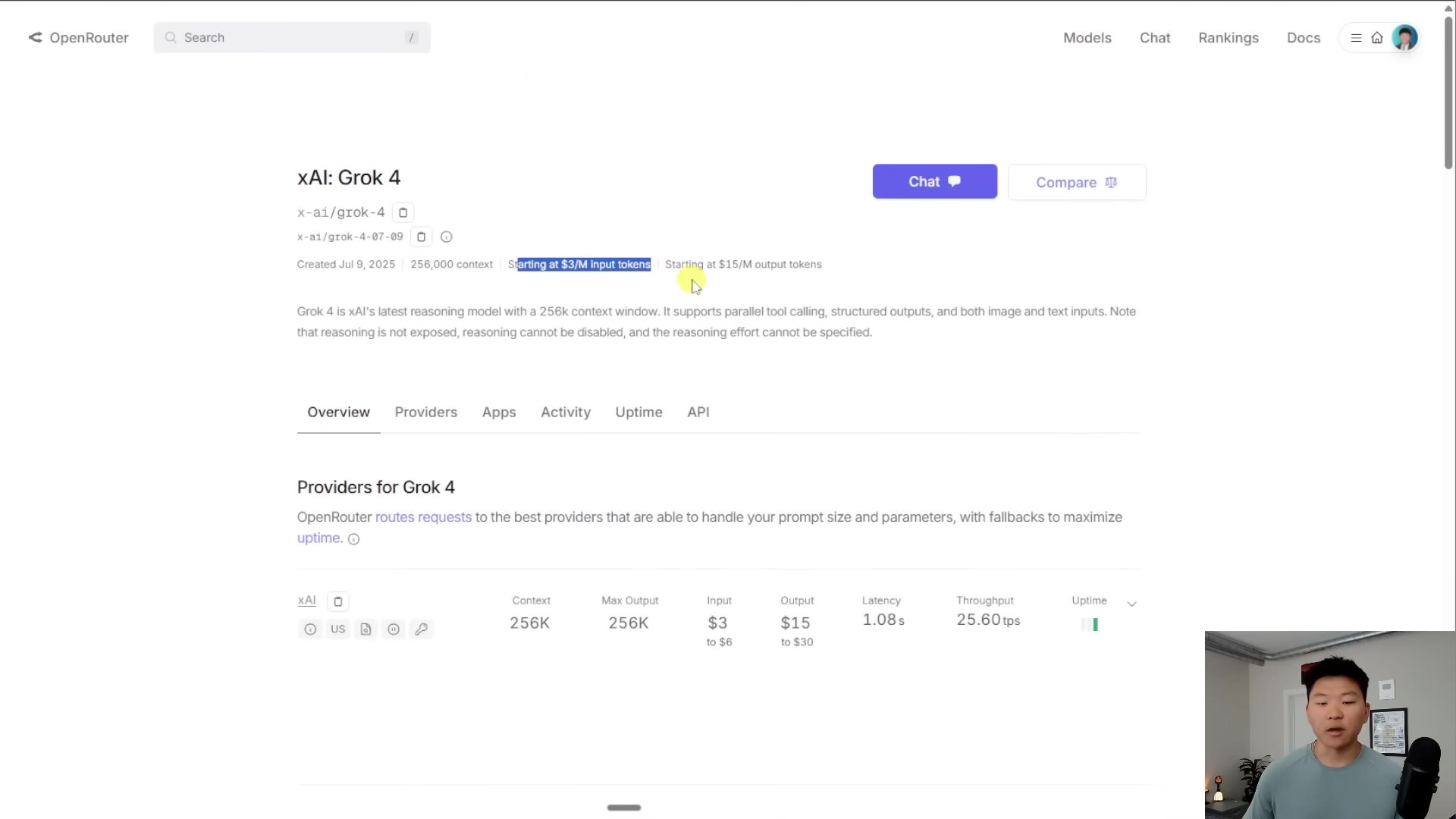

Enhancing Tool Calling with OpenRouter

Okay, so direct integration is great for simple chat tasks. But what if your AI agent needs to do more complex stuff, like using Perplexity for research or Tavily for web search and scraping? Sometimes, these ‘tool calls’ can get a bit messy, especially when dealing with how the AI parses complex data (like JSON outputs) from different tools. This is where a routing service like OpenRouter comes in super handy.

Think of OpenRouter as a universal adapter for all your AI models and tools. It sits in the middle, allowing you to connect to Grok 4 (and many other models!) while also centralizing your billing and analytics. This approach can really smooth out those parsing issues, especially when your AI agent is juggling lots of different tools and their unique data formats.

Why use OpenRouter?

- Flexibility: Connect to Grok 4 and a whole bunch of other models through one service.

- Centralized Billing: No more juggling multiple API keys and bills. Everything’s in one place.

- Tool Argument Parsing: This is the big one. OpenRouter normalizes requests and responses into one consistent API format across models, which in practice makes the tool calls in your multi-tool workflows much more reliable.
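To show what ‘tool argument parsing’ actually means, here’s a small sketch. In OpenAI-style APIs (which OpenRouter follows), the model returns each tool call’s arguments as a JSON string, and that string is where things tend to break. The code below is not what OpenRouter or n8n runs internally; it’s just my illustration of the problem. The `web_search` tool and the `x-ai/grok-4` model slug are assumptions for the example.

```python
import json

# Hypothetical request body; the model slug and tool are illustrative assumptions.
example_request = {
    "model": "x-ai/grok-4",
    "messages": [{"role": "user", "content": "Find recent n8n release notes."}],
    "tools": [{
        "type": "function",
        "function": {
            "name": "web_search",
            "description": "Search the web for a query.",
            "parameters": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        },
    }],
}


def parse_tool_arguments(tool_call: dict) -> dict:
    """Turn a tool call's JSON-string arguments into a dict, or fail clearly."""
    raw = tool_call.get("function", {}).get("arguments", "")
    if isinstance(raw, dict):  # some clients already decode the arguments
        return raw
    try:
        parsed = json.loads(raw or "{}")
    except json.JSONDecodeError as exc:
        raise ValueError(f"Tool arguments are not valid JSON: {raw!r}") from exc
    if not isinstance(parsed, dict):
        raise ValueError(f"Tool arguments must be a JSON object, got: {raw!r}")
    return parsed


good_call = {"function": {"name": "web_search", "arguments": '{"query": "n8n release notes"}'}}
print(parse_tool_arguments(good_call))
```

When the arguments come back truncated or malformed, a clear error like this beats a workflow that silently passes garbage to the next tool.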

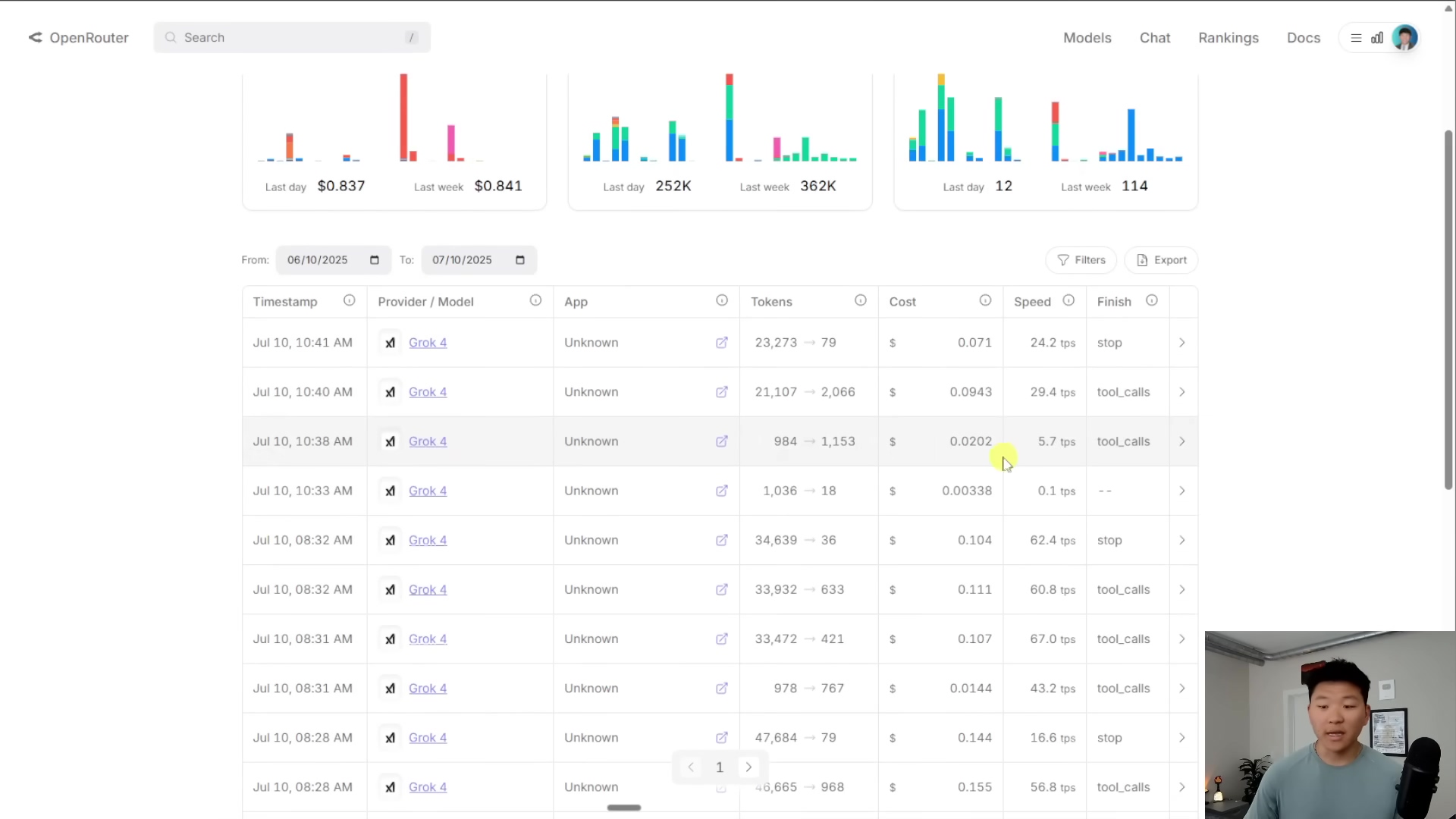

Cost and Speed Considerations

Alright, let’s talk brass tacks: cost and speed. Grok 4 is powerful, no doubt, but it’s not the cheapest model on the block. And its speed? Well, it can be a bit like rush hour traffic – sometimes fast, sometimes slow, depending on how many people are using it at the same time. This is what we call ‘server load’.

Complex workflows, especially those involving extensive research and multiple tool calls (like our Perplexity and Tavily example), can rack up higher costs. Why? Because they process a lot more ‘tokens’. What are tokens? Think of them as chunks of text: words, parts of words, or even punctuation. The more tokens processed, the more it costs, just like paying for data usage on your phone.

For instance, a big research task using both Perplexity and Tavily with Grok 4 might cost around $0.12 and take several minutes to complete. The speed can really jump around based on the time of day and how busy Grok 4’s servers are. It’s a bit like a cosmic lottery!
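The cost arithmetic itself is simple: tokens times price. Here’s a quick sketch. The per-million-token prices and the token counts below are placeholders I picked for illustration (chosen so the total lands near the figure above); they are not measurements from a real run, so plug in the current prices from your provider’s pricing page.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the cost of one run from token counts and per-million-token prices."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Placeholder numbers for illustration only.
cost = estimate_cost_usd(
    input_tokens=25_000,           # prompts plus tool results fed to the model
    output_tokens=3_000,           # the model's replies
    input_price_per_million=3.0,   # assumed USD per 1M input tokens
    output_price_per_million=15.0, # assumed USD per 1M output tokens
)
print(f"Estimated cost: ${cost:.2f}")
```

Notice that input tokens dominate in research workflows, because every tool result gets fed back into the model.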

Boyce’s Pro Tip for Cost Optimization: To keep those costs down, here’s a trick I use: process the initial information with a cheaper, faster model first. Get the gist of what you need. Then, feed that summarized version to Grok 4 for the final, super-smart processing. It’s like having a junior assistant do the initial legwork before the CEO (Grok 4) steps in for the final decision. Smart, right?
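Here’s that junior-assistant-then-CEO idea as a minimal sketch. The two model calls are passed in as plain functions, so you can wire them to whatever you like (an n8n sub-workflow, an HTTP request, and so on). The function and parameter names are hypothetical, and the prompts are just examples.

```python
from typing import Callable


def two_stage_answer(
    raw_text: str,
    question: str,
    summarize: Callable[[str], str],  # cheap, fast model
    reason: Callable[[str], str],     # Grok 4 (or another strong model)
) -> str:
    """Condense the raw material with a cheap model, then reason over the summary."""
    summary = summarize(
        f"Summarize the key facts relevant to: {question}\n\n{raw_text}"
    )
    # Only the short summary reaches the expensive model, not the raw text.
    return reason(f"Question: {question}\n\nSummary of research:\n{summary}")


# Stand-in functions so the sketch runs without any API calls.
def fake_cheap_model(prompt: str) -> str:
    return "n8n supports AI agent nodes."


def fake_strong_model(prompt: str) -> str:
    return f"Answer based on {len(prompt)} characters of context."


print(two_stage_answer("very long scraped page " * 500, "What does n8n support?",
                       fake_cheap_model, fake_strong_model))
```

The saving comes from the second prompt being tiny compared to the raw input, at the price of trusting the cheaper model’s summary.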

Key Takeaways for Implementing Grok 4

So, you’re ready to unleash Grok 4 in your automations? Awesome! But before you dive in, here are some key things I’ve learned that will help you get the most out of it, without breaking the bank or pulling your hair out.

- Prioritize Integration: Start simple. For basic chat tasks, use the direct n8n integration. It’s quick and easy. But if you’re building a complex AI agent that needs to use multiple tools, definitely explore OpenRouter. It’s worth the extra step for the stability it provides.

- Monitor Costs: Keep an eye on your token usage. Those research tasks can eat up tokens faster than I eat pizza! Remember my tip about using a cheaper model for initial processing to manage expenses.

- Account for Latency: Grok 4’s speed can be a bit unpredictable. Design your automations with this in mind. Maybe add a small delay, or build in some error handling for timeouts. It’s like planning for traffic on your commute.

- Leverage Its Reasoning: Don’t just use Grok 4 for simple stuff. It’s a powerhouse for tasks that need deep thinking, complex problem-solving, and sophisticated data analysis. Let it do the heavy lifting!

- Stay Updated: The AI world moves at warp speed. Regularly check for updates on Grok 4’s features, pricing, and how to integrate it. You don’t want to miss out on new superpowers!
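For the ‘Account for Latency’ point, here’s one way the retry idea can look in code: retry on timeout and wait a little longer each time. This is a generic sketch, not n8n’s implementation, and `call_with_retries` is a name I made up. Inside n8n, check the node’s own settings first, since nodes typically offer a built-in retry-on-fail option.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    task: Callable[[], T],
    attempts: int = 3,
    base_delay_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task(); on TimeoutError, wait (doubling each time) and try again."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except TimeoutError:
            if attempt == attempts:
                raise  # out of attempts: let the caller handle or notify
            sleep(base_delay_seconds * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")


# Usage: wrap any slow call, for example a function that queries Grok 4.
# result = call_with_retries(lambda: my_slow_model_call(), attempts=4)
```

With the defaults, the waits are 2 seconds and then 4 seconds before the third and final try.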

Conclusion

Well, we’ve reached the end of our journey, my friend! Grok 4 truly is a massive leap forward in AI capabilities. It’s got unparalleled intelligence and can be used for so many different things. By understanding its strengths and smartly integrating it with platforms like n8n, you’re not just automating tasks; you’re unlocking new levels of problem-solving and efficiency in your projects. Yes, you need to be smart about costs and speed, but the potential for building smarter, more efficient AI agents is just too exciting to ignore. Grok 4 is definitely a tool for the future of automation, and you’re now equipped to wield it!

And hey, if you’re looking to really dive deep into AI automation, master those token-saving tricks, or become a context engineering guru, consider joining a community. Platforms like the AI Automation Society Plus offer a fantastic space to connect with other like-minded folks, learn from each other, and build some seriously time-saving automations. It’s like a secret club for automation superheroes!

Now, go forth and build something amazing with Grok 4! And don’t be shy – share your experiences and discoveries in the comments below. I’d love to hear what you’re cooking up!

Frequently Asked Questions (FAQ)

Q: What is the main advantage of Grok 4 over other AI models?

A: Grok 4’s main advantages are its massive scale (a reported 1.7 trillion parameters), which contributes to its reasoning ability, and its design for handling multiple tasks concurrently. It also shows strong performance in complex academic and software engineering benchmarks, indicating advanced reasoning capabilities.

Q: Why would I use OpenRouter instead of directly integrating Grok 4 with n8n?

A: While direct integration works for basic chat, OpenRouter is super useful for more complex scenarios involving multiple tools (like web scrapers or research tools). It acts as an intermediary, centralizing billing and analytics, and more importantly, it helps mitigate parsing issues that can arise when different tools output complex data formats, making your multi-tool workflows more robust.

Q: How can I optimize costs when using Grok 4, since it’s not the cheapest model?

A: To optimize costs, consider a multi-model workflow. You can process initial information or perform preliminary tasks with a cheaper, faster AI model. Once you have a summarized or refined version of the data, feed that to Grok 4 for its advanced reasoning and final processing. This reduces the number of tokens Grok 4 needs to process, saving you money.

Q: What does ‘parameters’ mean in the context of AI models like Grok 4?

A: In AI models, ‘parameters’ are essentially the values that the model learns during its training process. Think of them as the ‘knowledge points’ or ‘connections’ within the AI’s neural network. A higher number of parameters generally means the model has a greater capacity to learn, understand, and generate complex patterns and information, leading to enhanced intelligence and problem-solving abilities.

Q: Grok 4’s speed can vary. How should I design my n8n automations to account for this?

A: Since Grok 4’s speed can fluctuate based on server load, it’s a good idea to design your n8n automations with this in mind. You might want to build in some buffer time or add error handling for potential timeouts. For critical workflows, consider implementing retry mechanisms or notifications if a task takes longer than expected. This makes your automations more resilient to varying response times.