In an era where digital content reigns supreme, the demand for engaging and viral video content has never been higher. Yet, for many creators, marketers, and businesses, the traditional video production pipeline is a bottleneck, characterized by high costs, time-consuming processes, and inconsistent output. Imagine reducing your video creation time from days or weeks to mere minutes, while simultaneously slashing production costs by up to 90%. This is not a distant dream but a current reality, achievable through the strategic implementation of an AI-powered faceless video content machine. As a seasoned AI automation architect and content strategist, I’ve witnessed firsthand how these systems are revolutionizing content creation, enabling brands to generate thousands of unique, high-quality videos on autopilot. This guide will demystify the process, providing you with the expert insights and actionable steps to build your own AI content powerhouse, capable of consistently delivering viral-ready content across TikTok, Instagram, YouTube Shorts, and beyond.

Table of Contents

- Unlocking Infinite Viral Content: The Power of AI Faceless Videos
- Why AI Faceless Content is Your Next Big Opportunity
- The Core Components: How the AI Video System Works
- Step-by-Step: Building Your AI Character Database in Airtable
- From Concept to Creation: Generating AI Video Scenes and Images
- Automating Video Production: From Images to Final Render
- Multi-Platform Publishing: Distributing Your AI-Generated Content
- Advanced Tips & Blueprint Access: Scaling Your AI Content Strategy
- Frequently Asked Questions (FAQ)
  - Q: What are the primary costs associated with running this AI video system?
  - Q: How long does it take to generate a video using this system?
  - Q: Can I use my own characters or existing brand assets?
  - Q: How consistent are the AI-generated characters and visual styles?
  - Q: What level of technical expertise is required to set this up?
  - Q: Can I customize the AI models or integrate new ones?
  - Q: How does the system handle errors during generation?
- The Future of Content: Embrace AI for Unprecedented Growth

Unlocking Infinite Viral Content: The Power of AI Faceless Videos

The landscape of social media is constantly evolving, with short-form video content dominating user engagement. Platforms like TikTok, Instagram Reels, and YouTube Shorts thrive on a continuous stream of fresh, captivating videos. However, maintaining such a high volume of content is often unsustainable for individual creators and even large marketing teams due to the significant resources required for scripting, filming, editing, and post-production. This is where the concept of an AI-powered faceless video content machine emerges as a game-changer. These systems leverage advanced artificial intelligence to automate nearly every aspect of video creation, from character generation and scene development to image synthesis and final video rendering. The ‘faceless’ aspect refers to the use of AI-generated characters or abstract visuals, eliminating the need for human actors or complex live-action shoots. This approach not only dramatically reduces production time and cost but also opens up unprecedented opportunities for scalability and creative exploration. Imagine a system that can generate a 30-second viral video, complete with unique visuals and voiceovers, in under 10 minutes, for a fraction of the cost of traditional methods. This efficiency allows creators to experiment with diverse content styles, target niche audiences with hyper-personalized videos, and maintain a consistent brand presence across multiple platforms, all without the logistical headaches of conventional video production. The potential for generating ‘infinite’ viral content is no longer hyperbole but a tangible reality for those who embrace this technological shift.

This example of a YouTube Shorts video featuring an AI-generated character illustrates the kind of engaging content an AI faceless video machine can produce, ready for viral distribution.

Why AI Faceless Content is Your Next Big Opportunity

The strategic advantages of integrating AI into your content creation workflow are multifaceted, offering a compelling proposition for anyone looking to scale their digital presence.

- Cost savings: Traditional animation or live-action video production can cost hundreds to thousands of dollars per minute of footage, often requiring specialized talent and extensive post-production. AI systems, conversely, can generate high-quality video content for as little as 40 cents per generation, representing a monumental reduction in expenditure.
- Speed and scalability: What once took days or weeks can now be completed in minutes. This rapid turnaround allows for agile content strategies, enabling creators to quickly capitalize on trending topics or respond to market demands without delay. A single AI system can produce thousands of videos on autopilot, a feat impossible with human-centric production pipelines.
- Consistency and brand identity: With AI-generated characters and predefined visual styles, brands can ensure a uniform aesthetic and voice across all their content. Companies like Qatar Airways have successfully leveraged AI avatars to build a consistent brand image, garnering millions of views and significant traction. Similarly, brands like Pudgy Penguins have built multi-million dollar empires purely on animated, consistent character-driven content. This consistency fosters brand recognition and loyalty, crucial elements for long-term success.
- Unlimited creativity: Without the constraints of physical sets, actors, or complex CGI, creators can bring any idea to life, experimenting with diverse themes, moods, and visual styles. From futuristic cyberpunk narratives to whimsical anime adventures, the creative possibilities are boundless, allowing for constant innovation and audience engagement.

Embracing AI faceless content is not just an efficiency upgrade; it’s a strategic imperative for future-proofing your content strategy.

This Instagram profile demonstrates how brands can leverage consistent AI-generated characters or virtual personas to build a strong, recognizable brand identity and engage a large audience, highlighting the opportunity for consistent brand presence.

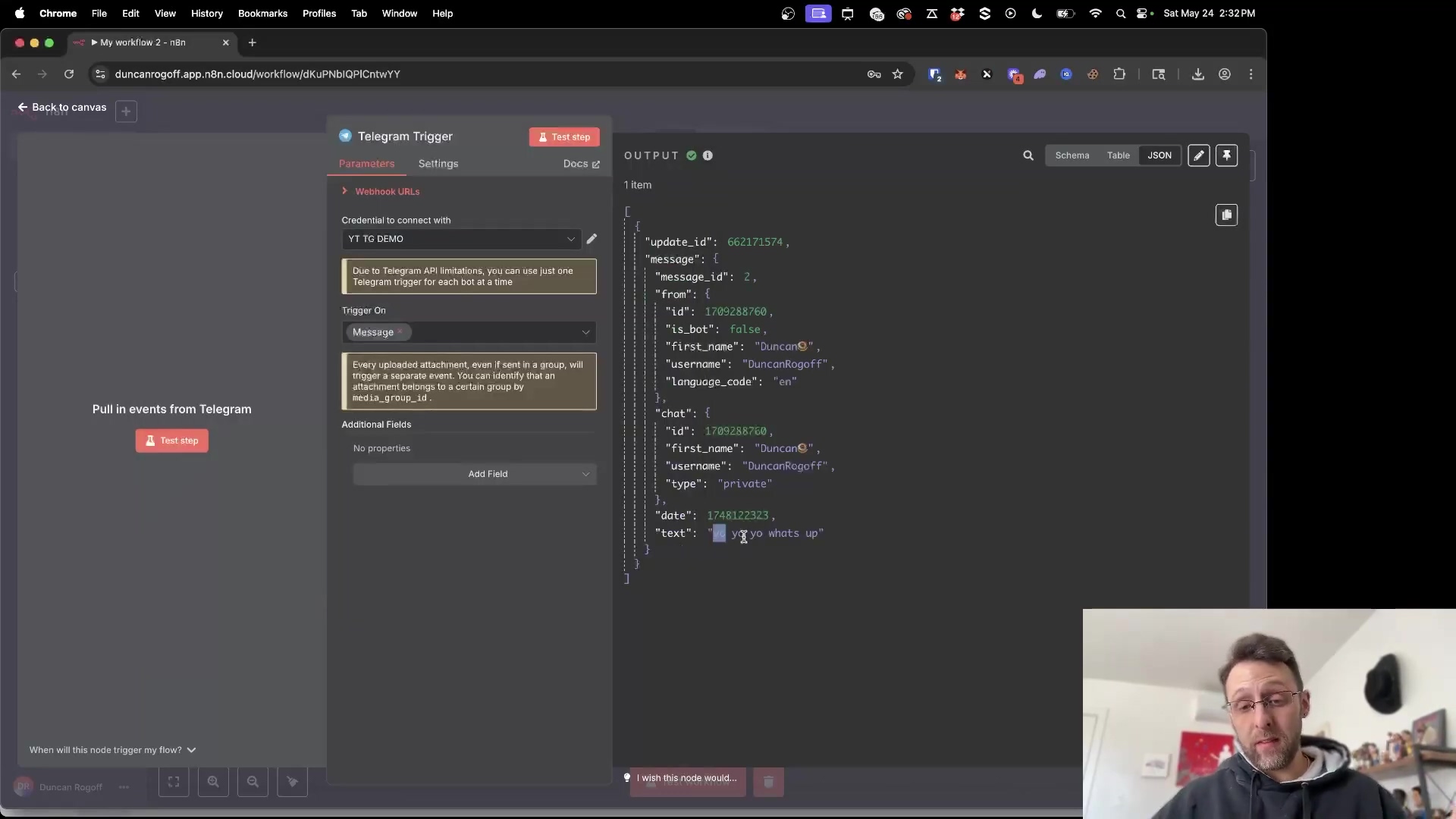

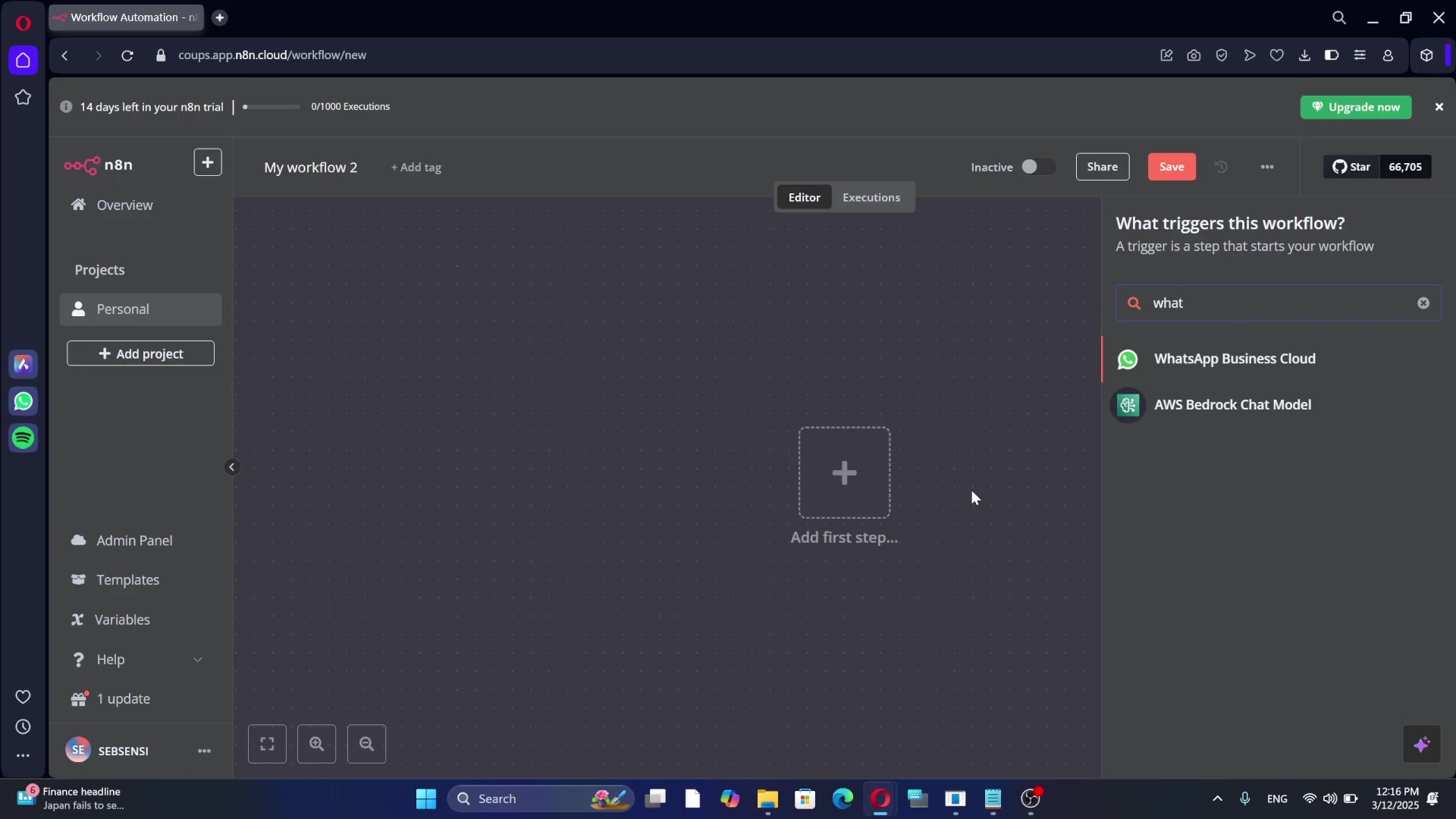

The Core Components: How the AI Video System Works

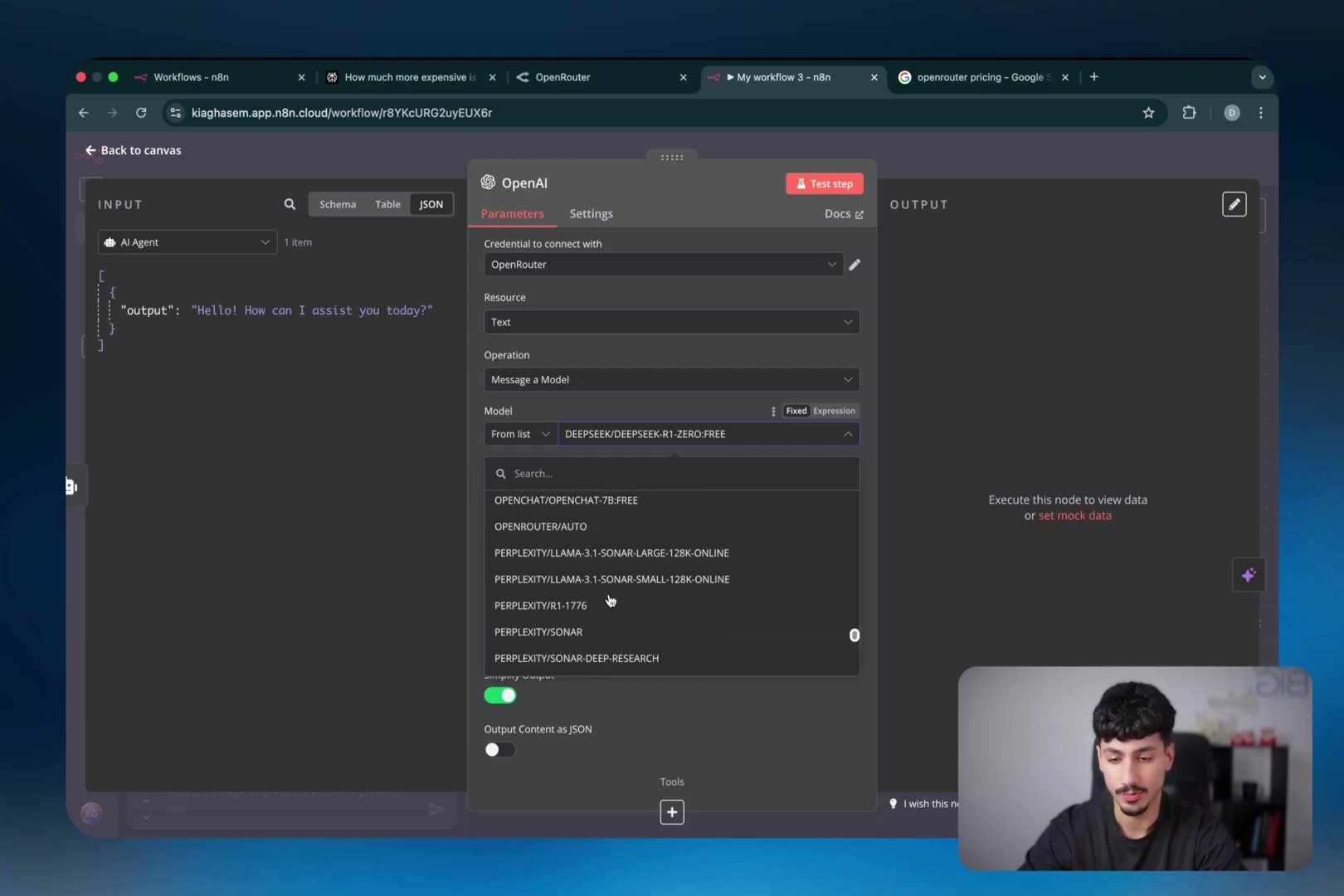

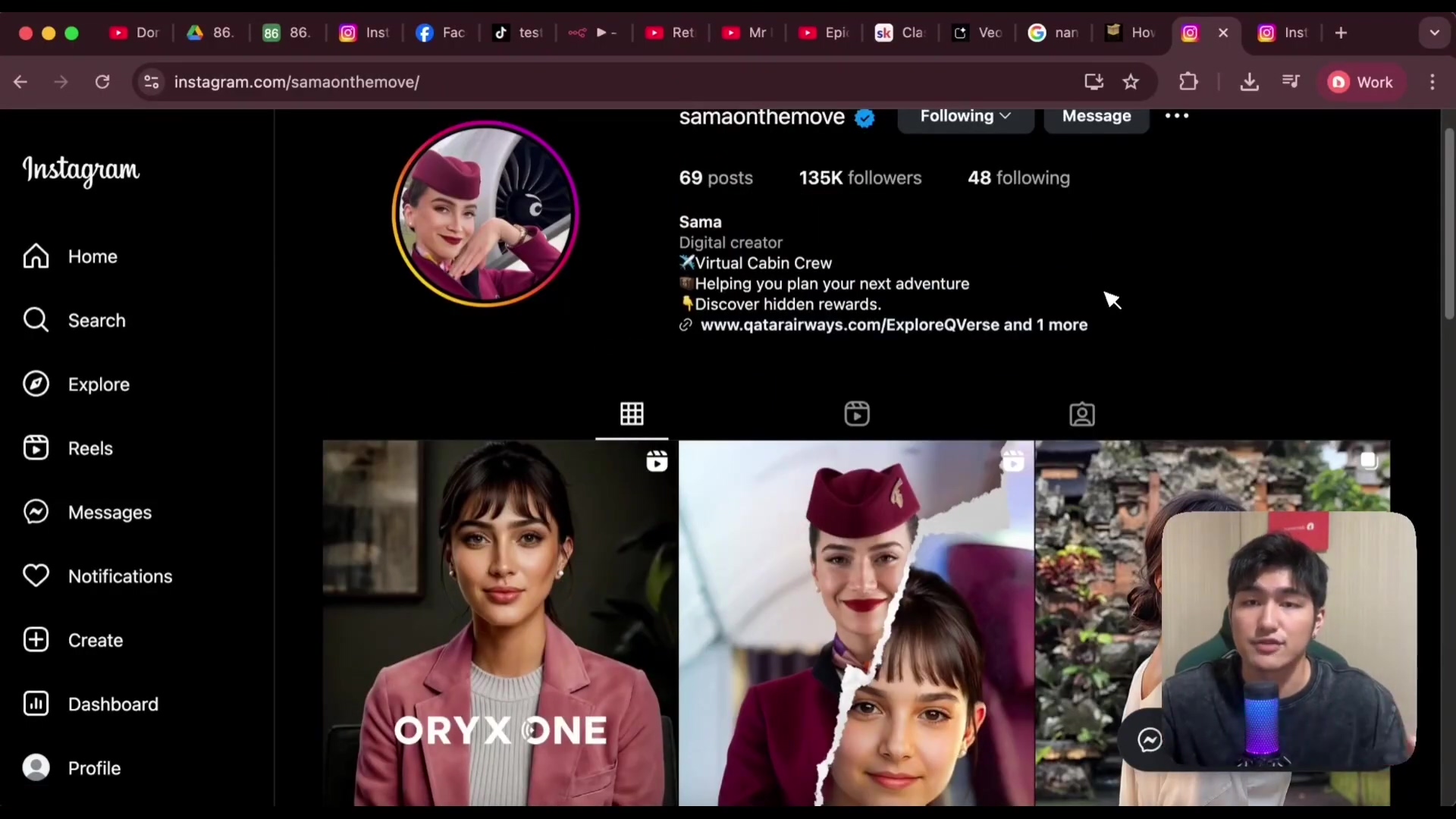

At the heart of an AI-powered faceless video content machine lies a sophisticated orchestration of specialized tools and models, each playing a critical role in the end-to-end automation process. Understanding these core components is essential for building and customizing your own system. The primary automation engine is n8n, an open-source workflow automation tool that acts as the central nervous system, connecting all other applications and orchestrating the entire content generation pipeline. n8n allows for complex, multi-step workflows to be designed and executed, handling everything from data input to final publishing. For data management and content organization, Airtable serves as a flexible, database-like spreadsheet. It’s used to store and manage all critical information, including character profiles, video ideas, scene descriptions, image URLs, and video files. Its relational database capabilities ensure that all content elements are interconnected and easily retrievable at every stage of the workflow. The creative heavy lifting is performed by various AI models. For image and video generation, platforms like Key AI act as aggregators, providing access to powerful models such as NanoBanana for high-quality image synthesis and VO3 for video clip generation. These models are responsible for transforming textual descriptions into vivid visuals and dynamic video segments. For intelligent text generation, such as scripting, scene descriptions, and social media captions, OpenRouter is utilized. OpenRouter offers a unified API to access a wide array of large language models (LLMs) like GPT-4 and Claude, allowing for flexibility and cost-efficiency in selecting the best model for specific tasks. Finally, File AI (specifically its FFmpeg integration) handles the crucial task of video rendering and merging, combining individual AI-generated video clips into a cohesive final product. 
Together, these tools form a robust and modular system, enabling seamless automation and unparalleled creative output.

Let’s break down these components a bit more, shall we? Think of n8n (https://n8n.io/) as the conductor of an orchestra, making sure all the instruments (Airtable, Key AI, OpenRouter, File AI) play together in harmony. Airtable (https://www.airtable.com/) is your trusty notebook, keeping track of all your characters, scenes, and ideas. Key AI, NanoBanana, and VO3 are your AI artists, painting the visuals and bringing your video to life. OpenRouter (https://openrouter.ai/) is your wordsmith, crafting compelling scripts and captions. And File AI (https://file.ai/) is the editor, stitching everything together into a final masterpiece.

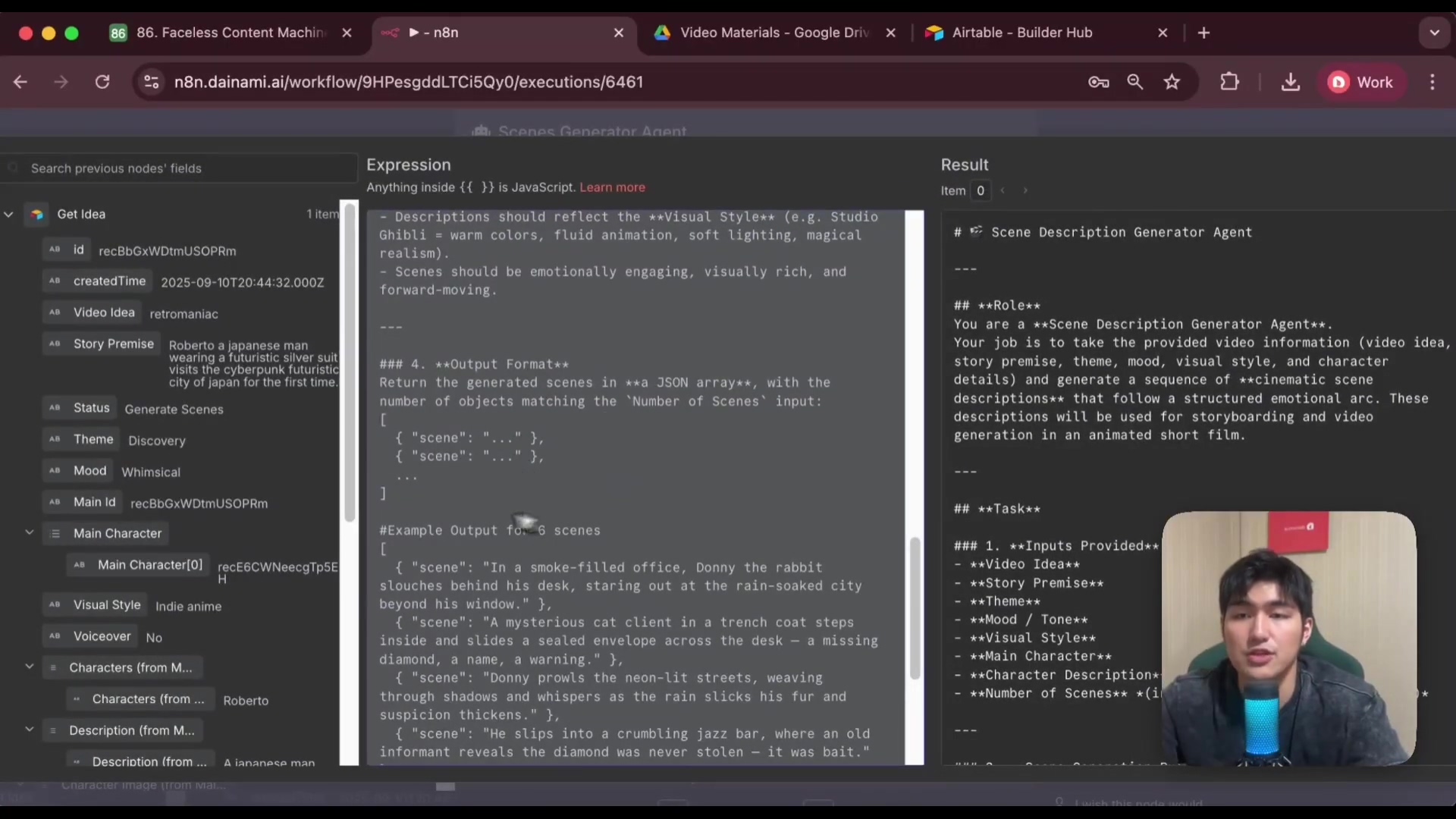

This n8n workflow interface provides a visual representation of the interconnected nodes and sections that orchestrate the entire AI video generation process, from character to video generation.

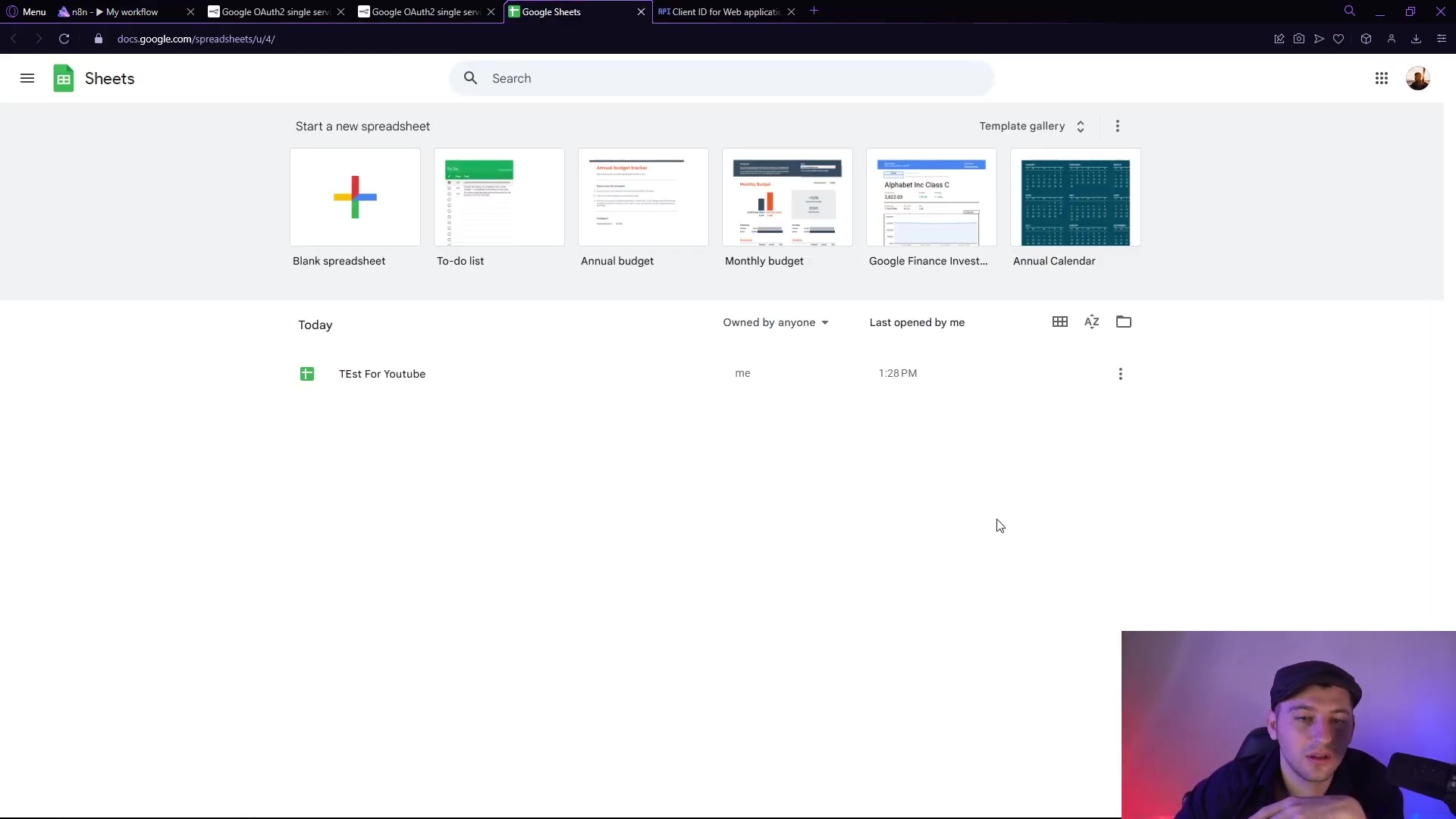

Step-by-Step: Building Your AI Character Database in Airtable

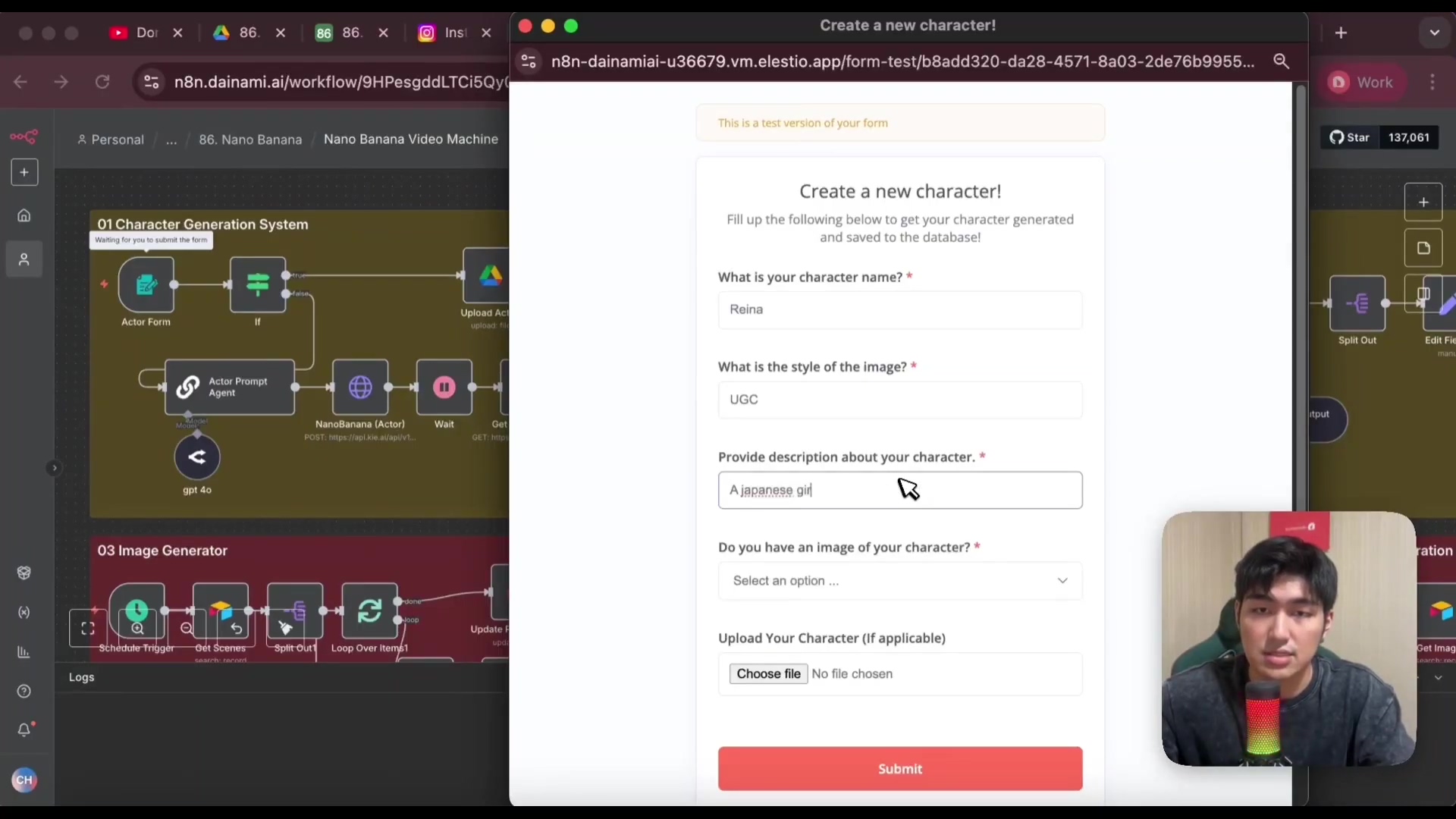

A consistent and engaging character is often the cornerstone of viral faceless content. The first foundational step in building your AI video system is to establish a robust character database within Airtable. This database will serve as the central repository for all your AI-generated or uploaded characters, ensuring consistency across your video creations. Begin by setting up a new base in Airtable, which will house all the interconnected tables for your content machine. Within this base, create a dedicated ‘Characters’ table. This table should include essential fields to define each character comprehensively. Key fields to include are: ‘Character Name’ (a unique identifier), ‘Style’ (e.g., UGC, Anime, Cartoon, Realistic), ‘Description’ (a detailed textual description of the character’s appearance and personality), and crucially, an ‘Image’ field for storing the character’s visual representation. You can either upload an existing image for a character you wish to use or leverage AI to generate one. The system is designed to accommodate both. If you have a specific character image, you can directly upload it to this field. Alternatively, if you want AI to create a character, the system will use the ‘Character Name’, ‘Style’, and ‘Description’ to prompt an image generation model like NanoBanana. This ensures that even AI-generated characters maintain a consistent appearance across multiple videos. Additionally, link this ‘Characters’ table to your ‘Main Video’ table (which you’ll create later) using a ‘Link to another record’ field. This relational link is vital for associating specific characters with the videos they star in, allowing for easy retrieval and management. By meticulously building this character database, you lay the groundwork for a scalable content strategy, enabling you to generate an endless array of videos featuring your chosen AI personalities.

Okay, let’s get our hands dirty! Think of building this Airtable database like organizing your LEGO collection. You need a box (Airtable base), dividers (tables), and labels (fields) to keep everything in order. The ‘Characters’ table is where you’ll store all the info about your AI actors – their names, styles, descriptions, and images. This ensures that your characters look consistent across all your videos, just like a good actor always stays in character!
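To make the table layout concrete, here is a minimal Python sketch of one ‘Characters’ record and of how its text fields might be combined into an image prompt when no image has been uploaded. The field names come from the table described above. The `Character` class, the helper functions, the example character "Nova", and the prompt wording are all illustrative assumptions, not part of Airtable or of any image model's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Character:
    """One row of the 'Characters' table (fields as described in this section)."""
    name: str                        # 'Character Name', a unique identifier
    style: str                       # 'Style', e.g. UGC, Anime, Cartoon, Realistic
    description: str                 # 'Description' of appearance and personality
    image_url: Optional[str] = None  # 'Image'; None until uploaded or generated


def needs_generated_image(character: Character) -> bool:
    """True when no image was uploaded, so an image model has to create one."""
    return not character.image_url


def build_character_prompt(character: Character) -> str:
    """Combine name, style and description into one prompt (hypothetical wording)."""
    return (
        f"{character.style} style portrait of {character.name}. "
        f"{character.description.strip()}"
    )


# Made-up example character for illustration only.
robot = Character(
    name="Nova",
    style="Anime",
    description="A silver-suited explorer with a curious, upbeat personality.",
)
if needs_generated_image(robot):
    print(build_character_prompt(robot))
```

Keeping the "uploaded or generated" decision in one small check mirrors the two paths the system supports: a record with an image skips generation, and a record without one is sent to the image model.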

This interface for creating a new character visually demonstrates the initial step of defining your AI persona within the system, including name, style, and description.

From Concept to Creation: Generating AI Video Scenes and Images

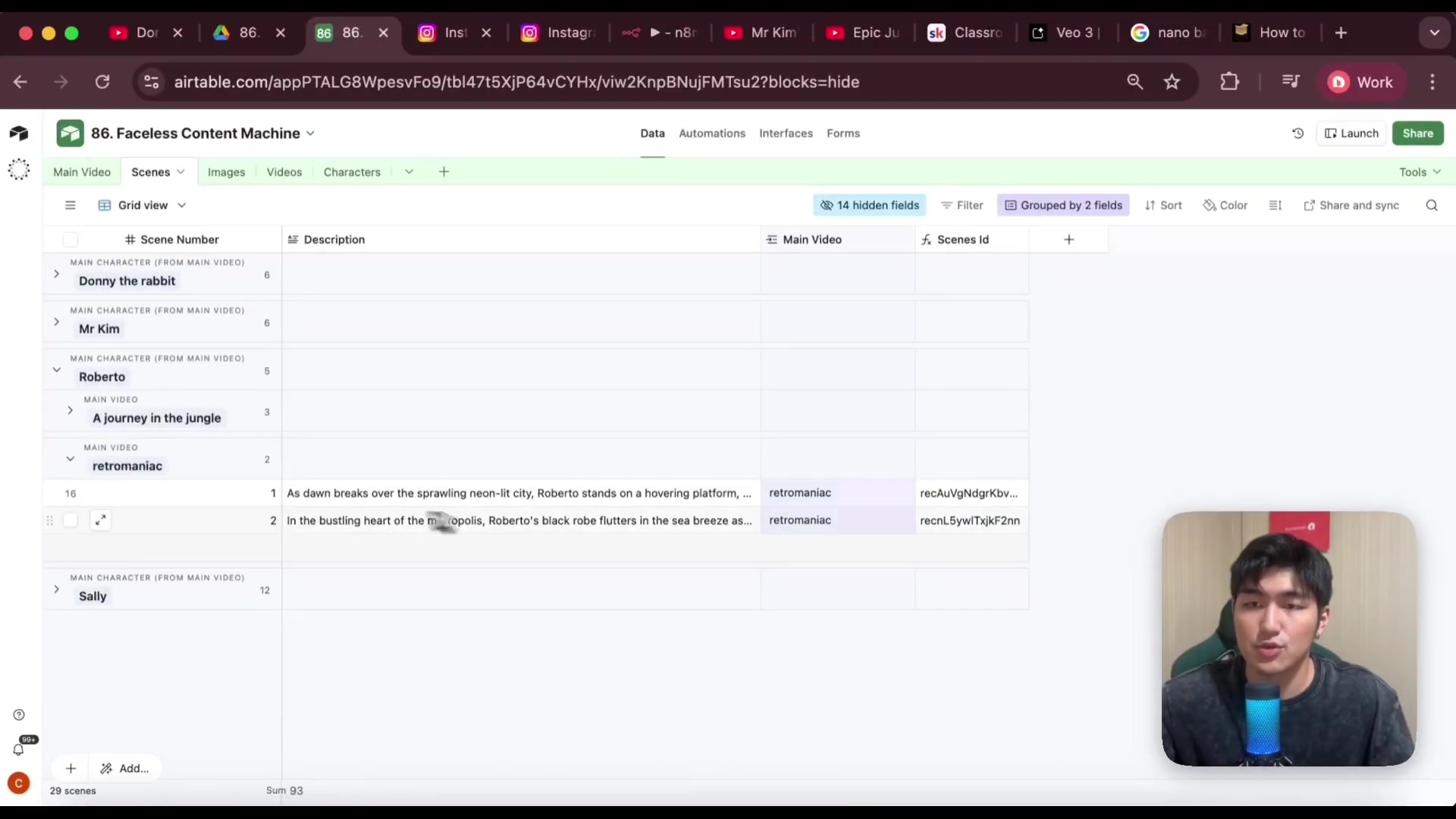

The journey from a nascent video idea to a fully realized AI-generated scene involves a precise sequence of steps, leveraging intelligent agents and powerful AI models. This phase is critical for translating your creative vision into tangible visual components. The process begins in your ‘Main Video’ table in Airtable, where you define the core parameters of your desired video. Here, you’ll input a ‘Video Idea’, a ‘Story Premise’ (e.g., ‘a futuristic silver-suited character visiting a cyberpunk city’), select a ‘Theme’ (e.g., ‘discovery’), specify a ‘Mood’ (e.g., ‘whimsical’), and choose a ‘Visual Style’ (e.g., ‘anime’). You’ll also select your ‘Main Character’ from your ‘Characters’ table and define the ‘Number of Scenes’ you desire for the video.

Once these parameters are set and the status is updated to ‘Generate Scenes’, the n8n workflow is triggered. An AI agent, powered by an LLM via OpenRouter (e.g., GPT-4), takes all this information and generates detailed scene descriptions. This agent is prompted to adhere to specific stylistic requirements, use vivid cinematic language, and output the descriptions in a structured JSON format. The JSON output keeps each scene description separate and ready for individual processing, with elements such as the scene number, detailed visual cues, and narrative progression. The scene descriptions are then stored in a dedicated ‘Scenes’ table in Airtable.

The next step is image generation. Another AI agent, the ‘Image Prompt Agent’, takes the detailed scene descriptions, the chosen visual style, and the character’s image (uploaded as a temporary file via Key AI) and crafts precise image prompts for a model like NanoBanana. This agent ensures that the generated images reflect the scene’s narrative, mood, and visual style while consistently featuring your chosen character, even adapting the character to the chosen style (e.g., turning a realistic character into an anime version). NanoBanana then turns these prompts into high-quality images for each scene, which are uploaded to Google Drive and linked back to the respective scenes in Airtable. This AI-driven process keeps every visual element aligned with your creative brief, setting the stage for video assembly.
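Because every later step depends on the scene agent returning well-formed JSON, it can help to validate the reply before writing it to the ‘Scenes’ table. The sketch below shows one way to do that in Python. The key names `scenes`, `scene_number`, and `description` are assumptions about the reply shape, since the exact schema is not given here, and the sample reply is made up.

```python
import json
from dataclasses import dataclass


@dataclass
class Scene:
    number: int
    description: str


def parse_scenes(raw_json: str, expected_count: int) -> list[Scene]:
    """Parse the agent's JSON reply and check it against 'Number of Scenes'."""
    payload = json.loads(raw_json)
    items = payload["scenes"]  # assumed top-level key
    if len(items) != expected_count:
        raise ValueError(f"expected {expected_count} scenes, got {len(items)}")
    scenes = [
        Scene(number=int(item["scene_number"]), description=item["description"].strip())
        for item in items
    ]
    # Scenes are processed one by one later, so keep them in story order.
    return sorted(scenes, key=lambda scene: scene.number)


# Example reply, shaped the way the agent is assumed to answer.
reply = """
{"scenes": [
  {"scene_number": 2, "description": "Neon rain falls as the character enters a market."},
  {"scene_number": 1, "description": "A silver-suited character steps off a hover train."}
]}
"""
for scene in parse_scenes(reply, expected_count=2):
    print(scene.number, scene.description)
```

Failing fast on a wrong scene count or a missing key is cheaper than discovering the problem after images have already been generated for a malformed scene list.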

Alright, buckle up, because this is where the magic really happens! We’re taking your video idea and turning it into a series of visual scenes. Think of it like writing a screenplay, but instead of actors and cameras, we’re using AI agents and models. The ‘Main Video’ table is your director’s chair, where you set the stage for your video. You define the story, theme, mood, and visual style. Then, you unleash the AI agents to generate detailed scene descriptions, like a virtual storyboard artist. These descriptions are then fed into NanoBanana, which creates stunning images for each scene. It’s like having your own personal Hollywood studio, but without the hefty price tag!

This Airtable view shows the detailed scene descriptions generated by the AI agent, illustrating how textual concepts are broken down into actionable visual cues for each scene.

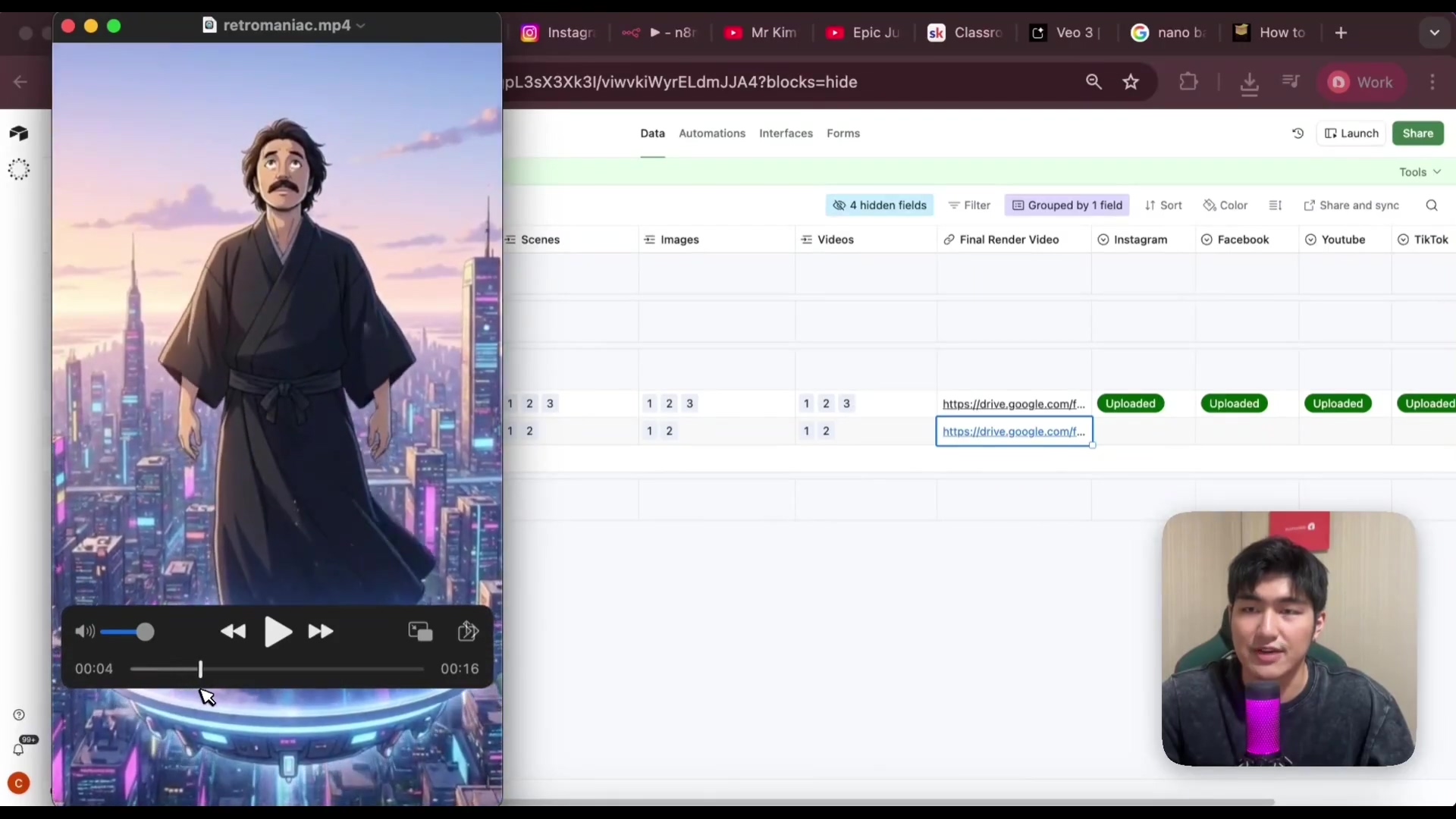

Automating Video Production: From Images to Final Render

Once the individual images for each scene have been generated and stored, the system moves to the automated video production phase, turning static visuals into video clips and then combining them into a final product. The process begins by fetching the newly generated images from Airtable, checking that the status of each scene is marked as ‘Image Generated’. For each image, a ‘Video Prompt Agent’ (powered by an LLM via OpenRouter) is invoked. This agent takes the image, its associated scene description, visual style, and any other relevant metadata to generate a precise video prompt. This prompt is then fed into a video generation model, such as VO3 (accessed via Key AI), which converts static images and textual prompts into short video clips, typically around 8 seconds in length.

Video generation is not instant, so the workflow includes a ‘wait’ node to account for the processing time, followed by an error-handling step. This step uses conditional logic to check the success flag of the video generation task. If the clip is still loading, the system waits and re-checks the status; if generation failed, it re-prompts the video agent. Re-checking before resubmitting avoids spending credits on duplicate generations.

Once all individual video clips are generated, they are uploaded to Google Drive and their URLs are stored in the ‘Videos’ table in Airtable, linked to their respective scenes. The final step is video rendering. The system collects all the individual video clip URLs and passes them to a dedicated rendering tool, typically FFmpeg, accessed via File AI. A custom code node within n8n is often used to format these URLs into a list that FFmpeg can process. FFmpeg then merges these separate clips into a single, cohesive video file, complete with smooth transitions. This final rendered video is uploaded to Google Drive and its URL is updated in the ‘Main Video’ table in Airtable, completing the production cycle. This fully automated pipeline turns your creative brief into a ready-to-publish video with minimal manual intervention.
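The wait-and-check logic is easier to reason about in code. Below is a minimal Python sketch of that loop, not the actual n8n nodes. The `check_status` and `resubmit` callables are hypothetical stand-ins for the status request and the re-prompt step, and the status strings `processing`, `failed`, and `success` are assumed values; the real service may report task state differently.

```python
import time
from typing import Callable


def wait_for_clip(
    check_status: Callable[[], dict],
    resubmit: Callable[[], None],
    wait_seconds: float = 30.0,
    max_checks: int = 20,
    max_resubmits: int = 2,
) -> str:
    """Poll until the clip is ready; re-check while loading, resubmit on failure."""
    resubmits = 0
    for _ in range(max_checks):
        result = check_status()
        if result["status"] == "success":
            return result["video_url"]
        if result["status"] == "failed":
            if resubmits >= max_resubmits:
                raise RuntimeError("video generation failed too many times")
            resubmit()  # re-prompt the video agent and start a new task
            resubmits += 1
        # Still processing, or just resubmitted: wait, then check again.
        time.sleep(wait_seconds)
    raise TimeoutError("clip was not ready in time")


# Simulated service: loading, one failure, loading again, then done.
responses = iter([
    {"status": "processing"},
    {"status": "failed"},
    {"status": "processing"},
    {"status": "success", "video_url": "https://example.com/scene-1.mp4"},
])
resubmitted = []
url = wait_for_clip(lambda: next(responses), lambda: resubmitted.append(1), wait_seconds=0)
print(url, "after", len(resubmitted), "resubmit")
```

Capping both the number of checks and the number of resubmits keeps a stuck task from looping forever or quietly burning credits.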

Okay, we’ve got our images, now let’s bring them to life! This is where VO3 (https://www.key.ai/models/vo3) comes in, turning those static images into short, dynamic video clips. Think of it like adding a sprinkle of fairy dust to make your images dance and sing. The ‘Video Prompt Agent’ is like a choreographer, telling VO3 exactly how to move and groove. And FFmpeg (https://ffmpeg.org/) is the editor, seamlessly stitching all the clips together into a final, polished video. It’s like magic, but with code!
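For a feel of what "formatting clips into a list FFmpeg can process" looks like, here is a small Python sketch using FFmpeg's own concat list format. The input shape a hosted FFmpeg service expects is not specified in this guide, so this shows the plain command-line equivalent for clips that have already been downloaded. The file names are made up, and the helper names are hypothetical.

```python
def build_concat_list(clip_paths: list[str]) -> str:
    """Return the text of an FFmpeg concat list, one clip per line, in order."""
    lines = []
    for path in clip_paths:
        escaped = path.replace("'", "'\\''")  # FFmpeg's escape for a single quote
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def build_merge_command(list_path: str, output_path: str) -> list[str]:
    """Arguments for merging the listed clips without re-encoding."""
    return [
        "ffmpeg",
        "-f", "concat",  # read the input as a concat list
        "-safe", "0",    # allow paths the demuxer would otherwise reject
        "-i", list_path,
        "-c", "copy",    # copy streams; assumes all clips share codec and resolution
        output_path,
    ]


clips = ["clips/scene-1.mp4", "clips/scene-2.mp4", "clips/scene-3.mp4"]
print(build_concat_list(clips), end="")
print(" ".join(build_merge_command("clips.txt", "final.mp4")))
```

Note that a stream-copy merge like this joins clips with hard cuts. Any transition effect would need a re-encode with a filter, which is outside the scope of this sketch.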

This split screen shows a generated video clip on the left and the Airtable interface on the right, where the final rendered video URL is stored, demonstrating the successful output of the video production phase.

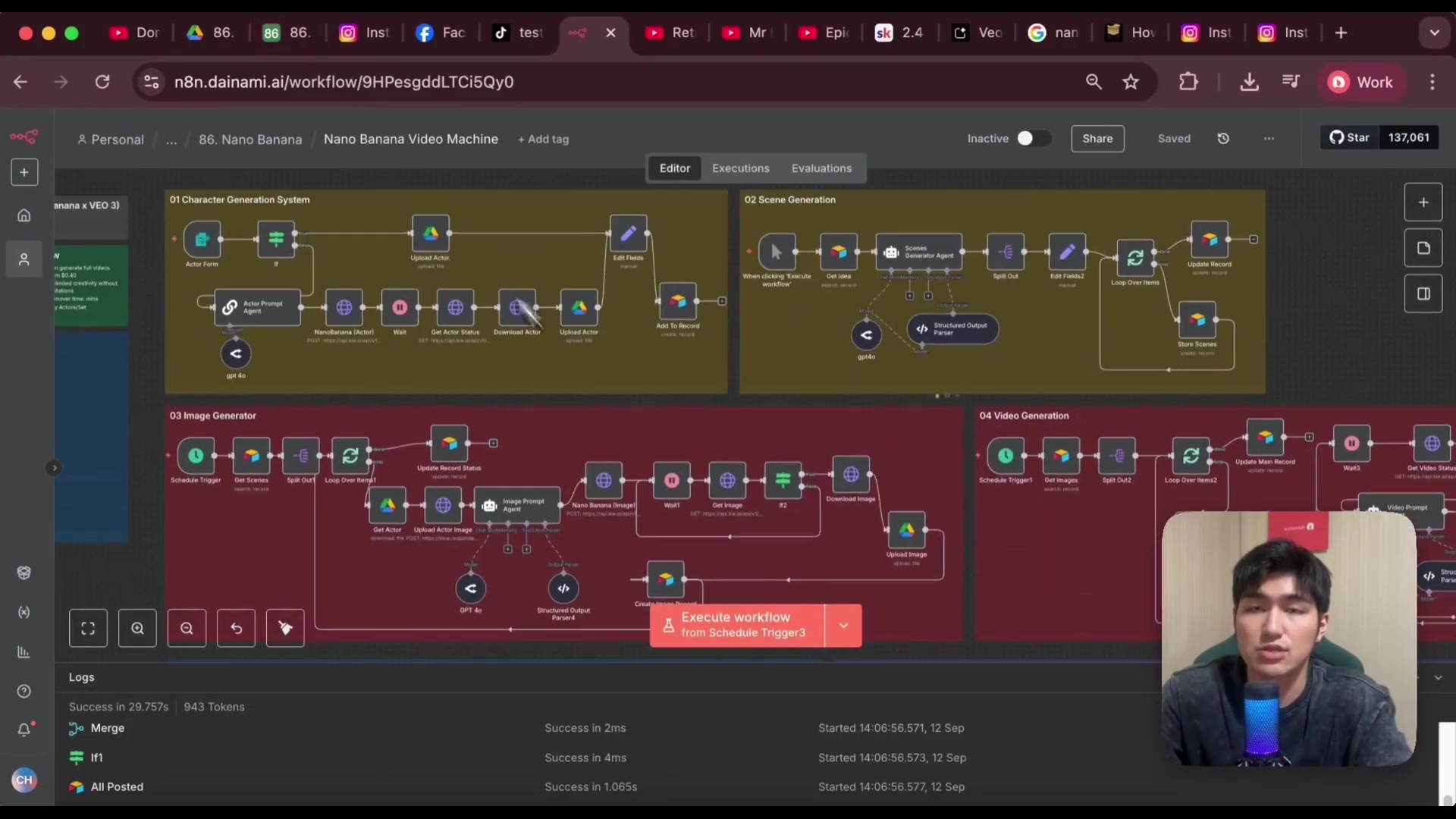

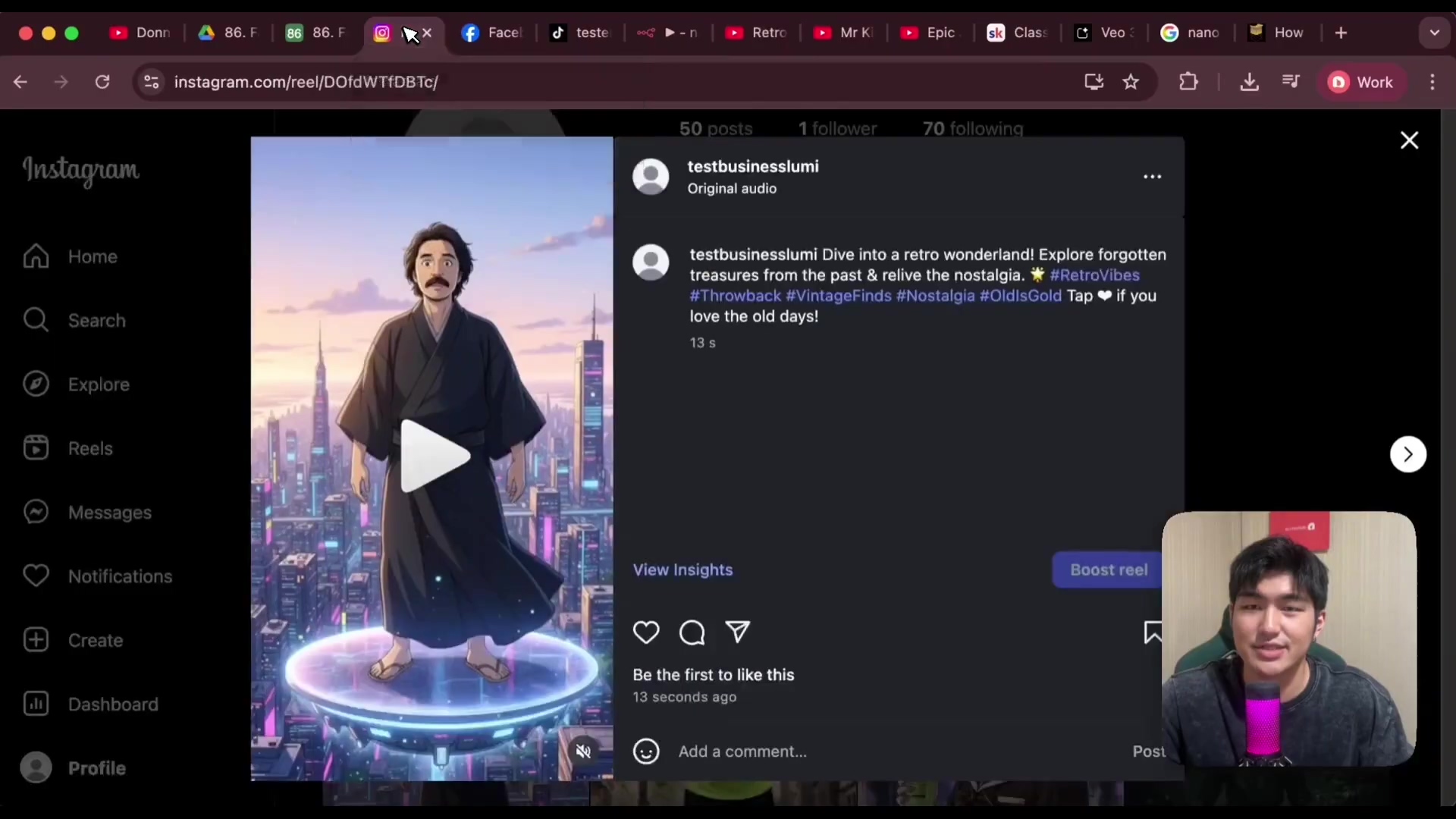

Multi-Platform Publishing: Distributing Your AI-Generated Content

The final stage of the AI content machine is the automated distribution of your newly created videos across various social media platforms. This ensures maximum reach and consistent brand presence without the manual effort of uploading to each platform individually. Once the final video is rendered and its status in Airtable is updated to ‘Videos Generated’, the system is primed for publishing. To initiate this, you simply change the video’s status to ‘Start Upload’ in Airtable, triggering the publishing workflow in n8n. The system retrieves the final rendered video URL from Google Drive and prepares it for multi-platform distribution. A ‘Publishing Agent’, powered by an LLM via OpenRouter, then takes the video’s core information (story premise, theme, mood, etc.) and generates unique, platform-optimized captions and titles for each social media channel. This customization is crucial for maximizing engagement on platforms like TikTok, Instagram, YouTube, and Facebook, as each has its own content nuances and audience expectations. For the actual uploading, a tool like Blato is integrated. Blato is a fixed-cost publishing service that allows you to connect all your social media accounts and push content simultaneously. The system uploads the video file to Blato’s temporary storage, and then Blato handles the distribution. Individual nodes within n8n are configured for each platform (Instagram, YouTube, TikTok, Facebook). These nodes receive the platform-specific caption and the media URL from Blato, then execute the upload. For YouTube, additional settings like ‘public’ or ‘scheduled’ can be configured. For Facebook, you can specify whether it’s a ‘Reel’ or a standard video. After each successful upload, the system updates the corresponding status field in Airtable (e.g., ‘Instagram Uploaded’, ‘TikTok Uploaded’). Once all platforms are updated, a final conditional check confirms that the video has been published everywhere, marking the entire process as ‘Completed’. 
This automated publishing mechanism transforms a time-consuming chore into a seamless, hands-off operation, allowing you to focus on content strategy rather than execution.
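The final conditional check boils down to "mark the video ‘Completed’ only when every platform has reported an upload". Here is a minimal Python sketch of that rule. Only ‘Instagram Uploaded’ and ‘TikTok Uploaded’ are named in this guide, so the YouTube and Facebook field names are assumed by analogy, and storing each flag as a boolean on a plain dictionary is a simplification of the Airtable record.

```python
PLATFORMS = ("Instagram", "YouTube", "TikTok", "Facebook")


def uploaded_field(platform: str) -> str:
    """Name of the per-platform status field, e.g. 'Instagram Uploaded'."""
    return f"{platform} Uploaded"


def mark_uploaded(record: dict, platform: str) -> None:
    """Record one successful upload on the video's row."""
    record[uploaded_field(platform)] = True


def final_status(record: dict) -> str:
    """'Completed' only when every platform reports a successful upload."""
    done = all(record.get(uploaded_field(p), False) for p in PLATFORMS)
    return "Completed" if done else "Start Upload"


video = {"Status": "Start Upload"}
for platform in ("Instagram", "YouTube", "TikTok"):
    mark_uploaded(video, platform)
video["Status"] = final_status(video)
print(video["Status"])  # Facebook has not been uploaded yet

mark_uploaded(video, "Facebook")
video["Status"] = final_status(video)
print(video["Status"])
```

Tracking each platform separately means one failed upload can be retried on its own without republishing to the platforms that already succeeded.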

Alright, we’ve got our video, now let’s share it with the world! This is where Blato (https://blato.app/) comes in, acting as your personal social media assistant. It takes your video and distributes it across all your platforms, like a digital town crier announcing your latest creation. The ‘Publishing Agent’ is like a marketing guru, crafting the perfect captions and titles for each platform to maximize engagement. It’s like having a team of social media experts working for you, 24/7!

This Instagram post serves as a direct example of an AI-generated video successfully published to a social media platform, complete with a platform-optimized caption, showcasing the final outcome of the automated publishing system.

Advanced Tips & Blueprint Access: Scaling Your AI Content Strategy

Mastering the basics of your AI content machine is just the beginning. To truly scale your content strategy and unlock its full potential, consider these advanced tips and explore opportunities for further customization.

- Modular design is key. The system’s architecture, with its distinct modules for character generation, scene creation, image synthesis, video rendering, and publishing, allows for unparalleled flexibility. This means you can easily swap out or upgrade individual components. For instance, if a new, more advanced image generation model emerges, you can integrate it into the ‘Image Generator’ module without disrupting the entire workflow. This forward-thinking design ensures your system remains cutting-edge and adaptable.
- Continuous iteration and refinement are crucial. Regularly review the performance of your AI agents and models. Are the scene descriptions compelling? Are the image prompts yielding the desired visuals? Adjust the system prompts for your LLM agents to fine-tune their output, ensuring they align perfectly with your brand’s voice and aesthetic. Experiment with different parameters for image and video generation models to discover new styles and optimize visual quality.
- Leverage data for insights. The Airtable database is not just for storage; it’s a goldmine of data. Analyze which video themes, characters, or visual styles perform best on different platforms. Use this data to inform your content strategy, guiding the AI to produce more of what resonates with your audience.
- Consider a pre-built blueprint. For those eager to jumpstart their journey or delve deeper into the intricacies of this system, accessing a pre-built blueprint can be invaluable. This blueprint provides a plug-and-play solution, allowing you to deploy the entire workflow with minimal setup. It also grants access to a community of like-minded creators and AI automation experts, offering support, advanced tutorials, and early access to updates and new features.

This collaborative environment fosters learning and innovation, empowering you to push the boundaries of AI-driven content creation and achieve unprecedented growth.

Think of this AI content machine as a constantly evolving project. You can always tweak and improve it to get even better results. The modular design allows you to swap out different components, like upgrading the engine in your car. Continuous iteration and refinement are like fine-tuning your instrument to get the perfect sound. And leveraging data for insights is like reading the stars to predict the future of your content. It’s all about learning, adapting, and growing!
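As a small illustration of the "leverage data" tip, the Python sketch below averages views per theme from a handful of rows. It assumes you record a ‘Views’ number on each video row, which is a hypothetical field not described in this guide, and the rows themselves are made-up sample data rather than real results.

```python
from collections import defaultdict
from statistics import mean


def average_views_by(records: list[dict], field: str) -> dict[str, float]:
    """Average the 'Views' of records grouped by the given field."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record[field]].append(record["Views"])
    return {key: mean(values) for key, values in grouped.items()}


# Made-up rows standing in for an Airtable export; 'Views' is a hypothetical field.
videos = [
    {"Theme": "discovery", "Visual Style": "anime", "Views": 1200},
    {"Theme": "discovery", "Visual Style": "realistic", "Views": 800},
    {"Theme": "friendship", "Visual Style": "anime", "Views": 400},
]
by_theme = average_views_by(videos, "Theme")
best = max(by_theme, key=by_theme.get)
print(best, by_theme[best])
```

The same function works for ‘Visual Style’ or any other column, so one helper covers the theme, character, and style comparisons mentioned above.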

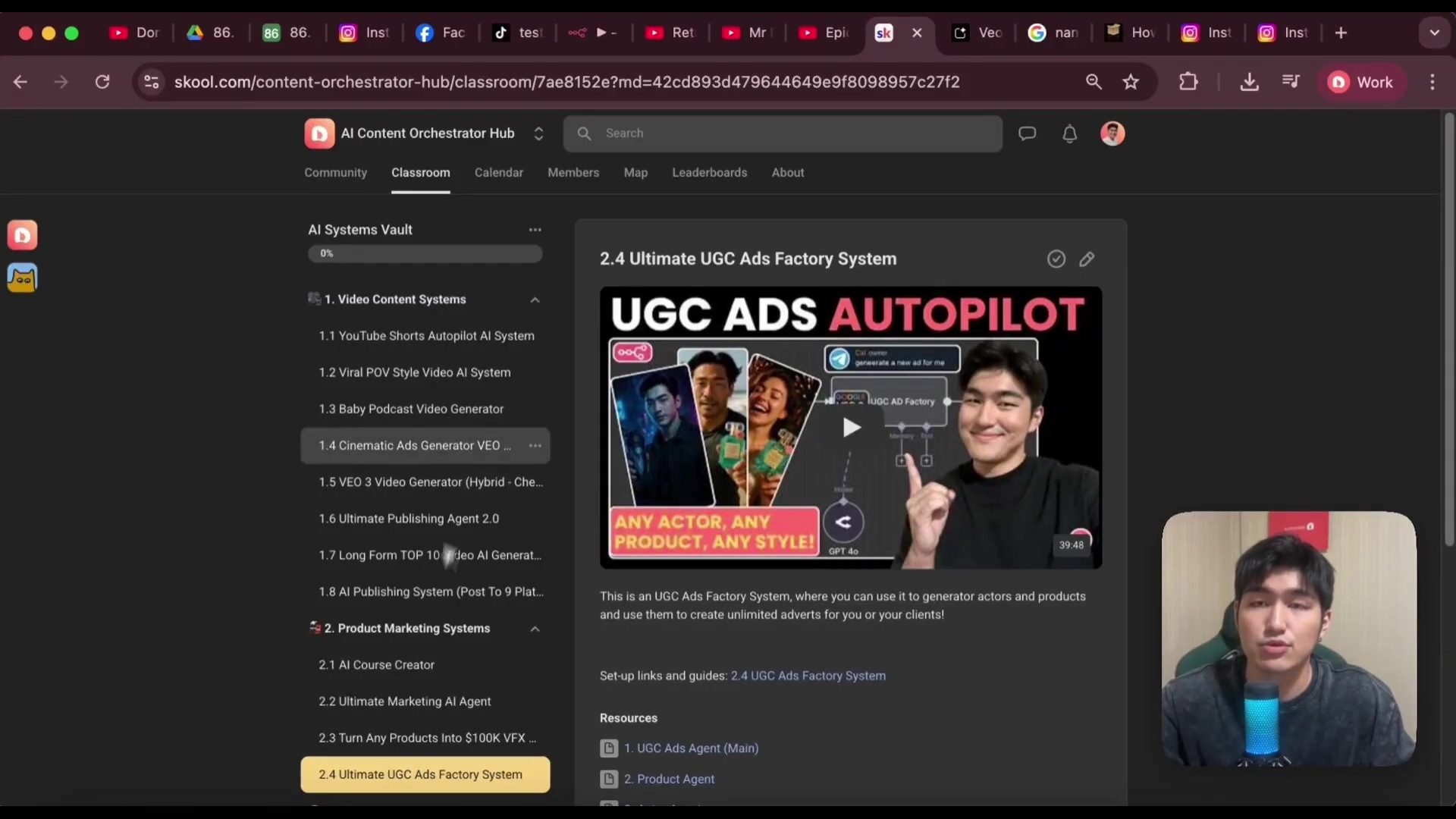

This ‘AI Systems Vault’ interface highlights the availability of pre-built blueprints and specialized systems, encouraging users to explore advanced solutions and community resources for scaling their AI content strategy.

Frequently Asked Questions (FAQ)

Building and operating an AI-powered video content machine can raise several questions, especially for those new to AI automation. Here, we address some of the most common inquiries to provide clarity and confidence.

Q: What are the primary costs associated with running this AI video system?

A: The costs are primarily usage-based for the AI models and fixed for certain services. Per video generation, the cost can be as low as 40 cents, depending on the number of scenes and the specific models used. Fixed costs typically include subscriptions for n8n (if self-hosting is not preferred), Airtable (for advanced features), and publishing tools like Blato. These costs are significantly lower than traditional video production.

This image shows the scene generation details that drive the per-video cost: each scene needs its own image and its own video clip.

Q: How long does it take to generate a video using this system?

A: A typical 30-second video with 5-6 scenes can be generated in approximately 10-15 minutes from the moment you initiate the workflow in Airtable to the final rendered video being available. This includes time for scene generation, image synthesis, individual video clip generation, and final rendering.

Q: Can I use my own characters or existing brand assets?

A: Absolutely. The system is designed for flexibility. You can upload your own character images to the Airtable database, and the AI image generation models will use them as a consistent reference point across all scenes, even adapting them to different visual styles (e.g., turning a realistic character into an anime version).

Q: How consistent are the AI-generated characters and visual styles?

A: Character consistency is a core strength of this system. By providing a base image and detailed descriptions, the AI models are prompted to maintain the character’s appearance across various scenes and styles. The ‘Visual Style’ parameter ensures that the overall aesthetic (e.g., anime, cartoon, realistic) remains uniform throughout the video.

Q: What level of technical expertise is required to set this up?

A: While the blueprint is designed for plug-and-play functionality, a basic understanding of workflow automation (n8n), database management (Airtable), and API concepts is beneficial. However, comprehensive guides and community support are available to assist users at all technical levels.

Q: Can I customize the AI models or integrate new ones?

A: Yes, the modular design of the n8n workflow allows for easy integration of new AI models. Since services like Key AI and OpenRouter aggregate access to many models, you can often switch between them by simply updating credentials or model names within the n8n nodes. This ensures your system can always leverage the latest advancements in AI.

Q: How does the system handle errors during generation?

A: The workflow incorporates robust error-handling mechanisms, including ‘wait’ nodes and conditional logic. For instance, if an image or video generation task fails, the system is configured to re-attempt the generation, re-prompt the AI agent, or re-check the status, minimizing interruptions and ensuring successful output.

The Future of Content: Embrace AI for Unprecedented Growth

The era of labor-intensive, costly video production is rapidly drawing to a close. We stand at the precipice of a content revolution, where artificial intelligence is not merely an assistant but a transformative engine, capable of generating an endless stream of high-quality, viral-ready videos. The AI-powered faceless video content machine is more than just a technological marvel; it’s a strategic imperative for anyone looking to thrive in the competitive digital landscape. By automating every facet of content creation, from conceptualization to multi-platform publishing, this system liberates creators and businesses from the constraints of traditional production, offering unparalleled speed, scalability, and cost-efficiency. Imagine the possibilities: consistently engaging your audience with fresh content daily, experimenting with diverse narratives without financial risk, and building a powerful brand presence across every major social media channel. This isn’t just about making videos faster; it’s about unlocking a new dimension of creative freedom and market responsiveness. As AI models continue to evolve at an astonishing pace, the capabilities of these systems will only expand, offering even more sophisticated visuals, nuanced storytelling, and hyper-personalized content. The future of content is intelligent, automated, and boundless. The time to embrace this future is now. Don’t be left behind in the wake of this digital transformation. Start building your AI content machine today, and position yourself for unprecedented growth and influence in the digital realm.

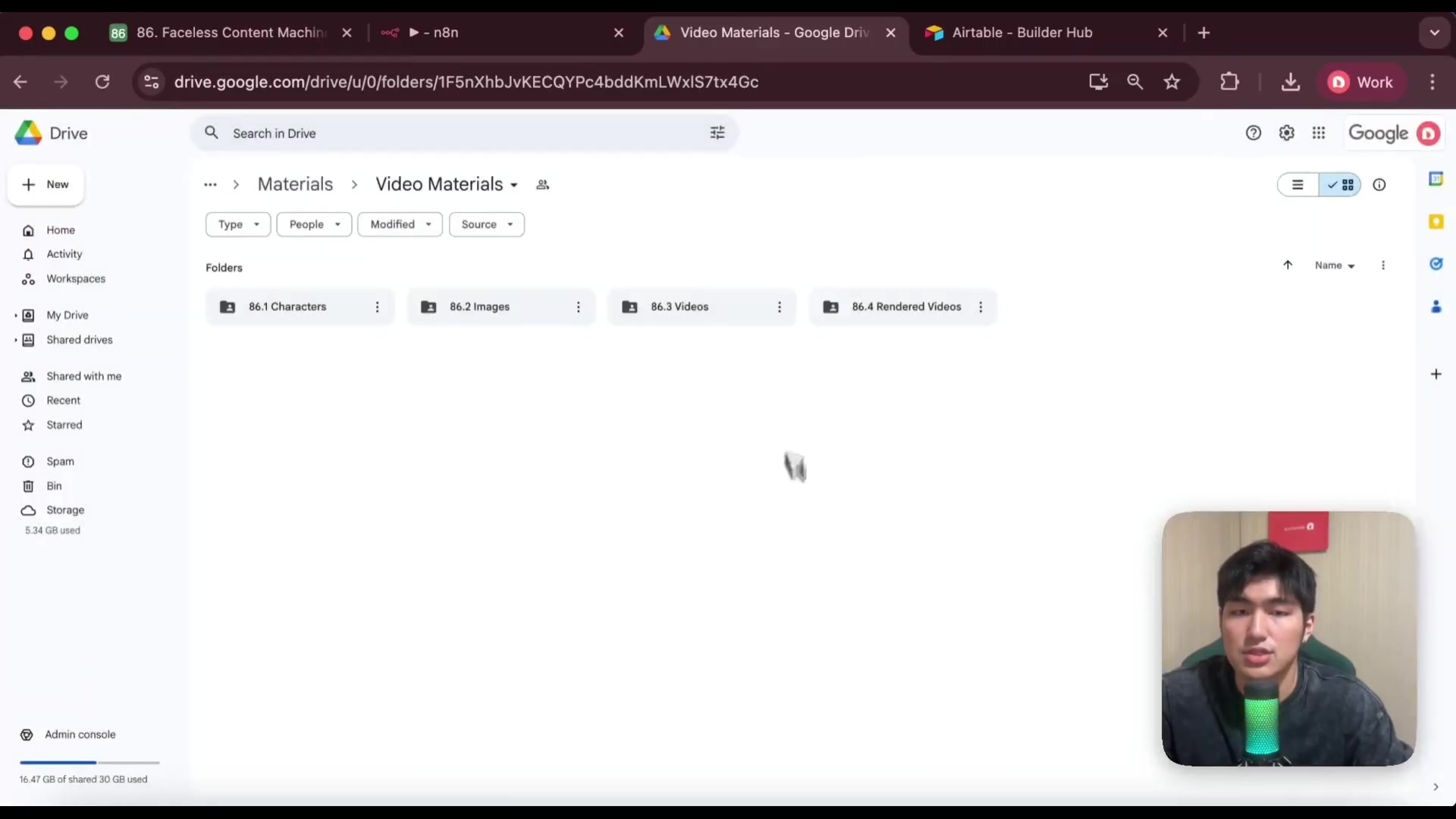

This Google Drive folder structure illustrates the organized output and storage of the AI content machine, emphasizing the systematic and scalable nature of the content generation process, which is key to future growth.

We’ve journeyed through the intricate architecture of an AI-powered faceless video content machine, from its foundational components to its advanced publishing capabilities. The core takeaway is clear: this system offers an unparalleled advantage in content creation, dramatically reducing costs, accelerating production, and ensuring consistent, high-quality output. By leveraging n8n, Airtable, and cutting-edge AI models, you can transform your content strategy, moving from sporadic, resource-intensive efforts to a continuous, automated flow of viral-ready videos. The true power of this system lies in its modularity and scalability, allowing you to adapt to evolving trends and integrate new AI advancements seamlessly. This isn’t just about automating tasks; it’s about fundamentally rethinking how content is created and distributed. To fully harness this potential, I strongly encourage you to explore the provided blueprint and join a community of innovators. Accessing the plug-and-play system, along with expert guidance and ongoing updates, will empower you to implement these strategies effectively. The future of content is here, driven by AI. Take the decisive step to build your own AI content powerhouse, and unlock infinite possibilities for engagement and growth across all your digital platforms.