Watch the Video Tutorial

💡 Pro Tip: After watching the video, continue reading below for detailed step-by-step instructions, code examples, and additional tips that will help you implement this successfully.

Table of Contents

- Introduction: Automating LinkedIn Profile Discovery

- Required Resources and Cost-Benefit Analysis

- Building the Google Scrape Tool Workflow

- Building the AI Agent Workflow

- Testing the AI Agent

- Limitations and Advanced Considerations

- Critical Safety and Best Practice Tips

- Key Takeaways

- Conclusion

- Frequently Asked Questions (FAQ)

  - Q: Why use n8n for this project instead of just writing a Python script?

  - Q: What happens if Google blocks my IP address due to too many requests?

  - Q: Can I use a different AI model instead of OpenAI’s GPT models?

  - Q: How can I handle more than 10 search results per query?

  - Q: Is it possible to scrape other social media platforms like Facebook or Instagram using a similar method?

Introduction: Automating LinkedIn Profile Discovery

In our data-obsessed world, finding specific info quickly is like having a superpower. This guide is all about building an AI agent that can scrape Google for LinkedIn profile URLs. Trust me, trying to do this manually is like trying to empty a swimming pool with a teaspoon – it’s going to take forever! By the time we’re done, you’ll not only have a working Google scraping AI agent in n8n, but you’ll also understand how it can tap into different tools to collect and process data. Pretty neat, right?

What You’ll Achieve

Ever needed to find, say, all the CEOs in real estate in Chicago? Or maybe founders in tech in San Francisco? This AI agent is your secret weapon. It can perform these super-targeted searches, grab the matching LinkedIn profile URLs, and then neatly pop them into a Google Sheet for you. That means way less manual grunt work and a much faster route to a usable prospect list. Imagine the possibilities!

Required Resources and Cost-Benefit Analysis

Alright, every good mission needs the right gear. To build our Google scraping AI agent, we’ll need a few key platforms and services. Our main players are n8n for the workflow magic, OpenAI for the AI brains, and Google Sheets for storing our precious data.

Essential Tools and Materials

| Resource/Tool | Purpose | Notes |
|---|---|---|
| n8n | Workflow automation platform | This is our mission control, where we’ll build and manage all our automation workflows. |
| OpenAI API | AI agent capabilities (GPT models) | This is where the AI smarts come from. You’ll need an OpenAI account and an API key to let our AI agent think and interact. |
| Google Sheets | Data storage for scraped URLs | Our trusty database! We’ll use this to neatly store all the LinkedIn profile URLs we collect. |
| ChatGPT (Optional) | Assistant for code and configuration help | Think of ChatGPT as your super-smart co-pilot. It’s incredibly useful for getting code snippets or understanding tricky setups. |

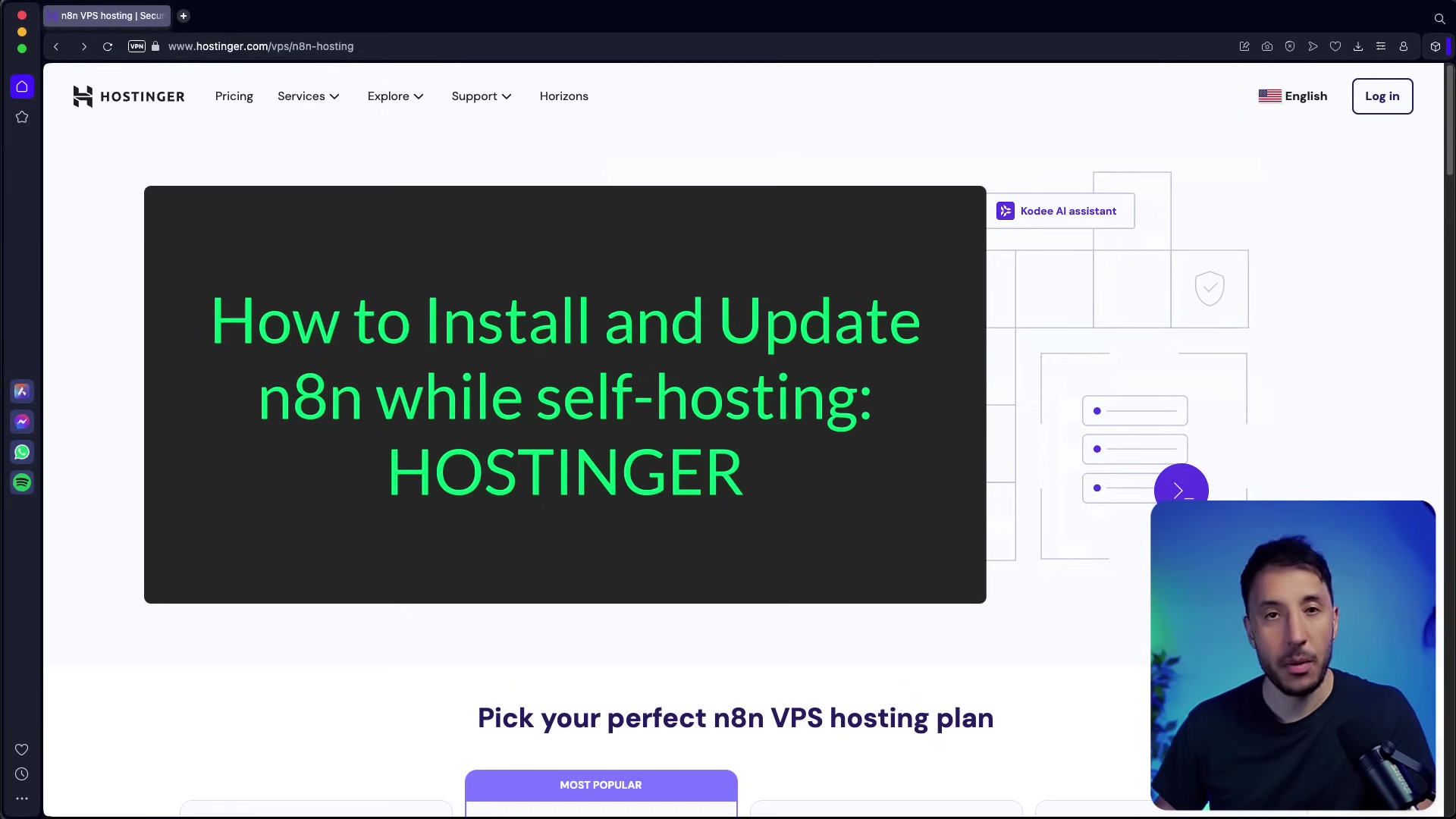

DIY vs. Commercial Solutions: A Cost-Benefit Comparison

Now, you might be thinking, “Boyce, why build it myself when there are ready-made tools?” Great question! Building your own AI agent gives you a huge leg up in terms of cost and customization compared to those off-the-shelf commercial options. Sure, commercial tools like ZoomInfo or Apollo.io might seem like a quick fix, but they often hit you with recurring subscription fees and way less flexibility. With our DIY approach, you’re the boss!

| Feature/Aspect | DIY Solution (n8n + OpenAI) | Commercial Data Platform (e.g., ZoomInfo, Apollo.io) |
|---|---|---|
| Initial Setup | Requires manual configuration | Often quick setup, but may involve onboarding fees |
| Cost | Pay-per-use (OpenAI API), n8n hosting | Monthly/Annual subscriptions, often tiered |
| Customization | Highly customizable workflows | Limited to the platform’s predefined features |
| Scalability | Scales with n8n/OpenAI usage, but direct Google scraping is capped by anti-bot measures (see Limitations) | Scales with subscription tier |
| Data Ownership | Full control over data | Data often resides on the vendor’s servers |
| Learning Curve | Moderate (workflow automation concepts) | Low to moderate (user-friendly interfaces) |
| Maintenance | Self-managed | Vendor-managed |

Building the Google Scrape Tool Workflow

Alright, let’s get our hands dirty! This is the first of two main workflows we’ll be building. Its whole job is to actively scrape Google for those LinkedIn URLs based on whatever criteria we throw at it. Think of this as the engine of our data-gathering machine.

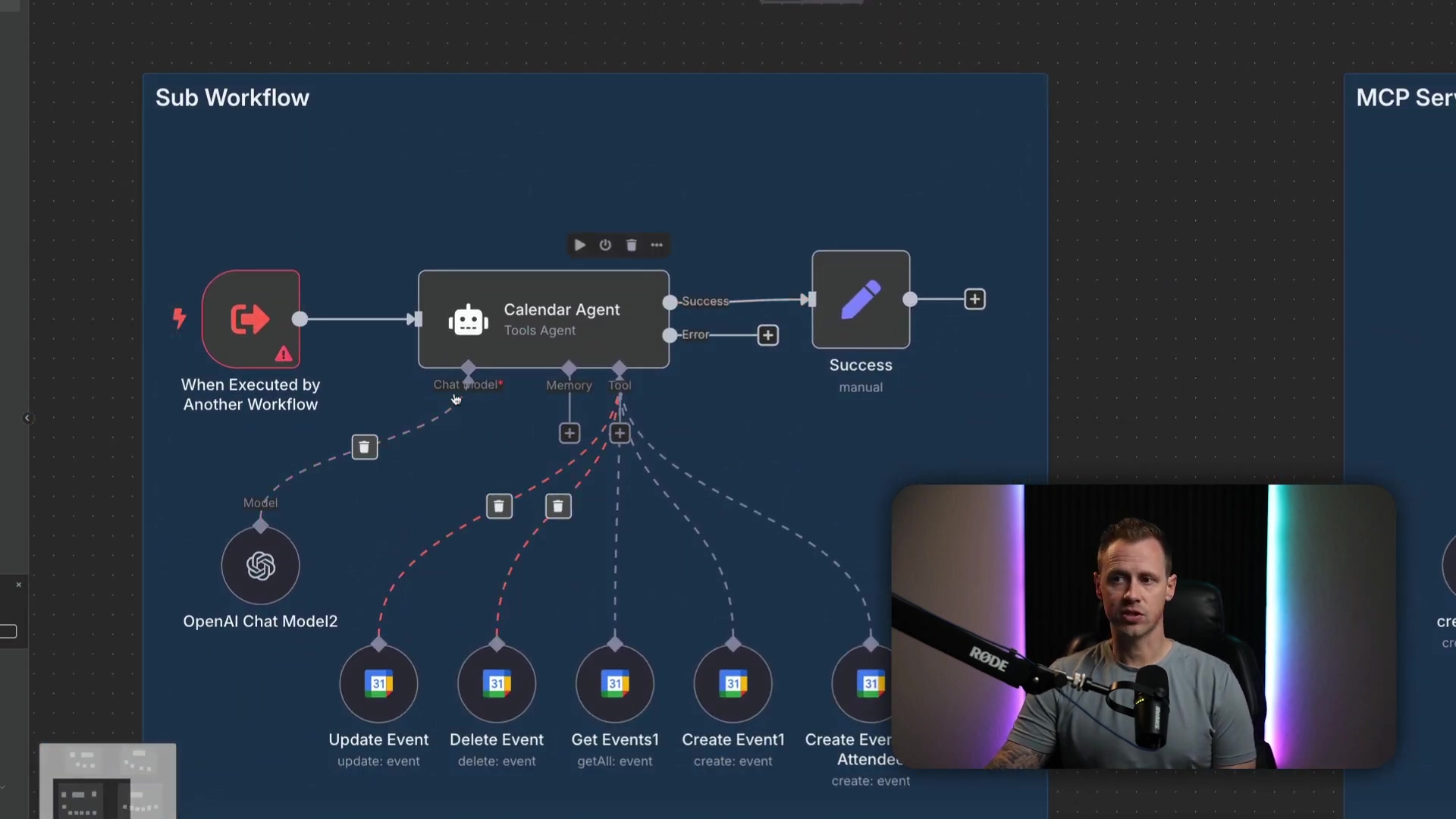

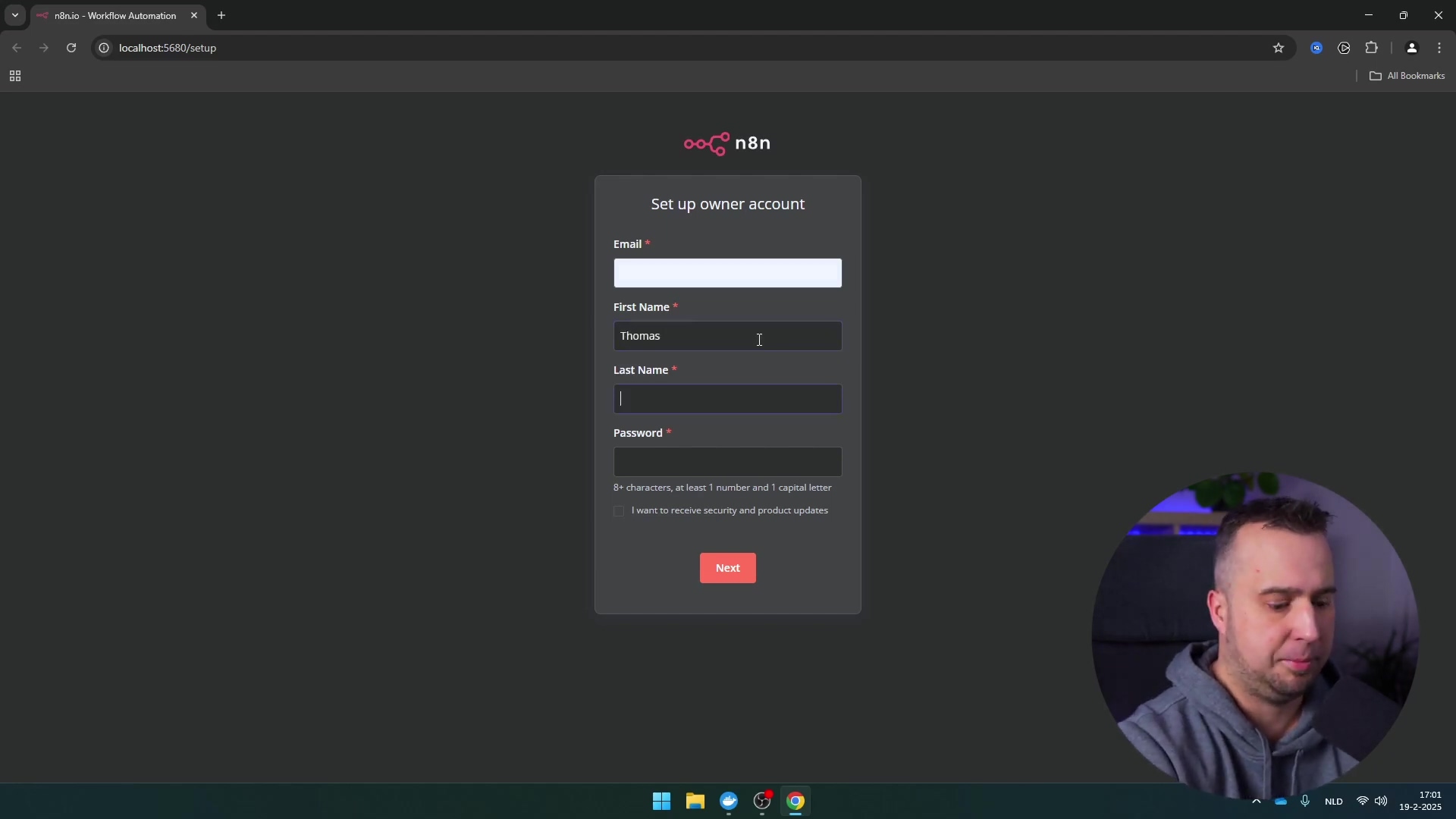

Workflow Trigger: When Called by Another Workflow

The very first step in this workflow is a “When called by another workflow” trigger. What does that mean? It means this workflow isn’t going to just run on its own. It’s like a specialized tool that only gets activated when our main AI agent workflow (which we’ll build next) gives it a shout. This setup is super handy because it makes our workflows modular and reusable – like building with LEGOs!

When you’re setting up this node, you’ll define the input our AI agent will use to search Google: a `query` that carries three parameters, `jobTitle`, `companyIndustry`, and `location`. For testing purposes, you can initially hardcode values like “CEO”, “real estate”, and “Chicago” right into the node. This helps you make sure everything’s wired up correctly before we make it dynamic. Once you’ve confirmed it’s working, remember to remove these hardcoded values so your agent can take dynamic inputs!

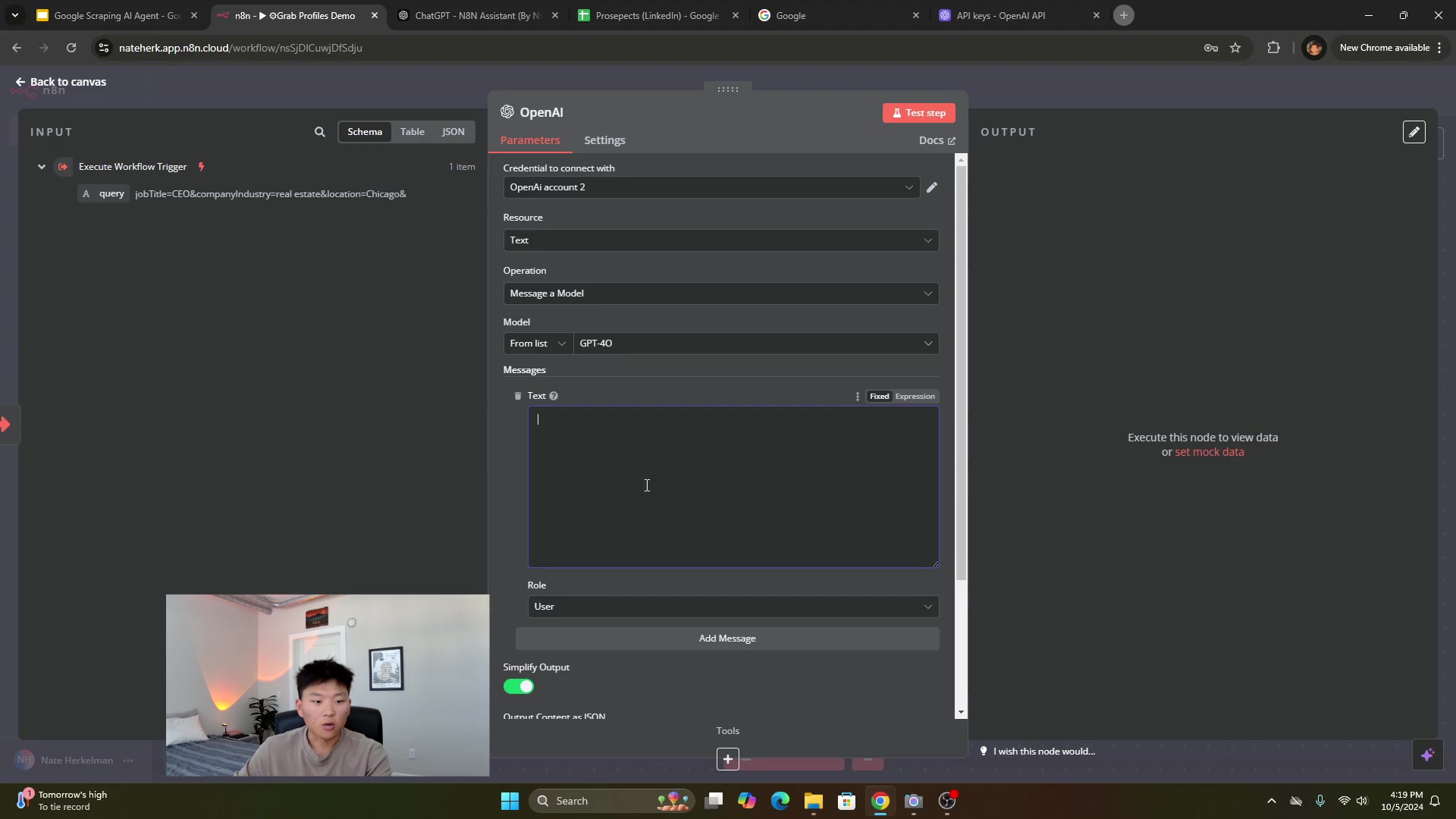

Integrating OpenAI for Query Parsing

Next up, connect an OpenAI node to your workflow. This node is the brain that will take our natural language queries (like “find CEOs in real estate”) and turn them into structured parameters (like `jobTitle: CEO`, `companyIndustry: real estate`) that we can drop into the Google search query. If you haven’t already, make sure you’ve set up your OpenAI account and grabbed your API key. Then, you’ll need to create a new credential in n8n to link them up. It’s like giving n8n the keys to OpenAI’s super-smart brain!

Here’s how to configure the OpenAI node:

- Model: Choose a capable GPT model such as `GPT-4o`. A stronger model helps keep the parsing consistent and accurate.

- System Prompt: This is where you tell the AI what its job is. Instruct the model to parse the JSON query it receives and output the three parameters (job title, company industry, location) separately. For example, you might tell it: “You are a helpful assistant that extracts job title, company industry, and location from a user query and returns them in a JSON object. If a field is not present, return null.”

- User Message: This is the actual query the AI will process. Drag the `query` input from the workflow trigger (the “When called by another workflow” node) into this field. This makes sure the AI processes the dynamic query coming from our agent, not a static piece of text.

- Output Content: Set this to `JSON`. We want structured data back, not just plain text, so it’s easy for n8n to work with.
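If you want a safety check between the OpenAI node and the HTTP Request node, a Code node can normalize the model’s reply before it reaches the search. This is a minimal sketch, assuming the model uses the keys `jobTitle`, `companyIndustry`, and `location` (matching the trigger’s parameters) and that the reply arrives either as a JSON string or as an already-parsed object. The function name `normalizeSearchParams` is hypothetical, and the `json.message.content` path in the usage comment is an assumption to check against your OpenAI node’s output panel.

```javascript
// Hypothetical helper: normalize the OpenAI node's JSON reply into the three
// search parameters. Assumes keys jobTitle, companyIndustry and location.
function normalizeSearchParams(content) {
  // The reply may arrive as a JSON string or as an already-parsed object.
  let parsed = content;
  if (typeof content === "string") {
    try {
      parsed = JSON.parse(content);
    } catch (err) {
      throw new Error(`Model reply is not valid JSON: ${err.message}`);
    }
  }
  if (parsed === null || typeof parsed !== "object") {
    throw new Error("Model reply must be a JSON object");
  }

  // Trim strings and turn empty or missing values into null, mirroring the
  // system prompt's "return null if a field is not present" instruction.
  const clean = (value) =>
    typeof value === "string" && value.trim() !== "" ? value.trim() : null;

  return {
    jobTitle: clean(parsed.jobTitle),
    companyIndustry: clean(parsed.companyIndustry),
    location: clean(parsed.location),
  };
}

// Example use in an n8n Code node (assumed output path, verify in your node):
// return $input.all().map((item) => ({
//   json: normalizeSearchParams(item.json.message.content),
// }));
```

Returning `null` rather than an empty string makes it easy to skip missing fields when the search query is assembled later.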

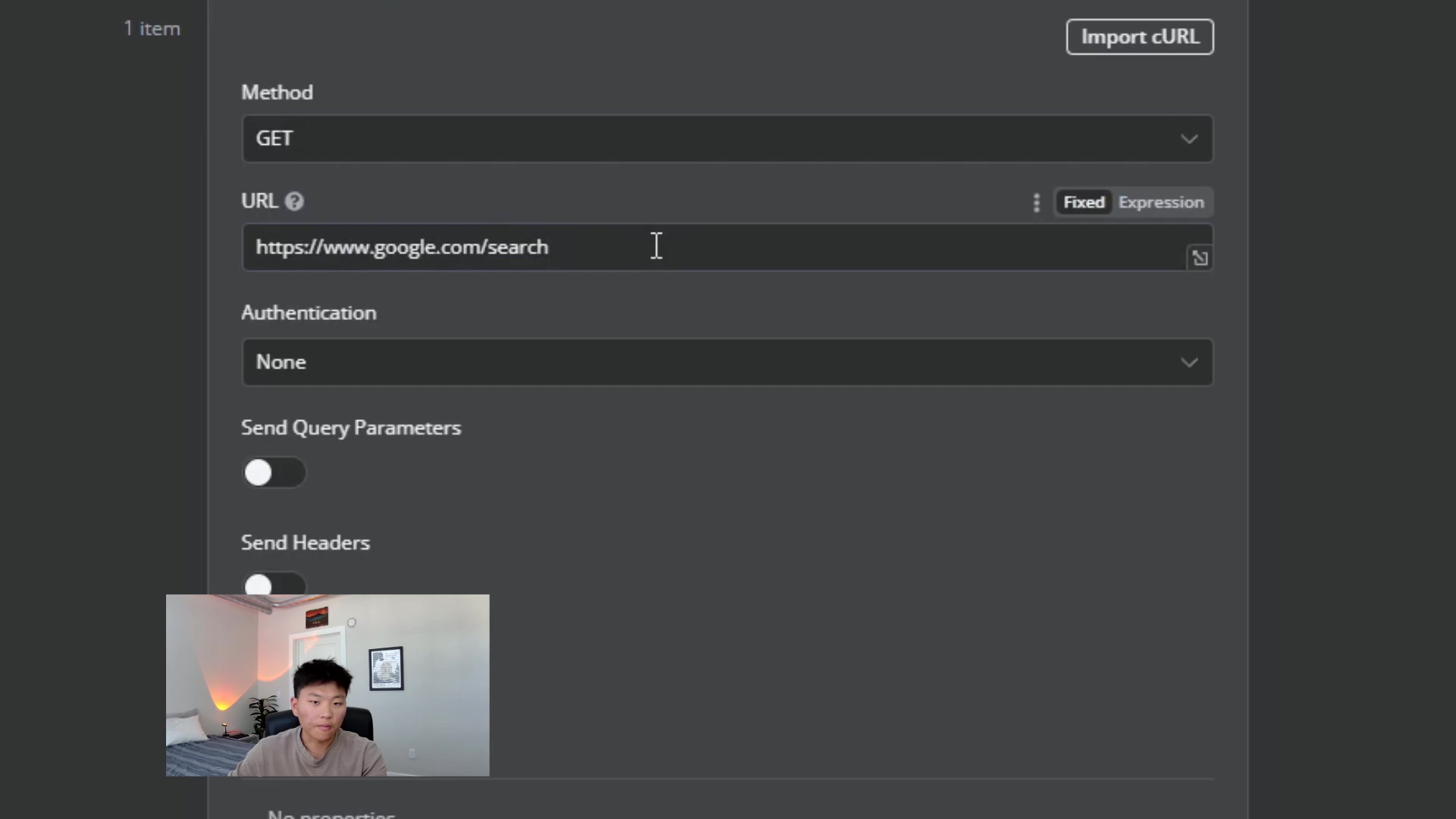

Configuring the HTTP Request to Google

Now for the real action! Add an HTTP Request node to your workflow. This node is what will actually go out and perform the Google search. It’s like sending a scout out into the internet wilderness!

Here are the key configurations:

- Method: Set this to `GET`. Why `GET`? Because we’re simply requesting information from Google, not sending data to change anything.

- URL: This is where we tell it where to search: `https://www.google.com/search`. Simple enough!

- Parameters: This is crucial! Add a query parameter named `q`. This is the standard query parameter Google uses. Its value should combine the LinkedIn site search operator (`site:linkedin.com/in/`) with the dynamic variables we got from the OpenAI node, separated by spaces. For example: `site:linkedin.com/in/ {{$json.jobTitle}} {{$json.companyIndustry}} {{$json.location}}`. This tells Google: “Hey, only show me results from `linkedin.com/in/` that also contain the job title, industry, and location I’m giving you!” Super powerful, right? Keep the space after `site:linkedin.com/in/`; without it, the job title becomes part of the URL path filter instead of a search term. The exact expression path depends on how your OpenAI node structures its output, so drag the fields in from that node’s output panel rather than typing them.

- Headers: This is a sneaky but super important part! Add a header named `User-Agent` with a value like `Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36`. Why do we do this? Many websites, including Google, try to block automated requests (like ours!) if they don’t look like they’re coming from a real web browser. This `User-Agent` header makes our request look like it comes from a regular browser, although it won’t get past every anti-bot check. If you’re ever unsure about the correct `User-Agent` to use, you can always ask an AI assistant like ChatGPT (especially if you find an n8n assistant GPT!) for the appropriate header configuration. They’re super helpful for these kinds of details!
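To see exactly what the HTTP Request node sends, here is a minimal sketch that builds the same request outside n8n. It assumes the three parameter names from the trigger (`jobTitle`, `companyIndustry`, `location`); `buildGoogleSearchRequest` is a hypothetical helper, and the `User-Agent` string is just the example value from the text. Null or empty fields are skipped so the literal word “null” never ends up in the query.

```javascript
// Hypothetical helper mirroring the HTTP Request node configuration:
// GET https://www.google.com/search?q=site:linkedin.com/in/ <terms>
const USER_AGENT =
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 " +
  "(KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36";

function buildGoogleSearchRequest({ jobTitle, companyIndustry, location }) {
  // Keep only the parameters the model actually filled in.
  const terms = [jobTitle, companyIndustry, location].filter(
    (t) => typeof t === "string" && t.trim() !== ""
  );
  // The space after the site: operator keeps the job title a search term
  // rather than part of the URL path filter.
  const q = ["site:linkedin.com/in/", ...terms].join(" ");

  const url = new URL("https://www.google.com/search");
  url.searchParams.set("q", q); // URL-encodes spaces, colons and slashes

  return {
    method: "GET",
    url: url.toString(),
    headers: { "User-Agent": USER_AGENT },
    q,
  };
}
```

Inside n8n you don’t need this code, since the node assembles the same URL from the `q` parameter; the sketch is mainly handy for checking what a given set of inputs turns into.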

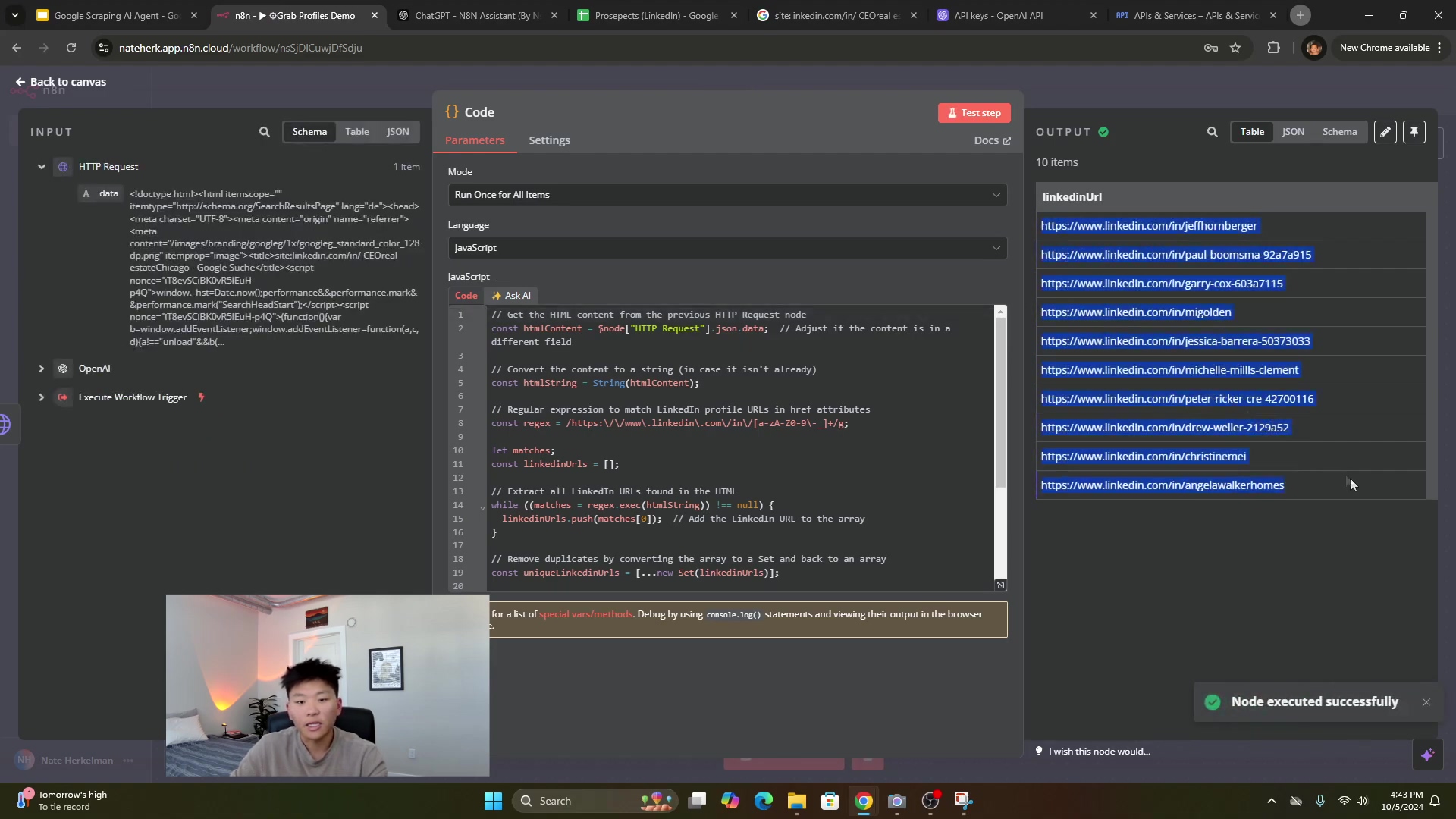

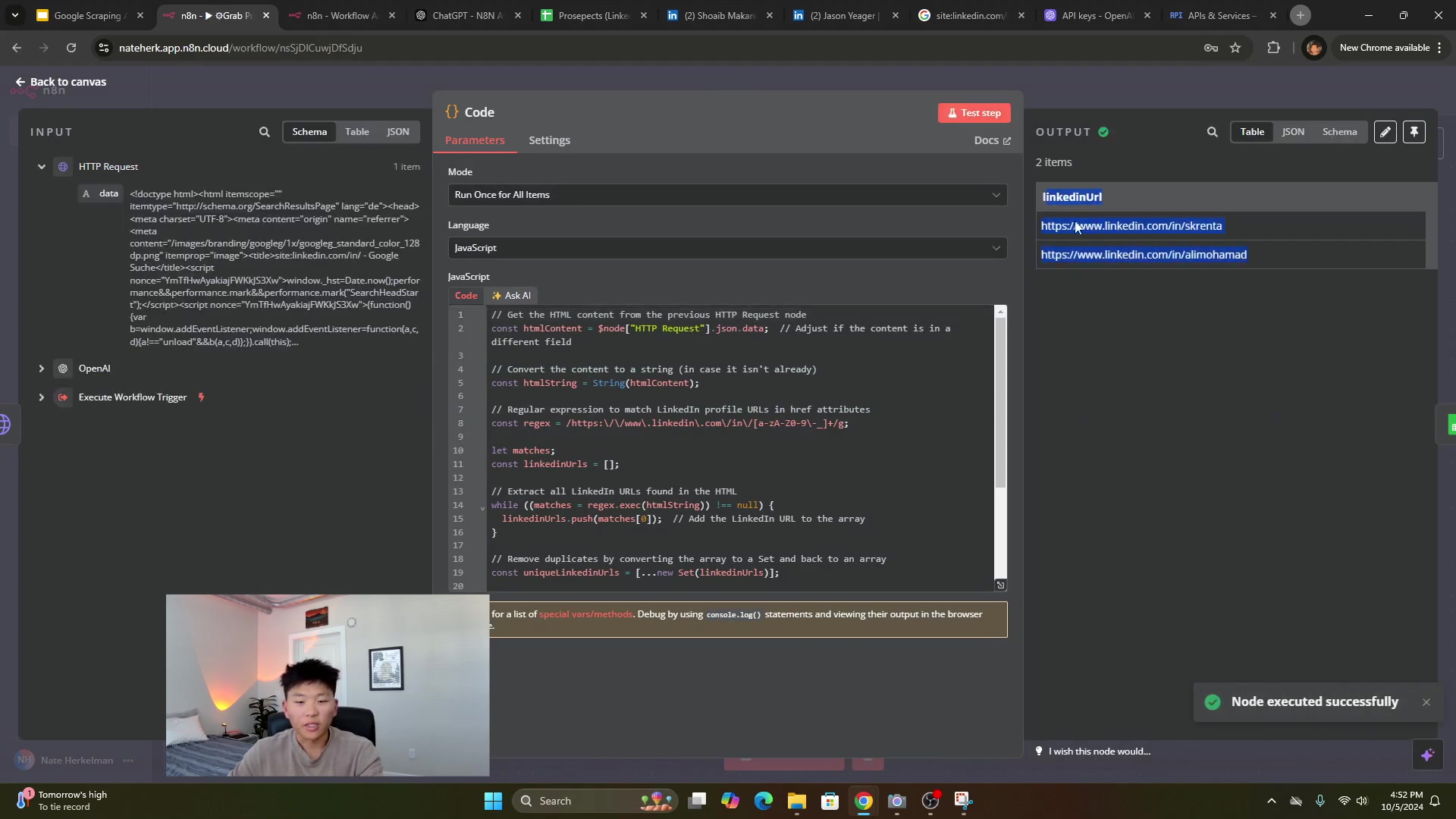

Parsing HTML with a Code Node

Okay, so our HTTP Request node just brought back a huge chunk of HTML – basically, the entire Google search results page. Now, we need to sift through that digital mess and pull out only the LinkedIn profile URLs. This is where a Code node comes in. Think of it as our data filter!

Writing complex parsing code can be a bit like trying to solve a Rubik’s Cube blindfolded. So, here’s a pro tip: leverage an AI assistant! Provide the AI with an example of the HTML output you get from the HTTP Request node (you can see this in n8n’s execution results) and ask it to generate JavaScript code to extract LinkedIn URLs using regular expressions. The assistant can also be your debugging buddy if you run into any errors with the code. It’s like having a coding mentor on demand!
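As a starting point before you ask an assistant, here is a minimal regex-based sketch of that extraction. It assumes profile links appear in the HTML either directly (`https://www.linkedin.com/in/...`, including country subdomains like `uk.`) or inside Google redirect links such as `/url?q=https://www.linkedin.com/in/...`, depending on which version of the results page Google serves. Google’s markup changes often, so treat the pattern as an assumption to verify against your own execution output. `extractLinkedInUrls` is a hypothetical name, and the `json.data` field in the usage comment assumes the HTTP Request node returned the page as text in its default output field.

```javascript
// Hypothetical helper: pull unique LinkedIn profile URLs out of a Google
// results page. Regex-based, so it depends on Google's current markup.
function extractLinkedInUrls(html) {
  if (typeof html !== "string") return [];

  // Matches https://www.linkedin.com/in/<slug>, https://linkedin.com/in/<slug>
  // and country subdomains such as https://uk.linkedin.com/in/<slug>.
  // The slug stops at characters like &, ", ? or / that end the link.
  const pattern =
    /https?:\/\/(?:[a-z]{2,3}\.)?linkedin\.com\/in\/([A-Za-z0-9\-_%]+)/gi;

  // Normalize to the www host so the same profile is only kept once;
  // a Set preserves first-seen order.
  const urls = new Set();
  for (const match of html.matchAll(pattern)) {
    urls.add(`https://www.linkedin.com/in/${match[1]}`);
  }
  return [...urls];
}

// Example use in an n8n Code node (assumed input field, verify in your run):
// const html = $input.first().json.data;
// return extractLinkedInUrls(html).map((linkedinUrl) => ({ json: { linkedinUrl } }));
```

Emitting one item per URL with a `linkedinUrl` field matches the field name the Google Sheets step maps from.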

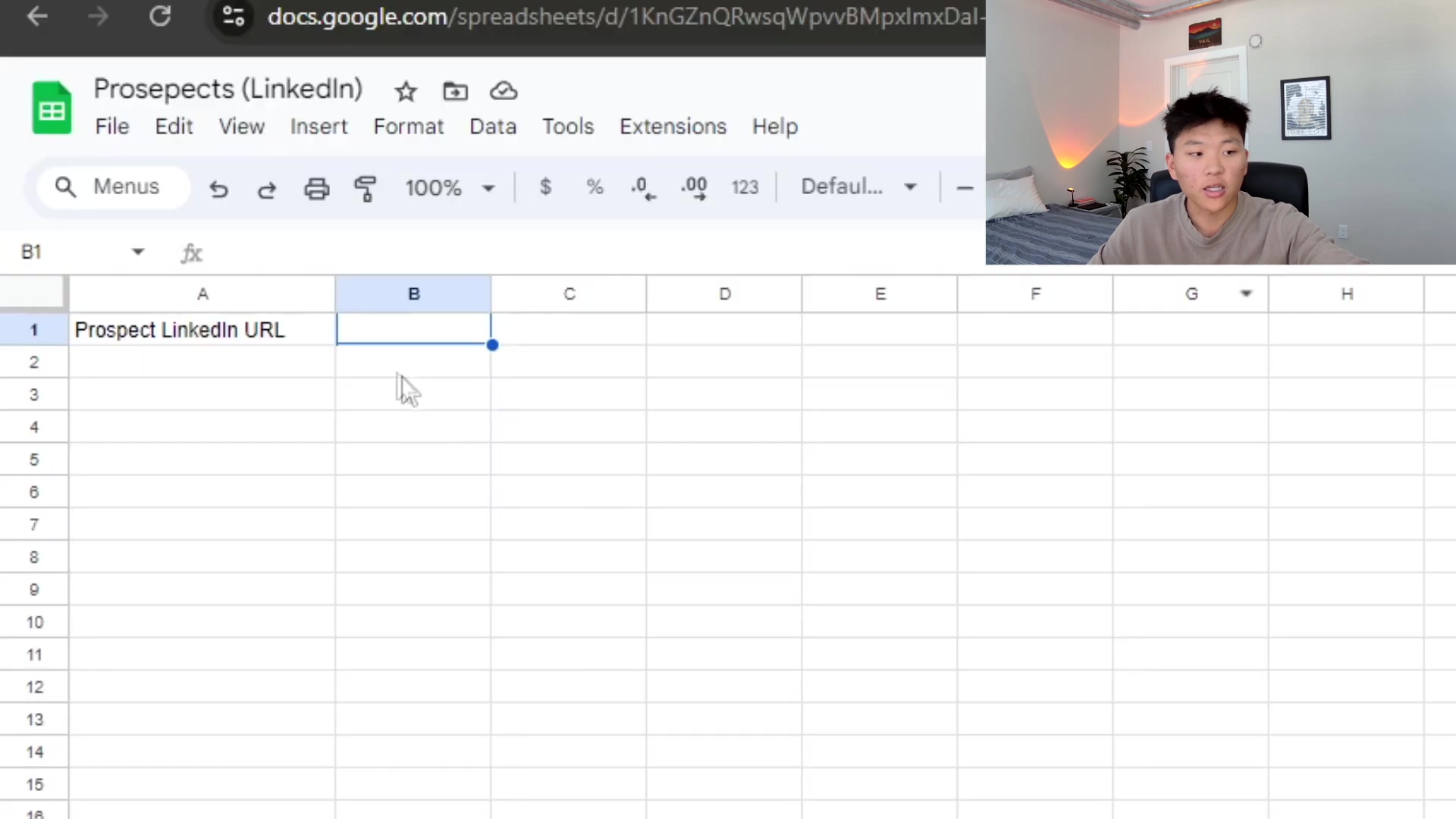

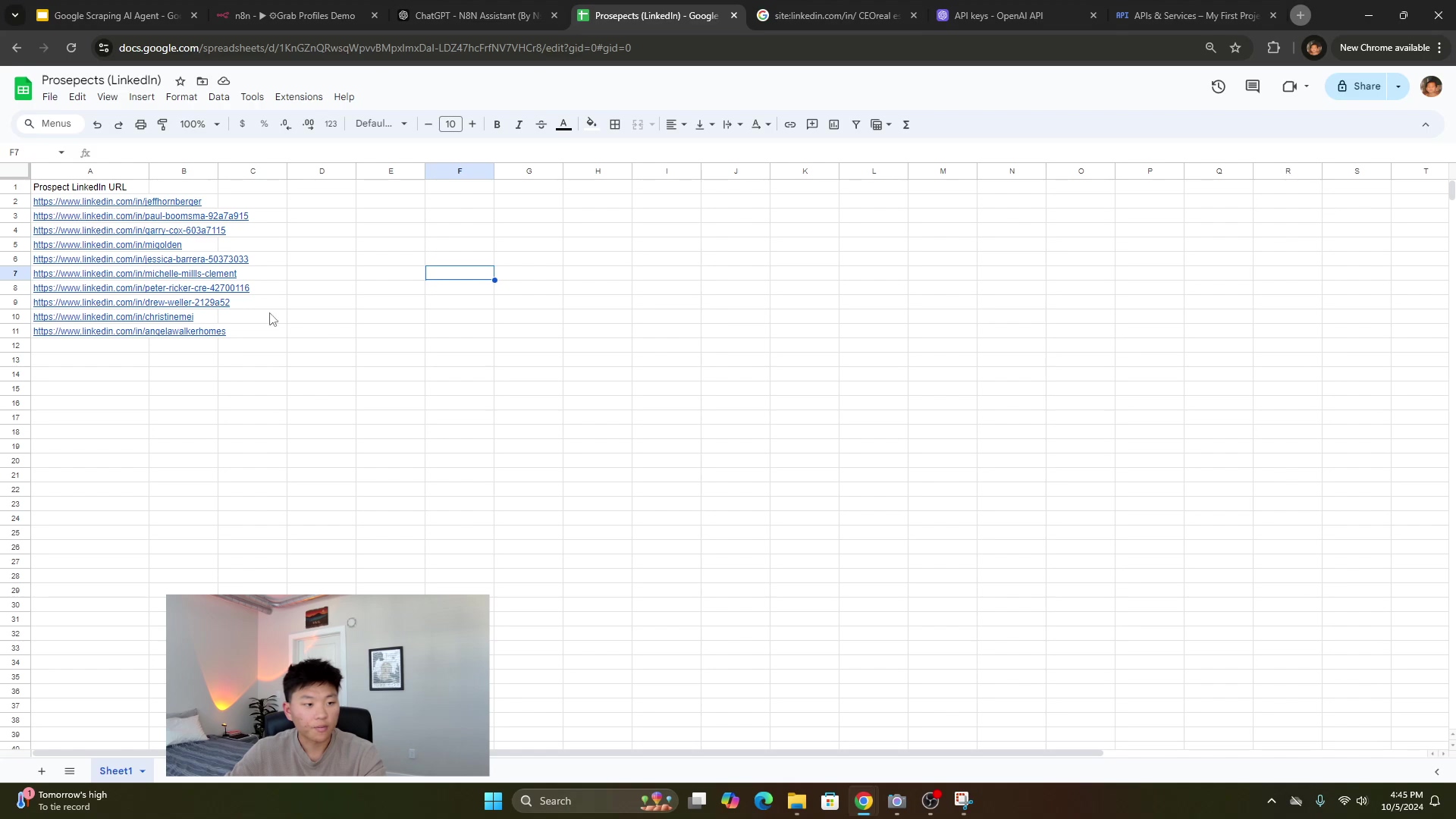

Storing Data in Google Sheets

We’ve scraped the URLs, now let’s put them somewhere useful! Connect a Google Sheets node to append those extracted LinkedIn URLs to your spreadsheet. This is where our collected data finally lands, safe and sound.

Here’s how to set it up:

- Operation: Choose “Append Row.” n8n appends one row per incoming item, so every time the workflow runs, each extracted URL lands in its own new row.

- Credentials: You’ll need to set up your Google Sheets credentials. n8n supports connecting either a Google account through OAuth2 or a Google Cloud service account. If you hit any snags here (and trust me, setting up OAuth can sometimes feel like navigating a maze!), n8n’s documentation provides super detailed, step-by-step guides for setting up OAuth consent screens and enabling the necessary APIs. Don’t be afraid to consult it!

- Spreadsheet: Select your target Google Sheet. For example, you might have one named “Prospects (LinkedIn)”.

- Sheet: If your spreadsheet has multiple tabs, choose the specific sheet within it where you want the data to go.

- Column Mapping: This is where you tell n8n which piece of data goes into which column. Manually map the `linkedinUrl` output from your Code node to the appropriate column in your Google Sheet (e.g., a column you’ve named “Prospect LinkedIn URL”). This ensures your data lands exactly where you want it.
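If you run similar searches more than once, the same profile can come back again. One option is a small Code node just before the Google Sheets node that drops duplicates and shapes each item around your column header. Here is a minimal sketch; it assumes the column is named “Prospect LinkedIn URL” (the example from the text), that you’ve already loaded the URLs currently in the sheet (for example with a separate Google Sheets read step), and that profile URLs can be compared case-insensitively. `toSheetRows` is a hypothetical helper.

```javascript
// Hypothetical helper: shape extracted URLs into rows for the "Append Row"
// step, one row per new URL, keyed by the sheet's column header.
const COLUMN = "Prospect LinkedIn URL"; // assumption: your header text

function toSheetRows(urls, existingUrls = []) {
  // Assumption: slugs are case-insensitive, and a trailing slash does not
  // make a different profile, so ".../in/jane/" equals ".../in/Jane".
  const key = (u) => u.trim().replace(/\/+$/, "").toLowerCase();
  const seen = new Set(existingUrls.map(key));

  const rows = [];
  for (const url of urls) {
    const k = key(url);
    if (seen.has(k)) continue; // already in the sheet or earlier in this batch
    seen.add(k);
    rows.push({ [COLUMN]: url.trim() });
  }
  return rows;
}

// In an n8n Code node you would wrap each row as an item, e.g.:
// return toSheetRows(urls, existing).map((row) => ({ json: row }));
```

Keying each row by the header text could also let you use the Sheets node’s automatic column mapping instead of mapping by hand.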

Finalizing the Tool Workflow

Almost there with our first workflow! Add a Set node at the very end of this workflow. Add a field named `response` and set its value to `done`. Why do this? This `response` field acts like a little signal flag, telling our calling AI agent that the scraping process is complete and it can move on. Before you hit save, double-check and remove any hardcoded test values you might have put in the initial workflow trigger. We want it to be dynamic and ready for action!

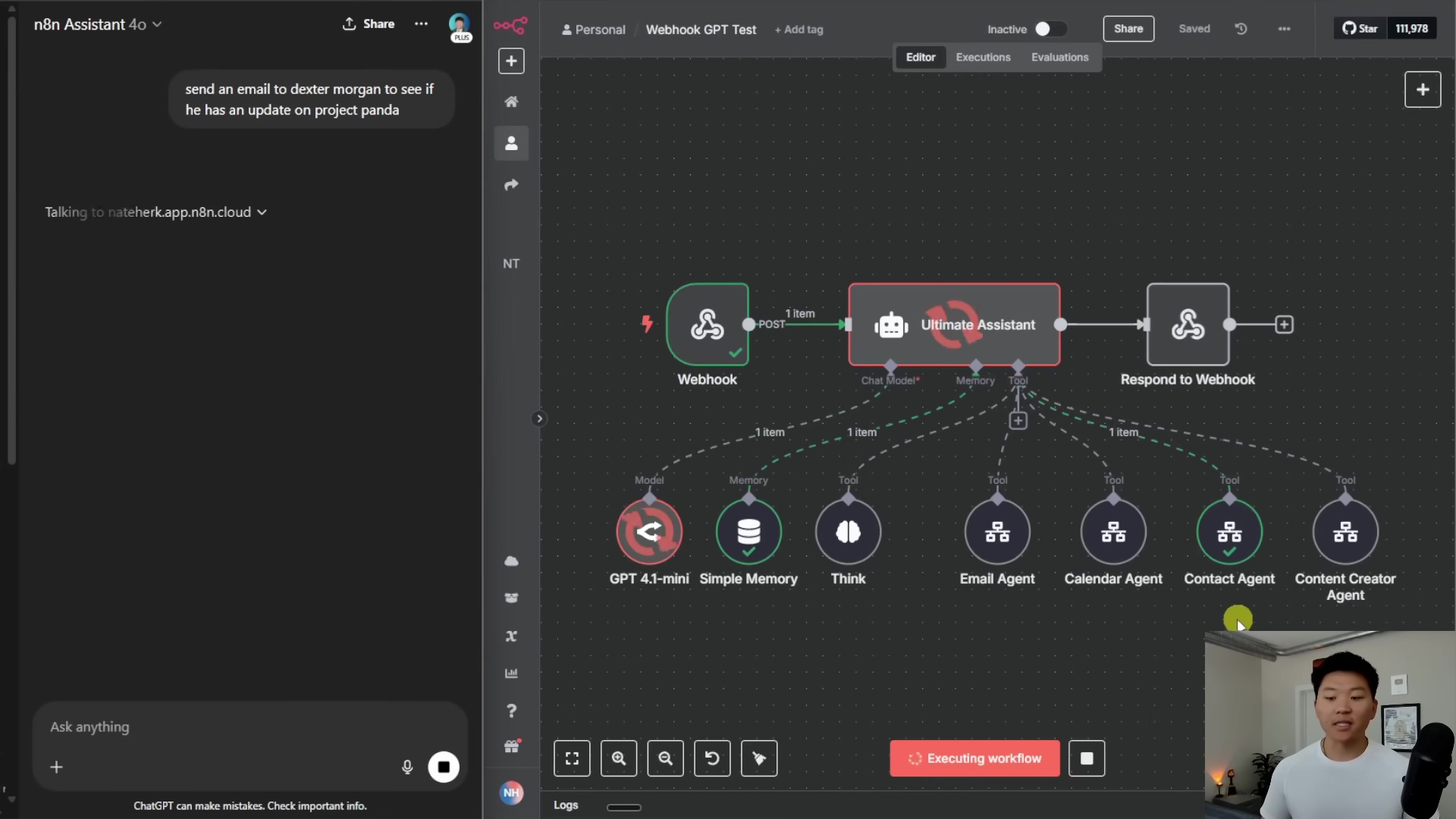

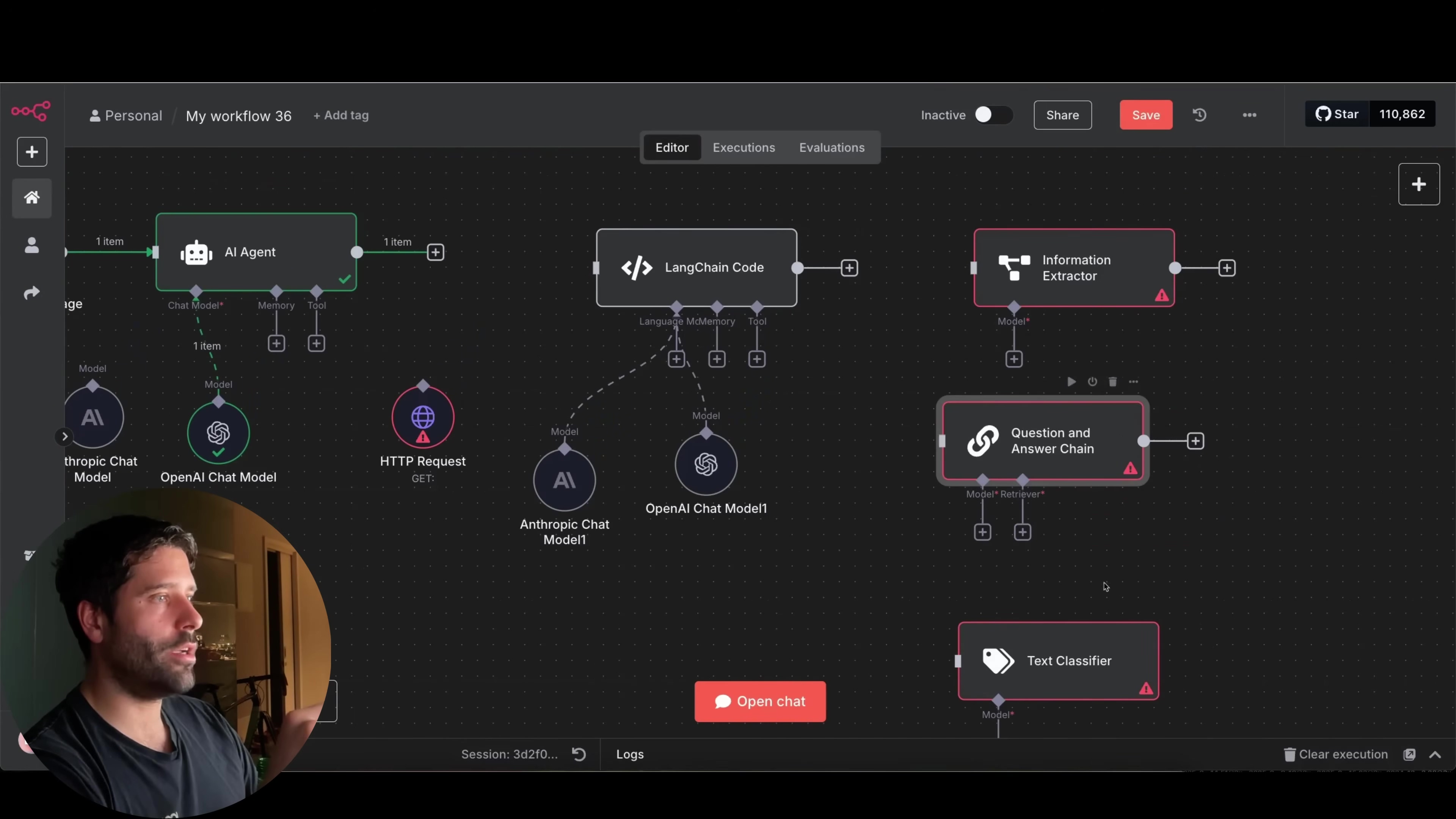

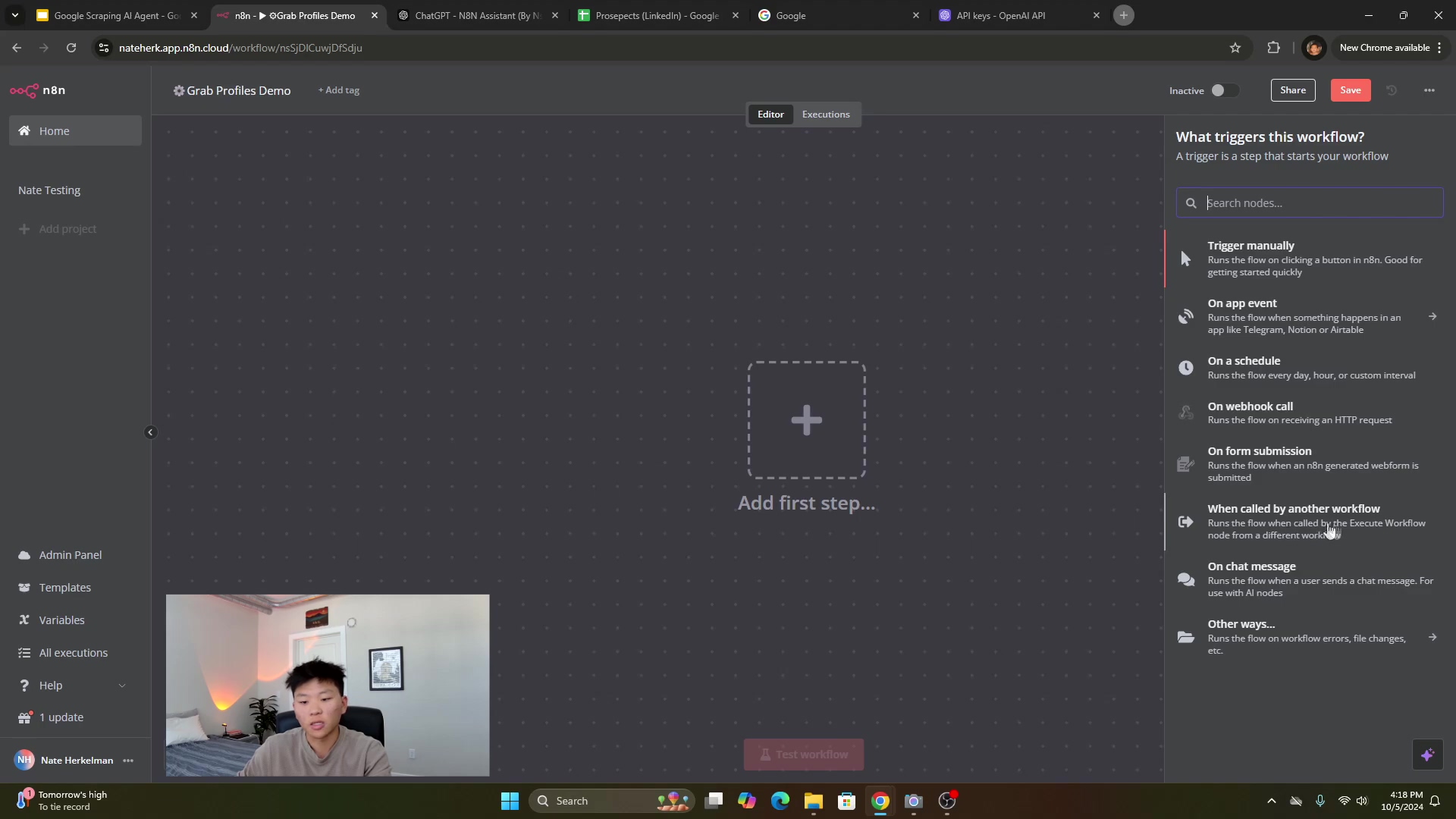

Building the AI Agent Workflow

Alright, now for the brains of the operation! This second workflow is a bit simpler and acts as our interactive AI agent. It’s the part that listens to your commands and then calls our Google Scrape Tool when needed. Think of it as the mission commander!

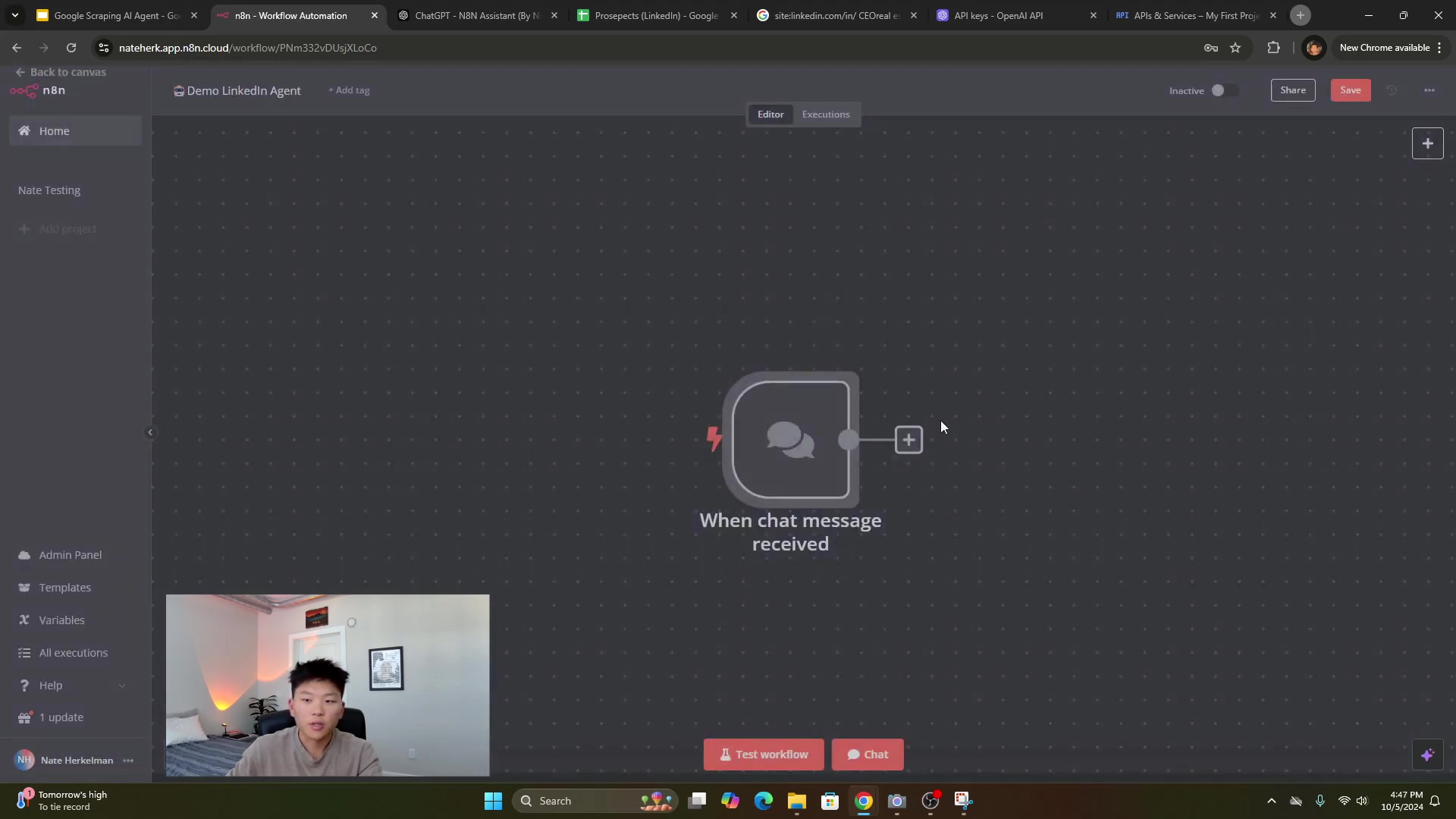

Agent Trigger: When Chat Message Received

To kick things off, start with a “When chat message received” trigger. This is what makes our AI agent interactive! It means the agent will spring into action whenever you send it a message, just like chatting with a friend (or a very smart robot friend!).

Configuring the AI Agent Node

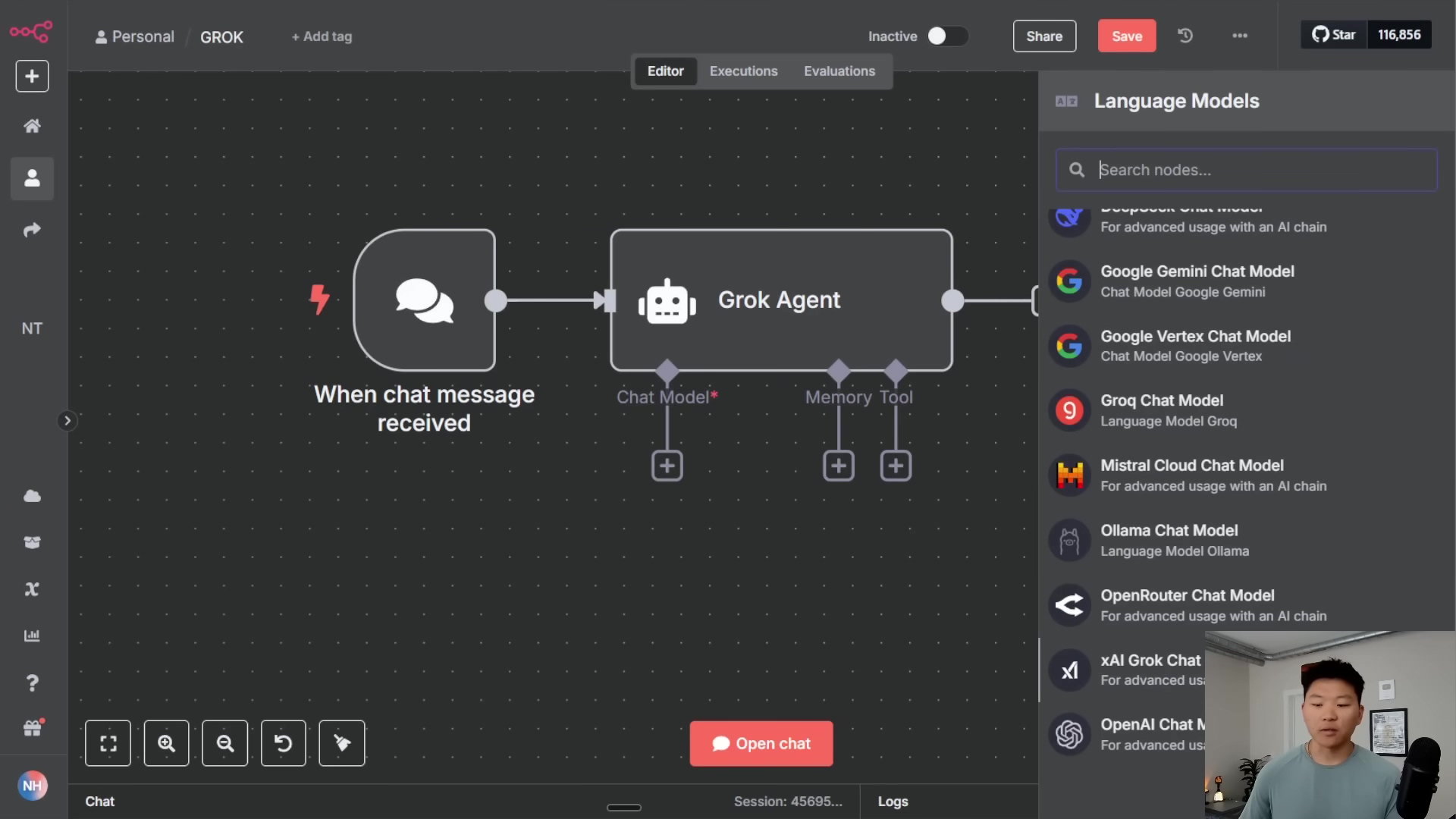

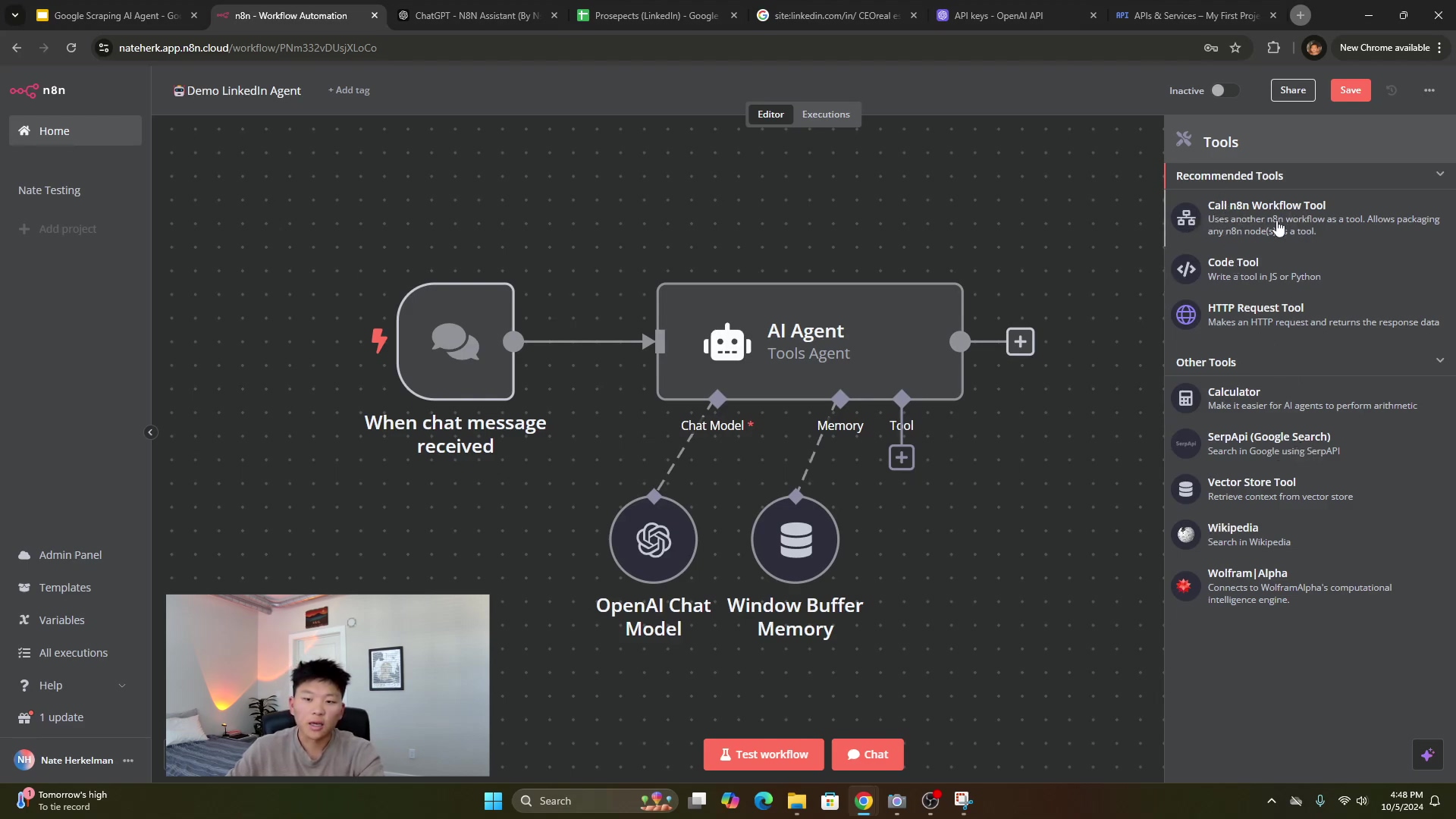

Next, add an “AI Agent” node. When prompted, select “Tools Agent” as its type. This type is crucial because it allows our agent to understand and utilize the tools we provide it – like our shiny new Google Scrape Tool!

Let’s configure this powerhouse:

- Chat Model (Brain): Connect an OpenAI Chat Model node. Use the same OpenAI credentials you set up earlier and select a capable GPT model (again, `GPT-4o` is a great choice). This is literally giving our agent its thinking power!

- Memory: Add a “Window Buffer Memory” node. This is super important for making our conversations with the AI agent feel natural. It lets the agent remember the most recent exchanges in the conversation, so it doesn’t forget what you just talked about. It’s like giving it a short-term memory!

- Tool Integration: This is where the magic happens! Add a “Tool” node and select “Call n8n Workflow Tool.” This is how we connect our main AI agent to the Google Scrape Tool workflow we just built. Configure it as follows:

  - Tool Name: Give it a descriptive name, something easy for the AI to understand, like `grabProfiles`. This is the internal name the AI will use to refer to this tool.

  - Description: Provide a clear, concise description of when this tool should be called. For example: “Call this tool to get LinkedIn profiles based on job title, industry, and location.” This description is what the AI agent reads to decide if it needs to use this tool.

  - Workflow: Select your “Grab Profiles Demo” workflow (or whatever you named your Google Scrape Tool workflow) from the dropdown list. This links the two workflows together.

  - Response Field: Specify `response` (remember that `done` value we set in the Set node of your tool workflow?). This tells the tool which field of the workflow’s output to hand back to the agent, so the agent receives `done` as its confirmation that the task finished.
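Conceptually, a tools agent shows the model each tool’s name and description, lets the model decide whether to call one, then runs that tool and passes back a single field of its output. The sketch below imitates that loop in plain JavaScript using the OpenAI function-calling tool format. It illustrates the idea under stated assumptions and is not how n8n implements the AI Agent node: `grabProfilesTool`, `dispatchToolCall`, and the single JSON-formatted `query` argument are hypothetical.

```javascript
// Illustration only: a tool described to a chat model in the OpenAI
// function-calling format. Name and description follow the text.
const grabProfilesTool = {
  type: "function",
  function: {
    name: "grabProfiles",
    description:
      "Call this tool to get LinkedIn profiles based on job title, industry, and location.",
    parameters: {
      type: "object",
      properties: {
        // Assumption: the agent passes one JSON-formatted `query` string.
        query: {
          type: "string",
          description: "JSON with jobTitle, companyIndustry and location",
        },
      },
      required: ["query"],
    },
  },
};

// Hypothetical dispatcher: run the tool the model picked and return only the
// configured response field. Synchronous for brevity; a real workflow call
// would be asynchronous.
function dispatchToolCall(toolCall, handlers, responseField = "response") {
  const name = toolCall.function.name;
  const handler = handlers[name];
  if (typeof handler !== "function") {
    throw new Error(`Unknown tool: ${name}`);
  }
  // Chat models send tool arguments as a JSON-encoded string.
  const args = JSON.parse(toolCall.function.arguments);
  const result = handler(args);
  return result[responseField];
}
```

This is why the description matters so much: it is the only thing the model sees when deciding whether a request like “CEOs in real estate in Chicago” should trigger the tool.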

Testing the AI Agent

Alright, the moment of truth! Both workflows are set up. Now, let’s see our AI agent in action. Open the chat interface for your agent workflow in n8n: with a “When chat message received” trigger in place, n8n adds a chat button to the workflow canvas, and you can also get there by clicking on the trigger node.

Initiate a chat with your AI agent. Try asking it something natural, like: “Can you get CEOs in real estate in Chicago?” or “Find founders in technology in San Francisco.” Be specific, but conversational!

What should happen? The agent should process your request, recognize that it needs to use the grabProfiles tool, call your Google Scrape Tool workflow, and then respond to you once the profiles have been obtained and saved to your Google Sheet. You can then pop over to your Google Sheet directly to verify the results. If everything’s working, you’ll see those shiny new LinkedIn URLs appearing there! How cool is that?

Limitations and Advanced Considerations

Okay, let’s talk brass tacks. While this method is awesome, it does have a few limitations. Typically, this approach will return about 10 results per search. If you need to pull in a lot more data, you might run into Google’s anti-scraping measures, like those annoying CAPTCHAs. Google is pretty smart about detecting automated requests, and they don’t always play nice.

For larger-scale scraping operations, where you need hundreds or thousands of results, you’ll want to consider using a dedicated Google Search API like SerpAPI. These services are specifically designed to handle high volumes of search queries and can bypass common scraping obstacles, giving you more reliable and extensive data sets. Think of them as the heavy-duty industrial version of our current setup.
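To give a feel for the API route, here is a minimal sketch of the same search against SerpAPI. It assumes SerpAPI’s Google endpoint (`https://serpapi.com/search.json` with `engine=google`, `q`, and `api_key`) and that result links appear under `organic_results[].link` in the JSON response; check SerpAPI’s documentation for current parameters and pricing. Both helper names are hypothetical, and no network call is made here.

```javascript
// Hypothetical helper: request URL for SerpAPI's Google engine (assumed
// parameters: engine, q, api_key).
function buildSerpApiUrl(q, apiKey) {
  const url = new URL("https://serpapi.com/search.json");
  url.searchParams.set("engine", "google");
  url.searchParams.set("q", q);
  url.searchParams.set("api_key", apiKey);
  return url.toString();
}

// Pick LinkedIn profile links out of an already-parsed JSON response.
function linkedInLinksFromSerpApi(response) {
  const results =
    response && Array.isArray(response.organic_results)
      ? response.organic_results
      : []; // no organic results -> empty list
  return results
    .map((r) => r.link)
    .filter((link) => typeof link === "string" && /linkedin\.com\/in\//i.test(link));
}
```

Because the API returns structured JSON, the regex-over-HTML step disappears; in n8n, the HTTP Request node would call this URL and a Code node could apply the filter.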

Critical Safety and Best Practice Tips

Building powerful automation tools comes with great responsibility! Here are some crucial tips to keep your workflows running smoothly and ethically:

⚠️ Respect Rate Limits: This is super important! Be mindful of the API rate limits for both OpenAI and any external services (like Google Search APIs) you integrate. Exceeding these limits can lead to temporary bans, throttled requests, or even unexpected costs. Always check the documentation for the services you’re using!
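One common way to respect rate limits is to space requests out and, when a service answers with HTTP 429 (Too Many Requests) or a temporary 5xx error, wait progressively longer before retrying. Here is a minimal sketch of that exponential-backoff decision; the base delay, cap, and retry limit are illustrative assumptions, not limits published by OpenAI or Google, and `shouldRetry` and `backoffDelayMs` are hypothetical names.

```javascript
// Hypothetical helpers: decide whether to retry and how long to wait.
// Defaults are illustrative, not limits published by any provider.
function shouldRetry(status, attempt, maxRetries = 4) {
  const retryable = status === 429 || (status >= 500 && status < 600);
  return retryable && attempt < maxRetries;
}

// Exponential backoff: base * 2^attempt, capped at maxMs, then scaled by a
// random factor ("full jitter") so many clients don't retry in lockstep.
function backoffDelayMs(
  attempt,
  { baseMs = 1000, maxMs = 30000, jitter = Math.random } = {}
) {
  const exponential = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(jitter() * exponential);
}
```

In a workflow, you could feed the computed delay into a Wait node between requests rather than retrying immediately.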

⚠️ Ethical Scraping: Always, always, always adhere to the terms of service of the websites you are scraping. Avoid overwhelming servers with excessive requests – that’s just rude and can get your IP blocked! Only collect publicly available information. And be super aware of privacy regulations like GDPR and CCPA when handling any personal data. We’re building cool tech, but we’re doing it responsibly!

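💡 Error Handling: Implement robust error handling in your n8n workflows. Inside Code nodes, wrap parsing logic in try/catch blocks (a common programming concept for handling errors); for other nodes, use n8n’s built-in options such as Retry On Fail, the node’s On Error setting, or a dedicated error workflow. This lets you gracefully manage unexpected responses from APIs or parsing failures, keeps your workflow from crashing mid-run, and helps ensure your data stays intact. It’s like having a safety net for your automation!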
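Inside a Code node, that safety net can be an ordinary JavaScript try/catch. Here is a minimal sketch, assuming you’d rather emit a result describing the failure than stop the run; `safeParse` and its `ok`/`value`/`error` output shape are hypothetical, chosen so an IF node downstream could route failures separately.

```javascript
// Hypothetical wrapper: run a parser and turn failures into data instead of
// a crashed execution, so later nodes can route or log the problem.
function safeParse(parse, input) {
  try {
    const value = parse(input);
    return { ok: true, value, error: null };
  } catch (err) {
    // Keep only a short message; the full page HTML is usually too large to log.
    const message = err instanceof Error ? err.message : String(err);
    return { ok: false, value: null, error: message };
  }
}

// Example use in an n8n Code node with any parser function of your own:
// return [{ json: safeParse(myParser, $input.first().json.data) }];
```

The trade-off is that failures no longer stop the workflow, so something downstream has to check `ok` and decide what to do.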

💡 Dynamic Variables: Avoid hardcoding values directly into your nodes whenever possible. Instead, utilize dynamic expressions and variables. This makes your workflows flexible and adaptable to different inputs and scenarios. It’s the difference between a rigid machine and a versatile robot!

Key Takeaways

So, what have we learned today, my fellow automation enthusiast?

- Modular Design: Building separate workflows for the scraping tool and the AI agent isn’t just good practice; it makes your automation easier to reuse, understand, and maintain. It’s like building a complex machine from smaller, specialized parts.

- AI for Parsing: OpenAI’s GPT models are truly amazing! They’re incredibly effective at taking natural language queries and turning them into structured data, often without you needing to write a single line of complex parsing code. Mind-blowing, right?

- Leverage AI Assistants: Tools like ChatGPT, especially with specialized n8n assistants, can seriously speed up your development process. They’re like having an expert sitting right next to you, ready to provide code snippets and configuration guidance.

- Google Sheets Integration: Seamlessly storing your scraped data in Google Sheets makes it super easy to access, organize, and analyze later. It’s your personal data warehouse!

- Scalability Considerations: For those really big data missions, remember that dedicated search APIs are your friends. They help you overcome the limitations of direct HTTP requests and handle massive volumes of data with ease.

Conclusion

Building a Google scraping AI agent with n8n is a game-changer. It empowers you to automate complex data collection tasks, transforming what used to be hours of tedious manual work into a swift, automated process. This guide has shown you how to combine n8n’s intuitive visual workflow builder with the incredible power of AI to create a customized solution for extracting valuable information, like LinkedIn profiles, directly into your Google Sheets. It’s like having a personal data miner at your fingertips!

While our DIY approach offers unparalleled flexibility and can save you a ton of money, it’s important to be realistic about its limitations, especially when you’re dealing with massive data extraction needs. In those cases, dedicated APIs might be the more robust solution. But no matter the scale, always, always prioritize ethical scraping practices and build in robust error handling. This ensures your automated workflows are not just powerful, but also sustainable and responsible.

Now, you’re armed with the knowledge and the tools. Go forth, build your own AI agent, and unlock new possibilities for automation! I’d love to hear about your experiences and any innovative ways you put this to use. Share your discoveries in the comments below – let’s build the future together!

Frequently Asked Questions (FAQ)

Q: Why use n8n for this project instead of just writing a Python script?

A: Great question! While Python is super powerful for scripting, n8n offers a visual, low-code approach that makes building and managing complex workflows much easier, especially for those of us who aren’t full-time developers. You can see your entire workflow at a glance, debug visually, and integrate with hundreds of services without writing custom API calls for each. It’s about speed, visibility, and accessibility for automation!

Q: What happens if Google blocks my IP address due to too many requests?

A: Ah, the dreaded IP block! This is a common challenge with direct scraping. If Google detects unusual activity (like too many requests from one IP), it might temporarily block you or present CAPTCHAs. That’s why for large-scale operations, I recommend using dedicated Google Search APIs like SerpAPI. They handle the IP rotation and anti-blocking measures for you, making your scraping much more reliable. For smaller, occasional tasks, you might just need to wait a bit for the block to lift or consider using a proxy service.

Q: Can I use a different AI model instead of OpenAI’s GPT models?

A: Absolutely! n8n is designed to be flexible. While we used OpenAI’s GPT models here because they’re incredibly powerful and versatile, n8n supports integrations with other AI services and models. As long as the model can process natural language and return structured data (ideally JSON), you can likely swap it out. Just make sure to adjust the node configurations and prompts accordingly.

Q: How can I handle more than 10 search results per query?

A: As mentioned, direct Google scraping often limits you to about 10 results. To get more, you’d typically need to paginate through results (which gets complicated with Google’s anti-bot measures) or, more reliably, use a specialized Google Search API (like SerpAPI or Google’s Custom Search API). These services are built to provide more comprehensive results and handle the complexities of large-scale data extraction.
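If you do try paging through Google’s regular results page, the usual mechanism is the `start` query parameter, which offsets the results (10 skips the first page, 20 the first two). This minimal sketch only builds the page URLs; it assumes about 10 results per page, as described above, and leaves the actual requests and the delays between them to your workflow. `buildPagedSearchUrls` is a hypothetical helper, and Google’s anti-bot measures may still block later pages.

```javascript
// Hypothetical helper: URLs for the first `pages` result pages of one query.
// Assumes Google's `start` offset and roughly 10 results per page.
function buildPagedSearchUrls(q, pages, pageSize = 10) {
  if (!Number.isInteger(pages) || pages < 1) {
    throw new RangeError("pages must be a positive integer");
  }
  const urls = [];
  for (let page = 0; page < pages; page++) {
    const url = new URL("https://www.google.com/search");
    url.searchParams.set("q", q);
    if (page > 0) {
      url.searchParams.set("start", String(page * pageSize)); // 10, 20, ...
    }
    urls.push(url.toString());
  }
  return urls;
}
```

In n8n you could feed these URLs through a Loop Over Items node with a Wait node between requests, keeping the page count small.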

Q: Is it possible to scrape other social media platforms like Facebook or Instagram using a similar method?

A: In theory, yes, the concept of using an AI agent to parse queries and an HTTP request node to fetch data is transferable. However, each platform has its own unique terms of service, rate limits, and anti-scraping mechanisms, and Facebook and Instagram in particular are strict about automated data collection. You’d likely need to use their official APIs (if available for your use case) or specialized third-party tools that comply with their policies, rather than direct HTTP requests, to avoid immediate blocks and legal issues. Always check the platform’s rules first!